Kling AI's latest models (kling-video-o1/image-to-video, kling-video-o1/video-edit, and kling-video-o1/video-edit-fast) offer advanced video generation capabilities suitable for a wide range of technical applications.

When integrating the official Kling API, however, teams need to account for several operational constraints, including the required Kling deposit, the fixed throughput tiers, and the standard Kling concurrency limits. These factors affect how the models can be incorporated into production workloads.

Access to the Kling O1 series typically begins with a minimum deposit of 10,000 RMB (~$1,400 USD). Higher throughput levels require larger commitments, often above 30,000 RMB, and under the standard tier the official API allows only 5 concurrent requests (often described as a 5-QPS limit). For systems that depend on batch processing, multi-user workflows, or time-sensitive automation, a 5-request ceiling can be a meaningful architectural limitation.
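If you do stay on the standard tier, the client has to enforce the cap itself so that bursts of work wait locally instead of being rejected. Below is a minimal Python sketch that assumes a cap of 5 in-flight requests. `submit_job` is a hypothetical placeholder for the real Kling API call, not part of any SDK.

```python
import asyncio

# Assumption: the standard-tier cap of 5 concurrent requests described above.
MAX_CONCURRENT = 5


async def submit_job(job_id: int) -> str:
    """Hypothetical stand-in for one Kling API call; replace with a real HTTP request."""
    await asyncio.sleep(0.01)  # simulate network and render latency
    return f"job-{job_id}-done"


async def run_batch(job_ids, limit: int = MAX_CONCURRENT):
    """Run every job, but never more than `limit` at the same time.

    Returns the results (in input order) and the peak number of in-flight calls.
    """
    semaphore = asyncio.Semaphore(limit)
    in_flight = 0
    peak = 0

    async def guarded(job_id):
        nonlocal in_flight, peak
        async with semaphore:  # extra jobs wait here instead of hitting the API
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await submit_job(job_id)
            finally:
                in_flight -= 1

    results = await asyncio.gather(*(guarded(j) for j in job_ids))
    return results, peak
```

`asyncio.run(run_batch(range(20)))` returns all 20 results in input order, and the reported peak never exceeds 5. This caps *concurrent* requests. A limit expressed in requests per second would need a rate limiter, such as a token bucket, instead.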

For SaaS platforms, content automation tools, and video-centric applications, understanding these limits early helps determine whether the official Kling API can support expected traffic patterns.

This guide outlines how developers can work with Kling AI models—including image-to-video, video editing, and fast transformation paths—and how different access layers or high-concurrency API routes may affect deployment choices depending on system requirements.

Let's begin.

The Complete Kling AI Model Landscape: What Can You Build?

To understand the full value of Kling AI and why it has become a breakthrough in AI video generation, it's essential to look at the complete Kling O1 model ecosystem. Many developers only associate Kling with basic text-to-video generation, but the reality is that the Kling API provides a multi-stage video production suite—covering creation, animation, editing, and high-speed transformation.

Below is a comprehensive breakdown of the core Kling O1 video model capabilities, including how each model fits into modern SaaS tools, content automation pipelines, and production workflows.

1. Text-to-Video (The Creative Engine)

- What it is: The foundation of the Kling video generation ecosystem. This model converts simple text prompts into complex cinematic video scenes (e.g., "a cinematic drone shot of Tokyo at night").

- The Reality: Great for ideation and creative brainstorming, but it often lacks the precision required for brand-controlled assets or strict visual consistency.

2. Image-to-Video (The Precision Tool)

- Model: kling-video-o1/image-to-video

- Why it matters: This is where B2B and SaaS applications see massive ROI. Instead of relying on random generation, you upload a specific reference image (a product photo, avatar, 3D render, or character design) and generate consistent animation.

- Best for:

- E-commerce product showcases

- NFT or digital avatar animation

- Brand-consistent storytelling

- Automated marketing content

This is one of the most in-demand image-to-video models on the market, particularly for high-volume workflows that depend on a high-concurrency AI API.

3. Video Editing & Transformation (The VFX Suite)

- Model: kling-video-o1/video-edit

- What it is: A breakthrough in AI-assisted video editing. Developers can modify existing footage—adjust backgrounds, apply stylistic changes, or replace visual elements—without reshooting.

- Best for:

- Post-production automation

- Localization workflows

- Multi-version video campaigns

- Creator tools and video platforms that require rapid turnaround

This model is one of the core strengths of the Kling API because it moves beyond generation into true VFX-style transformation.

4. High-Speed Editing (The Real-Time Solution)

- Model: kling-video-o1/video-edit-fast

- What it is: A low-latency optimized version of the editing model designed for fast response times.

- Best for:

- User-facing apps

- Real-time video filters

- Rapid prototyping

- High-interaction creative tools

In high-traffic environments, this model benefits significantly from EvoLink's high concurrency AI API, ensuring stable performance even under load.

How to Access Kling O1: The Two Integration Paths

When integrating Kling AI and its Kling O1 video generation models into your application, you have two fundamentally different paths. Each comes with its own trade-offs in budget, deposits, and scalability, and in whether you can avoid the strict Kling deposit and concurrency limits that developers frequently encounter.

Let's examine both options objectively.

Path 1: Direct Integration via the Official Kling API

This is the "enterprise route." If your organization has a large procurement budget and requires direct relationships with model providers, the official Kling API can be a valid choice. But it comes with significant operational overhead:

The Hurdle — Large Deposit Requirement

To obtain an official Kling API key, developers must pre-deposit 10,000 RMB (~$1,400 USD) upfront. Higher concurrency tiers require 30,000 RMB or more, which becomes a barrier for startups and independent developers.

The Bottleneck — Severe Concurrency Limits

Even after meeting the minimum deposit, the standard tier caps you at 5 concurrent requests (the "5-QPS" limit). This Kling concurrency limit makes it nearly impossible to scale SaaS products, video tools, or high-traffic APIs.

In short, the official path is optimized for traditional enterprises—not developers who need rapid iteration or flexible usage-based billing.

Path 2: The Production Layer via EvoLink (High-Concurrency AI API)

EvoLink functions as a production-grade abstraction layer on top of enterprise Kling infrastructure. We aggregate high-tier quotas (including high QPS pools) and convert them into a pay-as-you-go, no-deposit model—providing the same Kling O1 performance without financial barriers.

What EvoLink Provides

- No Deposit Required — Zero upfront cost.

- High Concurrency — Enterprise-grade throughput from Day 1.

- OpenAI-Compatible API — OpenAI-style conventions: Bearer token authentication and a JSON request body with a `model` field.

- Unified Billing — All models under one consolidated bill.

- Same Model Quality — 100% identical to official Kling O1 models.

Developers get all the upside of the Kling ecosystem while bypassing the deposit system, QPS caps, and signature-based authentication.

Comparison Table: Official Kling API vs. EvoLink API

| Feature | Official Kling API | EvoLink API |
|---|---|---|
| Upfront Cost | ¥10,000+ Deposit | $0 (Pay-as-you-go) |
| Concurrency | 5 Concurrent Requests (Standard Tier) | High Concurrency (Enterprise Pool) |
| API Format | Proprietary / AK-SK Auth | OpenAI-Compatible |
| Billing | Pre-paid deposits | Unified usage-based billing |
| Model Quality | 100% Original Kling O1 | 100% Original Kling O1 |

3 Minutes to Production: The Integration Guide

Different video-generation providers often use their own authentication schemes or SDK structures, which can introduce additional setup steps for teams managing multiple models. To streamline this, every model accessed through EvoLink's standardized interface, including the kling-o1-video-edit family, uses the same request format: Bearer token authentication and a unified JSON input body. This allows Kling O1 to be used alongside other video and image models without learning separate SDKs or signature rules.

Below is an example request for the kling-o1-video-edit model:

```json
{
  "model": "kling-o1-video-edit",
  "prompt": "Make the video more cinematic",
  "video_urls": [
    "https://example.com/original-video.mp4"
  ],
  "image_urls": [
    "https://example.com/reference.jpg"
  ]
}
```

Why Developers Love This Structure

- Zero Learning Curve — No proprietary parameters.

- Scalable Architecture — video_urls and image_urls accept arrays for batch operations.

- Standard Bearer Auth — No cryptographic signing required.

- High Concurrency by Default — Pair this structure with EvoLink's high concurrency AI API, and you can immediately scale generation workloads.
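A request in this shape can be assembled with nothing but the Python standard library. This is a sketch only. `API_URL` is a placeholder, not EvoLink's documented endpoint, and `build_edit_request` is a hypothetical helper that builds the request without sending it.

```python
import json
import urllib.request

# Placeholder endpoint: check EvoLink's documentation for the real URL and path.
API_URL = "https://api.example.com/v1/videos/generations"


def build_edit_request(api_key: str, prompt: str, video_urls, image_urls=None):
    """Build (but do not send) a kling-o1-video-edit request with Bearer auth."""
    body = {
        "model": "kling-o1-video-edit",
        "prompt": prompt,
        "video_urls": list(video_urls),
    }
    if image_urls:  # reference images are optional in this sketch
        body["image_urls"] = list(image_urls)
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # plain Bearer token, no request signing
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, use `with urllib.request.urlopen(req) as resp: result = json.load(resp)`. The shape of the response body depends on the provider and is not covered here.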

From Experiment to Enterprise: 3 High-Value Use Cases

Accessing the Kling AI models is only the starting point. The real impact appears when these models are embedded into repeatable workflows and can run at scale—without being constrained by the official Kling concurrency limits or the 5-QPS ceiling. In a high-concurrency setup (for example, through an aggregation layer like EvoLink), Kling O1 can support production-grade video pipelines instead of remaining a one-off experiment.

Below are three representative use cases that show how Kling O1 fits into real-world systems.

1. The E-Commerce Content Engine (Image-to-Video)

Large online stores often manage tens of thousands of SKUs. Most products only have static images, and shooting bespoke video for each one is economically unrealistic.

Use kling-video-o1/image-to-video to build an automated pipeline that takes catalog images and generates short (3–5 second) product showcase clips for TikTok, Instagram Reels, or product detail pages (PDPs). This turns existing image assets into lightweight video content at scale.

When paired with a high concurrency AI API, the pipeline can process large SKU batches in parallel—rather than queueing jobs one by one under a 5-QPS limit from the official Kling API. For teams running nightly or hourly batch jobs, this difference directly translates into how many products can realistically be covered.
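The difference is easy to quantify. The back-of-the-envelope sketch below assumes every job takes the same time and that a freed slot is reused immediately. The 60-second job duration and the 50-slot comparison are illustrative placeholders, not measured Kling figures.

```python
import math


def batch_wall_time(num_jobs: int, concurrency: int, seconds_per_job: float) -> float:
    """Rough lower bound on wall-clock time to clear a batch.

    Assumes uniform job duration, so jobs run in ceil(num_jobs / concurrency)
    back-to-back "waves".
    """
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    waves = math.ceil(num_jobs / concurrency)
    return waves * seconds_per_job


def jobs_per_window(window_seconds: float, concurrency: int, seconds_per_job: float) -> int:
    """How many whole jobs fit into a fixed window (e.g. a nightly batch run)."""
    return int(window_seconds // seconds_per_job) * concurrency
```

With these placeholder numbers, 10,000 SKUs take `batch_wall_time(10_000, 5, 60)` = 120,000 s (about 33 hours) at 5 concurrent requests, versus 12,000 s (about 3.3 hours) at 50. An 8-hour nightly window at 5 concurrent requests covers `jobs_per_window(8 * 3600, 5, 60)` = 2,400 products.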

2. AI Video Editor for Creators (Video Edit)

Individual creators and SaaS tools serving them need to adapt video styles quickly to match trends. Manual editing for each variation is slow and doesn't scale well with daily content output.

Integrate kling-o1-video-edit into your product workflow. A user uploads a vlog or short clip and specifies a target style—e.g., "90s VHS tape," "cyberpunk neon," or "more cinematic." The model applies the transformation directly on the source footage.

In multi-tenant SaaS environments where many users queue edits at once, relying solely on the standard official tier of the Kling API can create latency spikes and backlog. Running Kling O1 behind an aggregation layer that supports higher concurrency and queue management helps keep response times predictable as usage grows.
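One common piece of that queue management is fairness between tenants, so that a single user submitting a large batch does not delay everyone else. Here is a minimal round-robin sketch. `FairQueue` is a hypothetical helper, and a production system would also need persistence and retries.

```python
from collections import OrderedDict, deque


class FairQueue:
    """Round-robin job queue: serves one job per tenant in turn."""

    def __init__(self):
        # tenant_id -> deque of pending jobs; dict order is the rotation order
        self._queues = OrderedDict()

    def push(self, tenant_id, job):
        self._queues.setdefault(tenant_id, deque()).append(job)

    def pop(self):
        """Return (tenant_id, job) for the next tenant in rotation, or None if empty."""
        if not self._queues:
            return None
        tenant_id, jobs = next(iter(self._queues.items()))
        job = jobs.popleft()
        if jobs:
            self._queues.move_to_end(tenant_id)  # tenant goes to the back of the line
        else:
            del self._queues[tenant_id]  # drained tenants leave the rotation
        return tenant_id, job

    def __len__(self):
        return sum(len(jobs) for jobs in self._queues.values())
```

A worker loop would call `pop()` whenever a concurrency slot frees up, taking one job from each tenant in turn. A tenant with three queued edits and a tenant with one are therefore interleaved rather than served strictly first-come, first-served.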

3. Automated Social Media Scaling

Brands that publish across TikTok, YouTube Shorts, Instagram, and other channels need a steady stream of short-form video. Manual production for every post quickly becomes a bottleneck.

Combine a script generator (e.g., GPT-4) with Kling O1 video generation to build an automated content engine:

- generate a short script or storyboard,

- turn it into a basic visual plan,

- call the Kling AI video models to produce final clips,

- hand them off to a scheduler for distribution.

For daily or hourly automation, the difference between a 5-QPS cap and a high-concurrency AI API backed by pooled capacity is not just theoretical—it determines whether the pipeline can clear the day's workload on time or continually fall behind.
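The four steps above can be sketched as a small pipeline. Every stage function here is a hypothetical stub standing in for a real LLM call, Kling O1 request, or scheduler API. The point of the sketch is that only the rendering step runs in parallel, bounded by whatever concurrency your access tier allows.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def generate_script(topic: str) -> str:
    # Hypothetical stub for an LLM call (e.g. GPT-4) that writes a short script.
    return f"A short script about {topic}"


def plan_visuals(script: str) -> str:
    # Hypothetical stub: turn the script into a prompt for the video model.
    return f"Cinematic vertical video. {script}"


def render_clip(prompt: str) -> str:
    # Hypothetical stub for a Kling O1 generation request; returns a fake clip URL.
    clip_id = hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]
    return f"https://example.com/clips/{clip_id}.mp4"


def schedule_post(clip_url: str) -> dict:
    # Hypothetical stub for handing the clip to a social media scheduler.
    return {"clip_url": clip_url, "status": "scheduled"}


def run_pipeline(topics, concurrency: int = 5):
    # Script and planning steps are cheap in this sketch, so they run sequentially.
    prompts = [plan_visuals(generate_script(t)) for t in topics]
    # Rendering is the slow, rate-limited step: run it in parallel, but cap the
    # number of worker threads at the concurrency your access tier allows.
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        clips = list(pool.map(render_clip, prompts))
    return [schedule_post(url) for url in clips]
```

Raising `concurrency` is the only change needed when moving from a 5-request tier to a higher-capacity one; the rest of the pipeline stays the same.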

Conclusion: Don't Let Gatekeepers Stop Your Innovation

The Kling O1 video models represent a massive leap forward in AI generation. They are arguably the best video models available publicly today. However, the official barriers to entry are designed for massive corporations, not for agile developers and startups: a 10,000 RMB (~$1,400) deposit and a crippling cap of 5 concurrent requests. EvoLink exists to bridge this gap. We believe you shouldn't have to mortgage your house to test an API, and you shouldn't have to wait in line to generate your videos.

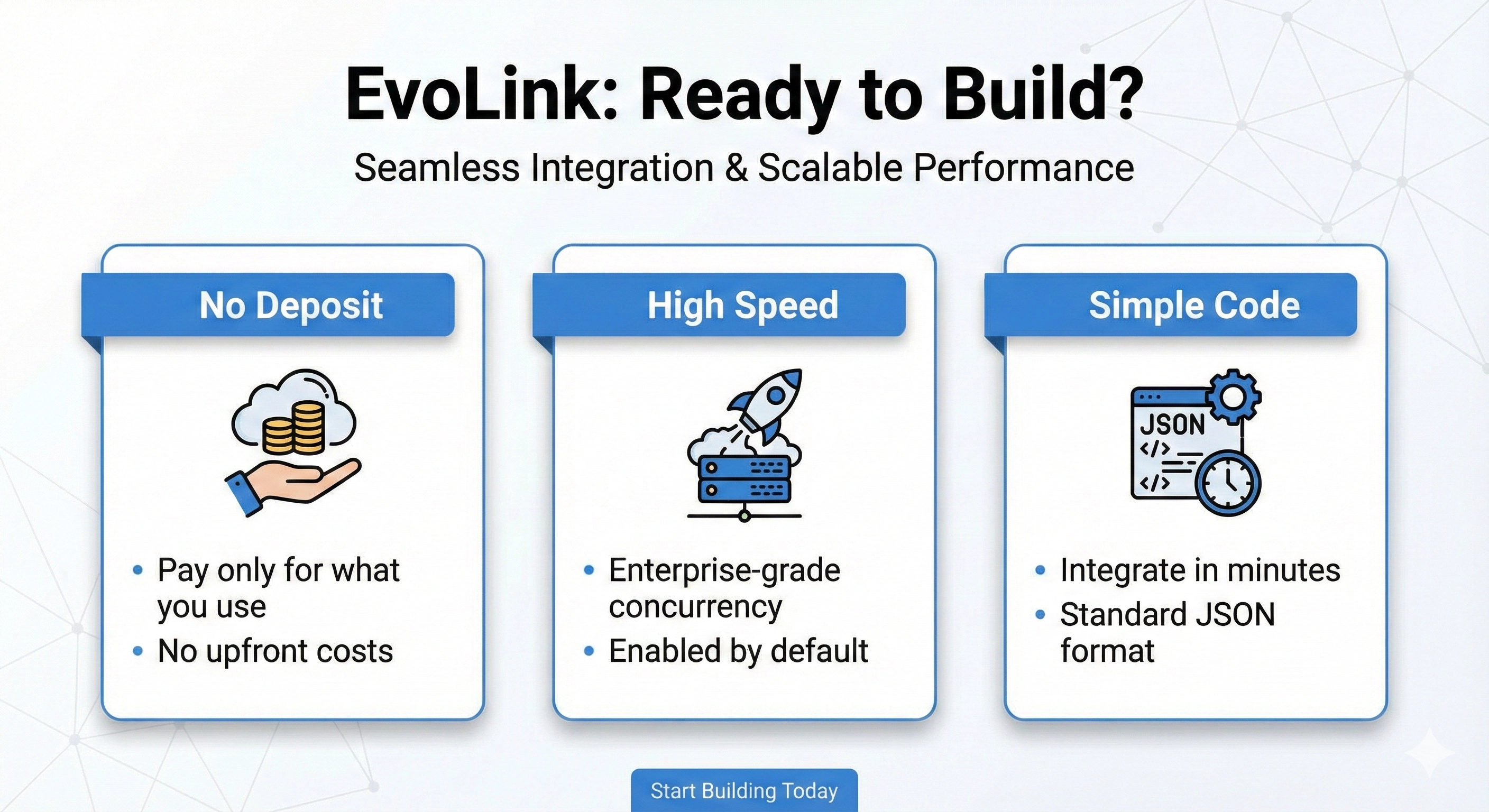

Get Your EvoLink API Key Now

Ready to build?

- No Deposit: Pay only for what you use.

- High Speed: Enterprise-grade concurrency enabled by default.

- Simple Code: Integrate in minutes with standard JSON.

Frequently Asked Questions (FAQ)

1. How do I optimize Kling O1 for low-latency applications?

While prompt design and requesting shorter clips may help, the most significant factors are model choice and the infrastructure serving the model. For interactive use, start with the low-latency kling-video-o1/video-edit-fast variant. Using a service like EvoLink.ai with built-in load balancing and performance routing provides a more stable latency profile than direct API calls, which is critical for latency-sensitive applications.

2. What are the rate limits for the Kling O1 API?

Official rate limits depend on your payment tier: the standard tier allows 5 concurrent requests, and higher tiers require larger deposits. With EvoLink, you benefit from our high, unified rate limits, which are designed for production-scale applications from day one.

3. How does Kling O1 handle concurrency?

As with any hosted generation model, managing concurrent requests effectively is an infrastructure challenge. Sending more requests than your tier allows directly to the provider's API leads to throttling. EvoLink's platform is built to handle high concurrency, managing a queue of requests and scaling resources to meet demand without you needing to build that logic yourself.
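If you do call an API directly, the standard client-side defense against throttling is to retry with exponential backoff and jitter. Here is a minimal sketch that assumes your HTTP client raises a hypothetical `RateLimitedError` when the API responds with HTTP 429.

```python
import random
import time


class RateLimitedError(Exception):
    """Hypothetical error your client raises when the API returns HTTP 429."""


def call_with_backoff(fn, max_retries: int = 5, base_delay: float = 0.5,
                      max_delay: float = 30.0, sleep=time.sleep):
    """Call fn(), retrying on RateLimitedError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitedError:
            if attempt == max_retries:
                raise  # out of retries: surface the throttling error to the caller
            # Full jitter: wait a random time in [0, min(max_delay, base_delay * 2**attempt)].
            delay = random.uniform(0, min(max_delay, base_delay * (2 ** attempt)))
            sleep(delay)
```

The `sleep` parameter is injectable so the logic can be tested without real waiting. Full jitter spreads out retries from many workers so they do not hit the API again in lockstep.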

4. Is Kling O1 suitable for real-time applications?

It depends on your definition of "real-time." Video generation and editing jobs take far longer than a typical text request, so Kling O1 fits best where users can wait while a clip renders, for example in a submit-and-notify UI. In that setting it can work well, especially with the low-latency kling-video-o1/video-edit-fast model and the performance stability provided by EvoLink. For applications requiring sub-second responses, it is not the right choice.
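Whatever latency target you choose, video jobs are commonly handled asynchronously: the app submits the job, then polls for the result (or receives a callback) while the UI shows progress. Below is a minimal polling sketch that assumes the API returns a task ID whose status you can query. `get_status` and the `"succeeded"`/`"failed"` status values are hypothetical, so map them to the fields your provider actually returns.

```python
import time


def wait_for_result(get_status, task_id: str, interval: float = 2.0,
                    timeout: float = 600.0, sleep=time.sleep, clock=time.monotonic):
    """Poll get_status(task_id) until the job finishes, fails, or times out.

    get_status is assumed to return a dict such as {"status": "...", "url": ...}.
    """
    deadline = clock() + timeout
    while True:
        result = get_status(task_id)
        status = result.get("status")
        if status == "succeeded":
            return result
        if status == "failed":
            raise RuntimeError(f"task {task_id} failed: {result}")
        if clock() + interval > deadline:
            raise TimeoutError(f"task {task_id} not finished after {timeout} s")
        sleep(interval)  # still processing: wait before asking again
```

A fixed interval keeps the sketch simple. Longer jobs can use a growing interval to reduce the number of status calls.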

5. How can I reduce costs when using Kling O1 at scale?

Cost reduction at scale comes from two primary sources: better pricing and smarter routing. EvoLink provides access to wholesale pricing and volume discounts. Additionally, you can route simpler jobs, such as drafts and previews, to cheaper, faster models while reserving Kling O1 for tasks that truly require its quality, all through our single API endpoint.
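The routing logic itself can be very small. The sketch below uses a hypothetical `Job` type and placeholder model IDs. `budget-video-model` does not refer to any real model; substitute the identifiers your provider actually exposes.

```python
from dataclasses import dataclass


@dataclass
class Job:
    prompt: str
    is_final_render: bool  # False for drafts, previews, and throwaway variants
    needs_reference_fidelity: bool = False  # e.g. must match a product photo exactly


# Placeholder model IDs for illustration only.
PREMIUM_MODEL = "kling-o1-video-edit"
BUDGET_MODEL = "budget-video-model"  # hypothetical cheaper/faster model


def choose_model(job: Job) -> str:
    """Reserve Kling O1 for jobs that need it; send everything else to a cheaper tier."""
    if job.is_final_render or job.needs_reference_fidelity:
        return PREMIUM_MODEL
    return BUDGET_MODEL
```

Because every model sits behind the same request format, the chosen ID can be dropped straight into the `model` field of the request body.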

6. What are the best alternatives to Kling O1?

If you're evaluating alternatives to Kling O1 for production video workflows, consider comparing it with other state-of-the-art generative video models such as:

- Sora 2 — known for long-duration coherent scenes and strong physical consistency

- Veo 3.1 — optimized for cinematic motion, smooth camera paths, and high-resolution output

- Wan 2.5 — strong at stylized generation and complex scene composition

- Runway Gen-3 Alpha

- Luma Dream Machine

- Pika Labs

- Stable Video Diffusion

Each engine offers different advantages: some excel at dynamic camera motion, others at fine-grained detail, others at rapid iteration speed or editing-first workflows.

For most teams, the best strategy is to evaluate several models in parallel. Using an abstraction layer that supports multiple video-generation engines makes this easier—you can swap models or run A/B tests without restructuring your codebase. This approach ensures your pipeline remains flexible as new video models emerge.
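For A/B tests, each user should consistently see output from the same model. Below is a minimal sketch of deterministic, weighted assignment by hashing the user ID. The model IDs in the test and usage example are placeholders.

```python
import hashlib


def assign_model(user_id: str, variants: dict) -> str:
    """Deterministically map a user to a model using weighted hash bucketing.

    variants maps model ID -> weight, e.g. {"kling-o1-video-edit": 0.5, "alt-video-model": 0.5}.
    The same user always lands on the same model, which keeps comparisons clean.
    """
    total = sum(variants.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number between 0 and 1.
    point = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    for model_id, weight in variants.items():
        cumulative += weight / total
        if point < cumulative:
            return model_id
    return model_id  # guard against floating-point rounding at the upper edge
```

For example, `assign_model("user-42", {"kling-o1-video-edit": 0.5, "alt-video-model": 0.5})` returns the same model on every call for that user. Swapping in a new model only means editing the `variants` dictionary.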