OpenRouter vs liteLLM vs Build vs Managed: Choosing an LLM Abstraction Strategy

As LLM usage grows, many teams reach the same inflection point:

"Direct APIs are no longer enough—but what should sit in between?"

At that stage, the question is rarely whether to introduce an abstraction layer. The real question is which abstraction strategy fits your constraints.

This article compares four common approaches:

- OpenRouter

- liteLLM

- Building an in-house gateway

- Using a managed gateway

The goal is not to rank tools, but to clarify boundaries, trade-offs, and decision criteria.

The Four Approaches, Defined Clearly

Before comparing, it helps to define what each option actually represents.

OpenRouter

A hosted routing layer that aggregates access to many LLM providers behind a unified API surface.

Teams typically use it to:

- Access multiple models quickly

- Avoid managing individual provider contracts

- Experiment across providers with minimal setup
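
As a rough illustration of what a "unified API surface" means in practice, the sketch below builds (but does not send) a chat request. It assumes OpenRouter's OpenAI-compatible chat completions endpoint; the model identifiers and the `build_chat_request` helper are illustrative and not taken from this article, so check the current API reference before relying on them.

```python
import json
import urllib.request

# Assumed endpoint: OpenRouter's OpenAI-compatible chat completions URL.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for one chat completion (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Illustrative model IDs: only the model string changes between providers.
for model in ("openai/gpt-4o-mini", "anthropic/claude-3.5-sonnet"):
    req = build_chat_request("YOUR_API_KEY", model, "Summarize this ticket.")
    print(req.get_method(), req.full_url, json.loads(req.data)["model"])
```

The point of the sketch is that the URL, headers, and payload shape stay the same while the provider changes, which is what makes quick experimentation cheap.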

liteLLM

An open-source proxy that teams deploy and operate themselves.

It is often used to:

- Normalize API schemas

- Implement basic routing or fallback

- Retain control over infrastructure and data paths
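
To make "normalize and fall back" concrete, here is a minimal, generic sketch of the pattern. It is not liteLLM's API: the `Completion` type, `ProviderError`, and the two stand-in adapters are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Completion:
    """Normalized response shape shared by every adapter (hypothetical)."""
    provider: str
    text: str


class ProviderError(Exception):
    """Raised by an adapter when its upstream call fails."""


# An adapter takes a prompt and returns a normalized Completion.
Adapter = Callable[[str], Completion]


def complete_with_fallback(prompt: str, adapters: Sequence[Adapter]) -> Completion:
    """Try each adapter in order and return the first successful result."""
    errors = []
    for adapter in adapters:
        try:
            return adapter(prompt)
        except ProviderError as exc:
            errors.append(str(exc))
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in adapters; real ones would wrap a provider SDK or HTTP call.
def flaky_primary(prompt: str) -> Completion:
    raise ProviderError("primary: timeout")


def stable_secondary(prompt: str) -> Completion:
    return Completion(provider="secondary", text=f"echo: {prompt}")


result = complete_with_fallback("hello", [flaky_primary, stable_secondary])
print(result.provider, result.text)
```

Even in this small form, the team running the proxy owns the ordering of adapters, the definition of a failure, and what happens when every provider is down.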

Build (In-House Gateway)

A custom abstraction layer designed, owned, and operated by the team.

Common motivations include:

- Full control over behavior and contracts

- Deep integration with internal systems

- Custom reliability, policy, or cost logic
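
One way to picture "custom policy logic" is a gateway that runs every request through a list of checks before it reaches any provider. The sketch below is only an assumption about how such a layer might be shaped; `Gateway`, `PolicyViolation`, and both policy helpers are hypothetical names, and the backend is a stand-in function.

```python
from typing import Callable, Optional, Sequence

# A policy inspects a request and returns a rejection reason, or None to allow it.
Policy = Callable[[dict], Optional[str]]


class PolicyViolation(Exception):
    """Raised when a request is rejected before reaching any provider."""


def max_prompt_chars(limit: int) -> Policy:
    def check(request: dict) -> Optional[str]:
        if len(request["prompt"]) > limit:
            return f"prompt exceeds {limit} characters"
        return None
    return check


def allowed_teams(teams: set) -> Policy:
    def check(request: dict) -> Optional[str]:
        if request["team"] not in teams:
            return f"team {request['team']!r} is not allowed"
        return None
    return check


class Gateway:
    """Applies policies in order, then forwards the prompt to the backend."""

    def __init__(self, backend: Callable[[str], str], policies: Sequence[Policy]):
        self._backend = backend
        self._policies = list(policies)

    def complete(self, request: dict) -> str:
        for policy in self._policies:
            reason = policy(request)
            if reason is not None:
                raise PolicyViolation(reason)
        return self._backend(request["prompt"])


gateway = Gateway(
    backend=lambda prompt: f"echo: {prompt}",  # stand-in for a real provider call
    policies=[allowed_teams({"search", "support"}), max_prompt_chars(2000)],
)
print(gateway.complete({"team": "search", "prompt": "hello"}))
```

The flexibility is real, but so is the ownership: each policy added here is code the team must test, document, and keep aligned across consumers.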

Managed Gateway

A hosted abstraction layer operated by a third party.

Teams typically choose this when:

- Infrastructure ownership is not a core competency

- Reliability, observability, and governance matter

- Time-to-production is critical

What These Options Optimize For

Each approach optimizes for different constraints, not different levels of "quality".

OpenRouter optimizes for:

- Speed of access to many models

- Low operational overhead

- Experimentation and breadth

liteLLM optimizes for:

- Control over deployment

- Open-source flexibility

- DIY infrastructure workflows

Building in-house optimizes for:

- Maximum customization

- Tight integration with internal systems

- Explicit control over contracts and behavior

A managed gateway optimizes for:

- Reduced operational burden

- Production-grade reliability and observability

- Clear abstraction boundaries

Understanding what each option optimizes for matters more than feature checklists.

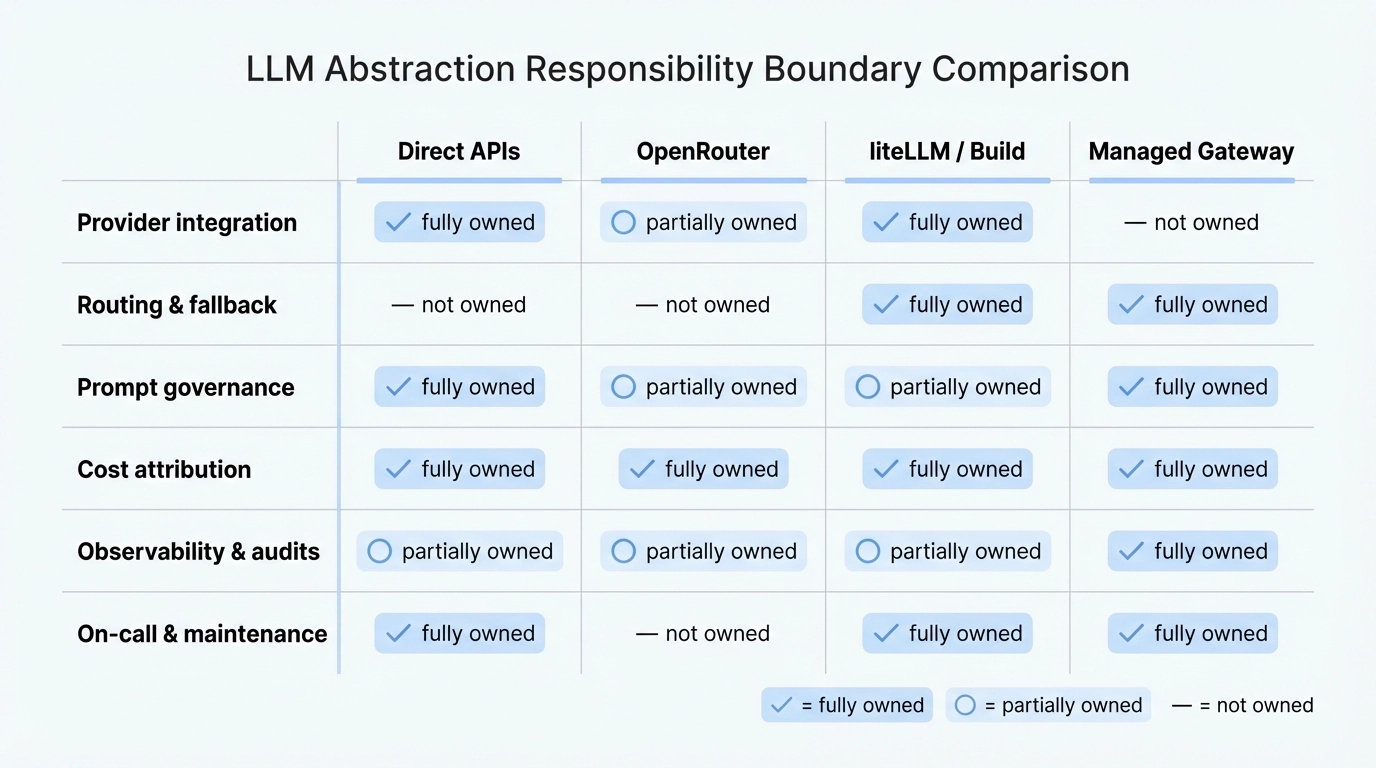

A Practical Comparison

| Dimension | OpenRouter | liteLLM | Build | Managed |
|---|---|---|---|---|
| Setup speed | Very fast | Moderate | Slow | Fast |
| Operational ownership | External | Internal | Internal | External |
| Custom behavior | Limited | Moderate | Full | Moderate–High |
| Observability & audits | Platform-defined | DIY | DIY | Built-in |
| Multi-team scaling | Moderate | Difficult | Difficult | Easier |
| Long-term maintenance | Low | Ongoing | High | Low–Moderate |

This table is not a recommendation. It highlights where cost and complexity accumulate.

When Each Option Tends to Make Sense

OpenRouter is often a good fit when:

- You need broad model access quickly

- You want to minimize infra investment

- Usage is exploratory or non-critical

- Provider churn is expected

liteLLM is often chosen when:

- You want open-source control

- You are comfortable running infra

- Requirements are still evolving

- Governance and observability are secondary

Building makes sense when:

- LLMs are core to your product

- Contracts, policies, and SLAs are non-negotiable

- You have infra expertise and long time horizons

- The abstraction itself is a competitive advantage

Managed gateways tend to work when:

- Reliability and auditability matter

- Multiple teams depend on LLMs

- You want clear operational guarantees

- You prefer buying infrastructure over staffing it

The Hidden Costs Teams Often Miss

Most teams focus on API compatibility. They underestimate organizational and operational costs.

Commonly overlooked factors include:

- On-call ownership for the abstraction layer

- Debugging cross-provider failures

- Maintaining evaluation baselines for routing decisions

- Aligning policy changes across teams

- Keeping cost attribution accurate over time

These costs tend to surface after abstraction is introduced, not before.
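
Cost attribution is a good example of work that looks trivial until someone has to maintain it. A minimal sketch, assuming per-request usage records tagged with a team name and a hand-maintained price table; the model names, prices, and the `UsageRecord` type are all made up for illustration:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageRecord:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


# Hypothetical prices in USD per 1,000 tokens: (input, output).
PRICES_PER_1K = {
    "model-a": (0.50, 1.50),
    "model-b": (0.10, 0.40),
}


def cost_by_team(records):
    """Sum the estimated spend per team from individual usage records."""
    totals = defaultdict(float)
    for r in records:
        in_price, out_price = PRICES_PER_1K[r.model]
        totals[r.team] += (r.input_tokens / 1000) * in_price
        totals[r.team] += (r.output_tokens / 1000) * out_price
    return dict(totals)


records = [
    UsageRecord("search", "model-a", 2000, 1000),
    UsageRecord("search", "model-b", 10000, 0),
    UsageRecord("support", "model-b", 5000, 5000),
]
print(cost_by_team(records))
```

The arithmetic is the easy part. The ongoing cost is keeping the price table current, making sure every request carries a team tag, and reconciling the totals against provider invoices.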

A Decision Checklist

If you answer "yes" to several of the following, the abstraction strategy you choose matters more than the specific tool:

- Do multiple teams depend on the abstraction layer?

- Are reliability guarantees becoming explicit?

- Do policy or prompt changes require coordination?

- Is cost attribution needed beyond total spend?

- Would failures impact critical user flows?

If not, lighter-weight options may remain sufficient.

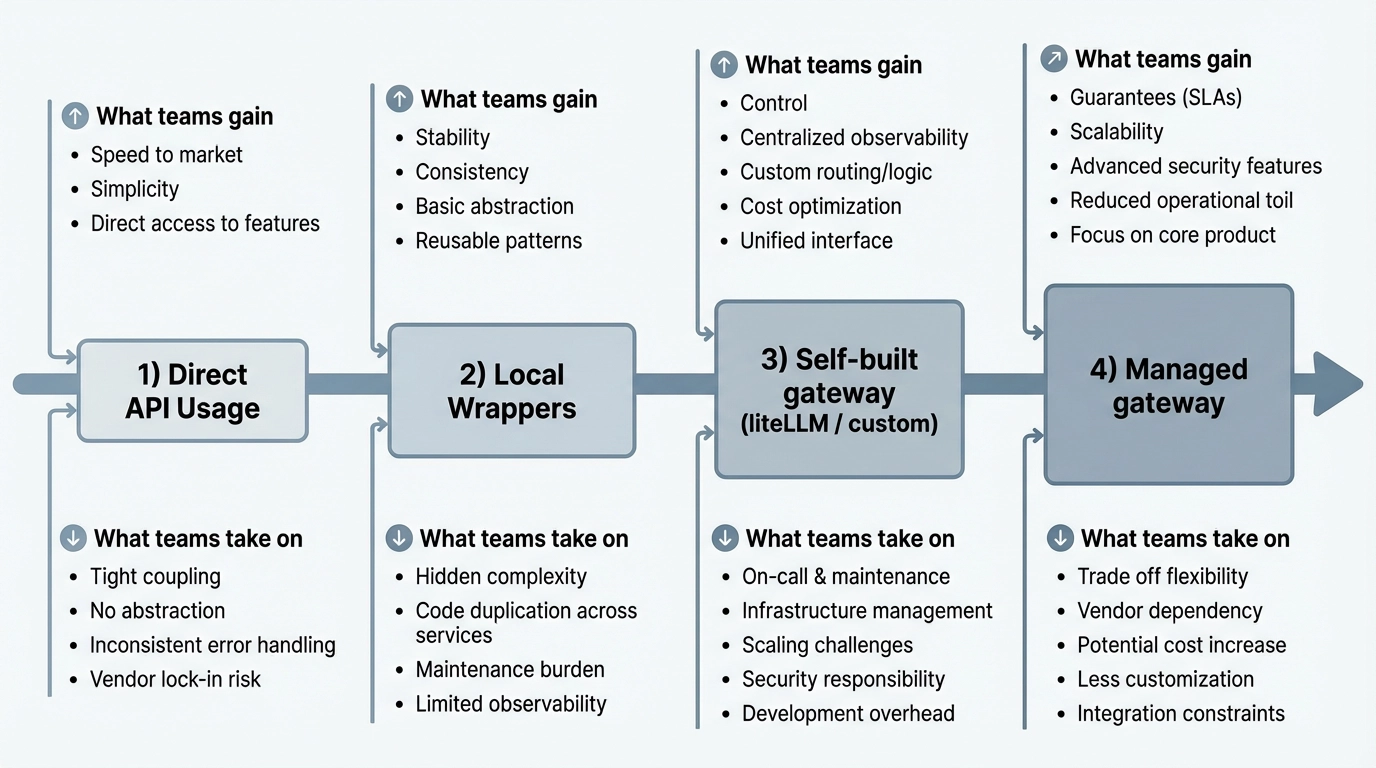

How This Fits the Broader Architecture Path

In practice, teams often move through these stages:

- Direct APIs

- Local wrappers

- Centralized abstraction

- Explicit gateway strategy

The transition is not about scale alone. It is about coordination cost and risk tolerance.

Different teams stop at different points—and that is expected.

In Practice: What “Model Access Through an Abstraction” Looks Like

In practice, the abstraction choice determines how application code references models:

- either by binding directly to provider-specific identifiers, or

- through a stable internal naming layer (e.g. general-purpose LLM, long-context LLM), which is mapped to concrete models behind the scenes.
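
The second option can be sketched as a small lookup table owned by the abstraction layer. The alias names, provider names, and model identifiers below are placeholders rather than real products, and `resolve_model` is a hypothetical helper:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTarget:
    provider: str
    model_id: str


# Hypothetical mapping from stable internal names to concrete models.
MODEL_ALIASES = {
    "general-purpose": ModelTarget("provider-a", "model-a-standard"),
    "long-context": ModelTarget("provider-b", "model-b-long"),
}


def resolve_model(alias: str) -> ModelTarget:
    """Translate an internal alias into the concrete model currently behind it."""
    try:
        return MODEL_ALIASES[alias]
    except KeyError:
        known = ", ".join(sorted(MODEL_ALIASES))
        raise ValueError(f"unknown model alias {alias!r}; known aliases: {known}") from None


# Application code references only the alias, never the provider's identifier.
target = resolve_model("long-context")
print(target.provider, target.model_id)
```

With this shape, swapping the model behind `long-context` is a one-line change in the mapping, and no application code has to be touched.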

For a concrete example of what a “model reference” page looks like in this pattern:

- Model reference example: GPT-5.2 model page

Closing Thought

There is no universally "correct" abstraction strategy.

Each option represents a trade-off between control, speed, and responsibility.

The real mistake is choosing based on surface features instead of understanding:

- What complexity you are taking on

- What guarantees you expect

- Who will own the consequences

👉 Next Step

This guide breaks down when direct APIs still work, and when a gateway starts paying for itself.

FAQ

Is OpenRouter a gateway or just a router?

It functions as a hosted routing layer, optimized for access and breadth rather than deep organizational governance.

Is liteLLM enough for production?

It can be, depending on how much infrastructure, observability, and operational discipline a team is willing to provide.

Why not always build in-house?

Building offers control, but also creates long-term maintenance and staffing costs that many teams underestimate.

When does a managed gateway make sense?

When abstraction has become infrastructure, and reliability and governance outweigh the desire for full control.