Gateway vs Direct APIs: When Each Makes Sense

What an LLM Gateway Actually Does

An LLM gateway is an intermediary layer between your application and one or more model providers. It centralizes decisions that each service would otherwise make on its own, repeatedly.

In practice, gateways often handle:

- Behavioral normalization across providers

- Routing, fallback, and model selection logic

- Prompt and policy enforcement

- Usage tracking and cost attribution

- Observability, auditing, and guardrails
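Behavioral normalization is the least visible of these jobs, so a concrete shape may help. The sketch below assumes two hypothetical providers (`provider_a`, `provider_b`) whose raw payloads are loosely modeled on common completion APIs but are not any vendor's exact schema. The names `Completion`, `NORMALIZERS`, and `normalize` are illustrative, not part of any library.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Completion:
    """Provider-neutral response shape that application code depends on."""
    text: str
    input_tokens: int
    output_tokens: int
    provider: str


def _from_provider_a(raw: Dict[str, Any]) -> Completion:
    # Hypothetical shape: text under choices[0], usage as prompt/completion tokens.
    return Completion(
        text=raw["choices"][0]["text"],
        input_tokens=raw["usage"]["prompt_tokens"],
        output_tokens=raw["usage"]["completion_tokens"],
        provider="provider_a",
    )


def _from_provider_b(raw: Dict[str, Any]) -> Completion:
    # Hypothetical shape: text split across content blocks, usage as input/output tokens.
    return Completion(
        text="".join(block["text"] for block in raw["content"]),
        input_tokens=raw["usage"]["input_tokens"],
        output_tokens=raw["usage"]["output_tokens"],
        provider="provider_b",
    )


# One normalizer per provider, registered in a single place.
NORMALIZERS: Dict[str, Callable[[Dict[str, Any]], Completion]] = {
    "provider_a": _from_provider_a,
    "provider_b": _from_provider_b,
}


def normalize(provider: str, raw: Dict[str, Any]) -> Completion:
    """Single entry point, so callers never branch on provider-specific shapes."""
    normalizer = NORMALIZERS.get(provider)
    if normalizer is None:
        raise ValueError(f"unknown provider: {provider}")
    return normalizer(raw)
```

With this in place, adding a provider means adding one normalizer rather than touching every call site that reads a response.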

The key difference is not capability, but intent.

Gateways are designed as infrastructure. Direct APIs are consumed as dependencies.

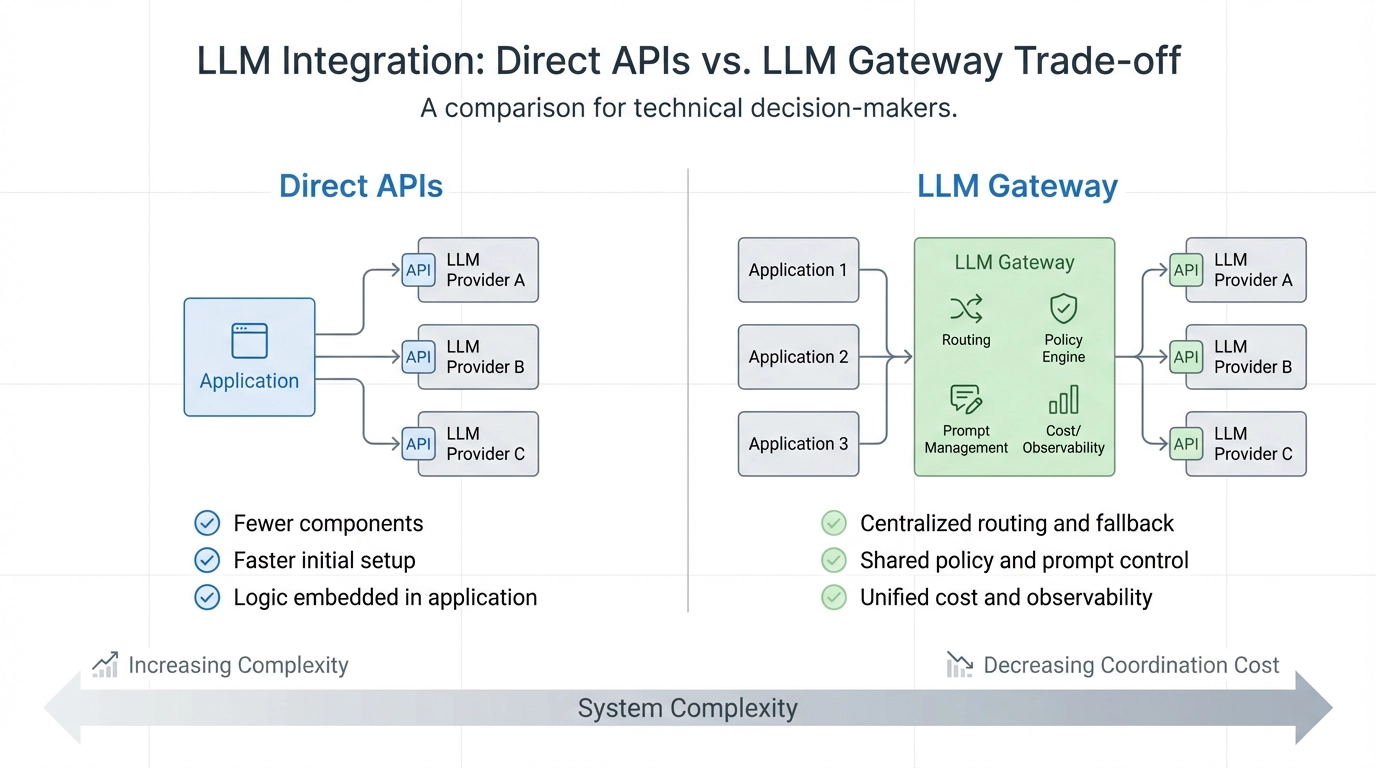

The Core Trade-off: Simplicity vs. Centralized Control

The decision between direct APIs and a gateway is not binary. It is a trade-off between local simplicity and system-level control.

Direct APIs favor:

- Minimal abstraction

- Lower initial complexity

- Faster iteration in small teams

Gateways favor:

- Consistency across services

- Explicit reliability guarantees

- Centralized cost and policy control
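Centralized cost control usually starts with attributing each call's token usage to an owner. The sketch below is a minimal in-memory ledger, assuming every request carries a team, a feature, and a model name. The model names and prices in `PRICES_PER_MTOK` are hypothetical placeholders, and `UsageLedger` is an illustrative name, not an existing tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, Dict, List, Tuple

# Hypothetical prices in USD per million tokens: (input, output).
# Real prices differ by provider and model and change over time.
PRICES_PER_MTOK: Dict[str, Tuple[float, float]] = {
    "model-small": (0.50, 1.50),
    "model-large": (5.00, 15.00),
}


@dataclass(frozen=True)
class UsageKey:
    team: str
    feature: str
    model: str


class UsageLedger:
    """Accumulates token usage per (team, feature, model) and estimates cost."""

    def __init__(self) -> None:
        # Each entry is [input_tokens, output_tokens].
        self._tokens: DefaultDict[UsageKey, List[int]] = defaultdict(lambda: [0, 0])

    def record(self, team: str, feature: str, model: str,
               input_tokens: int, output_tokens: int) -> None:
        if model not in PRICES_PER_MTOK:
            raise ValueError(f"no price configured for model: {model}")
        entry = self._tokens[UsageKey(team, feature, model)]
        entry[0] += input_tokens
        entry[1] += output_tokens

    def cost_by(self, dimension: str) -> Dict[str, float]:
        """Estimated spend grouped by 'team', 'feature', or 'model'."""
        if dimension not in ("team", "feature", "model"):
            raise ValueError(f"unsupported dimension: {dimension}")
        totals: DefaultDict[str, float] = defaultdict(float)
        for key, (inp, out) in self._tokens.items():
            in_price, out_price = PRICES_PER_MTOK[key.model]
            totals[getattr(key, dimension)] += (inp * in_price + out * out_price) / 1_000_000
        return dict(totals)
```

The same records answer "which team spent this?" and "which feature spent this?", which is exactly what direct, per-service integrations tend to lose.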

Neither approach is inherently better. Each becomes costly under the wrong conditions.

When Direct APIs Are Usually the Right Choice

Direct integration often makes sense when:

- You rely on a single provider or model family

- One team owns the entire LLM surface

- Failures are non-critical or easily tolerated

- Cost tracking does not need to be granular

- Prompt changes are localized and infrequent

In these scenarios, adding a gateway can introduce more overhead than value.

Abstraction too early can slow teams down.

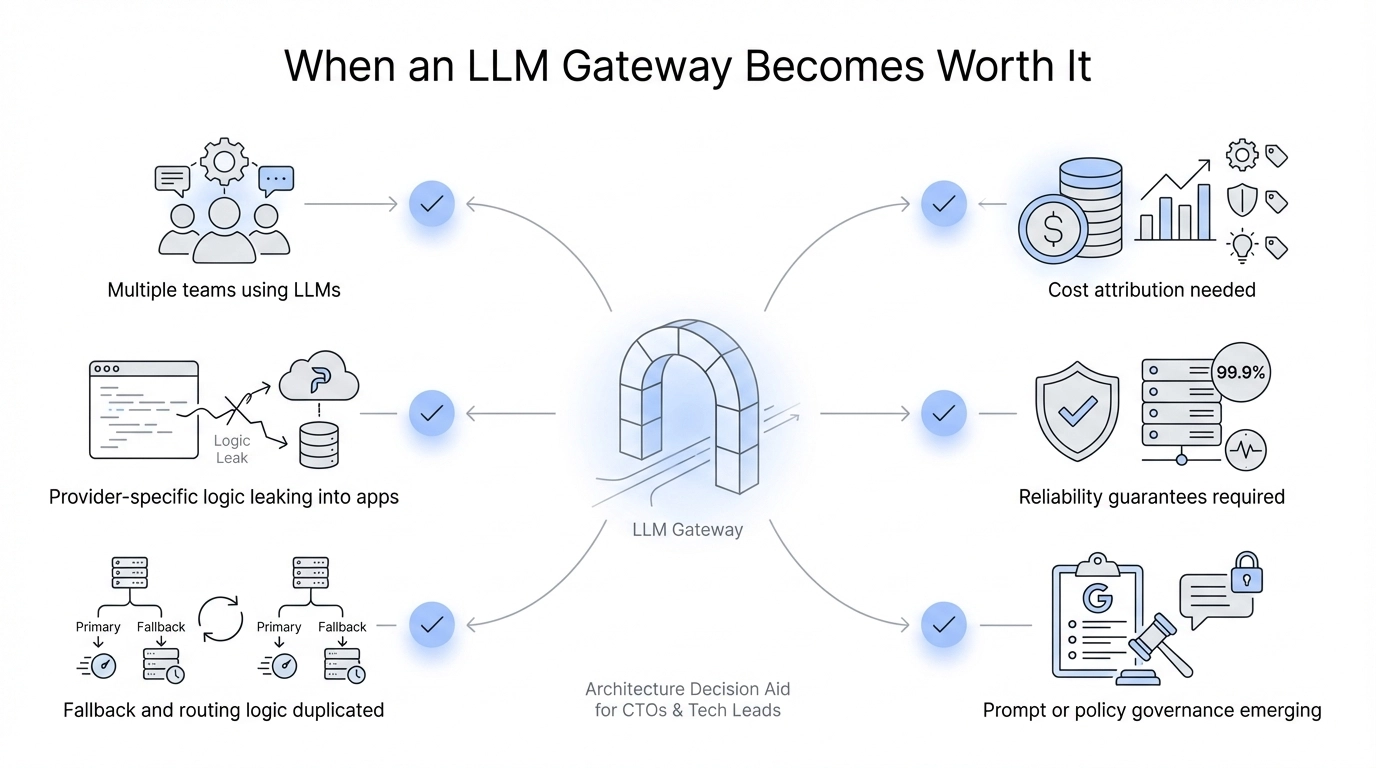

When a Gateway Starts Paying for Itself

Gateways tend to justify their cost when complexity crosses certain thresholds.

Common signals include:

- Multiple teams or services depend on LLMs

- Provider-specific behavior leaks into product code

- Routing or fallback logic is duplicated

- Prompt governance or policy enforcement becomes necessary

- Cost or usage needs to be attributed beyond "total spend"

- Reliability guarantees start to matter

At this point, the gateway is no longer "extra infrastructure." It becomes a way to reduce coordination and cognitive load.
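Duplicated retry and fallback logic is often the first signal. A minimal sketch of centralizing it follows, assuming each provider is exposed as a plain callable and that retryable failures are surfaced as a hypothetical `TransientError`. The function name, parameters, and backoff constants are illustrative choices, not a standard.

```python
import time
from typing import Callable, List, Sequence, Tuple


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts or rate limits."""


# A provider is a (name, call) pair; `call` takes a prompt and returns text.
Provider = Tuple[str, Callable[[str], str]]


def complete_with_fallback(
    prompt: str,
    providers: Sequence[Provider],
    retries_per_provider: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[str, str]:
    """Try providers in order; retry transient errors with exponential backoff.

    Returns (provider_name, text). Any other exception skips to the next provider.
    """
    failures: List[str] = []
    for name, call in providers:
        for attempt in range(retries_per_provider + 1):
            try:
                return name, call(prompt)
            except TransientError as exc:
                failures.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < retries_per_provider:
                    sleep(base_delay * 2 ** attempt)
            except Exception as exc:  # non-retryable: fall back immediately
                failures.append(f"{name}: {exc}")
                break
    raise RuntimeError("all providers failed: " + "; ".join(failures))
```

The `sleep` parameter is injectable so the backoff can be tested without waiting. When this function lives in one place, every service inherits the same fallback order and retry budget instead of reimplementing them.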

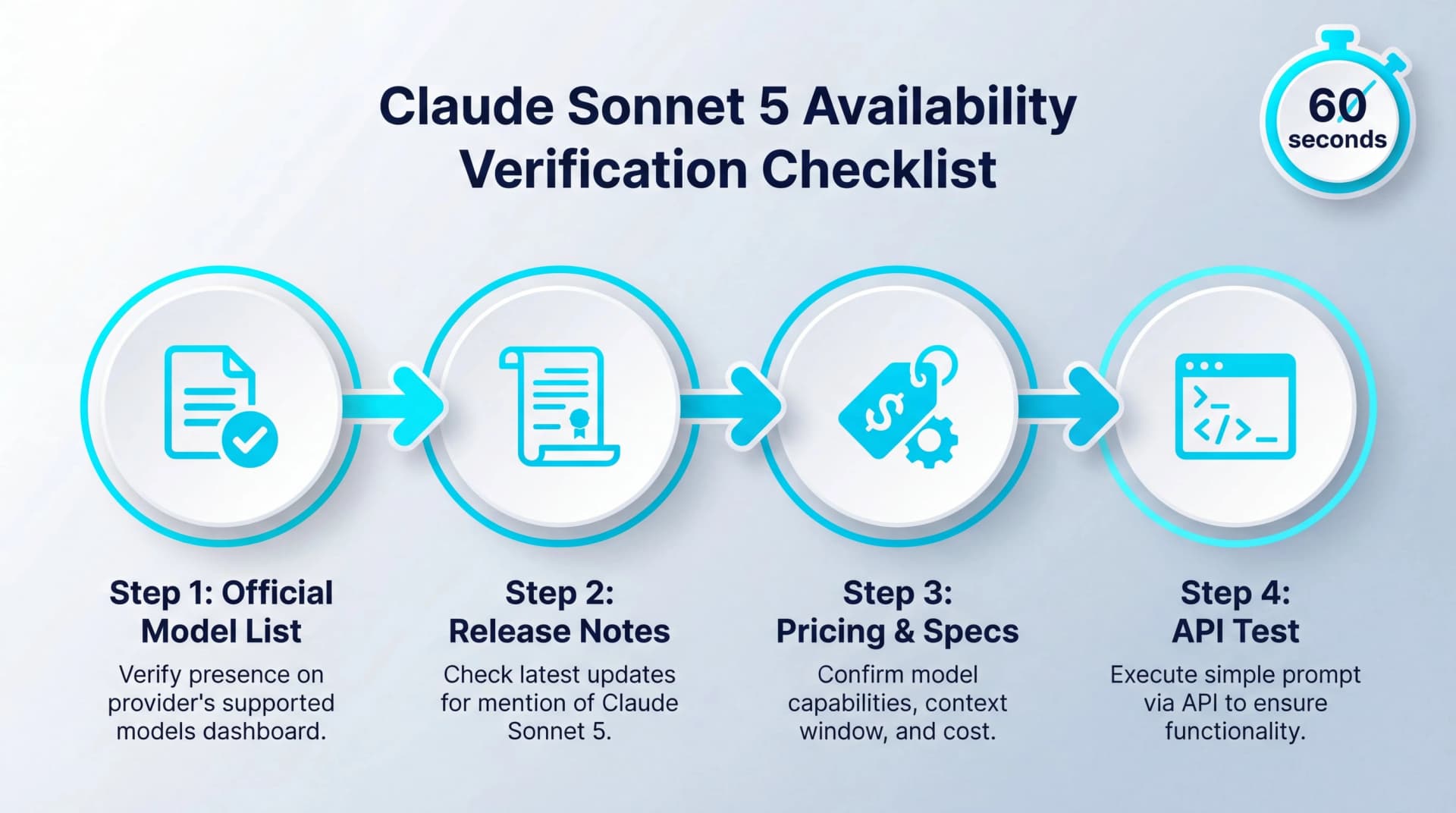

A Decision Checklist

If you answer "yes" to three or more, a gateway is likely worth evaluating:

- Do multiple services implement similar retry or fallback logic?

- Are provider-specific behaviors leaking into application code?

- Do prompt or policy changes require coordinated deployments?

- Is cost attribution needed at the feature or team level?

- Would a provider outage affect multiple critical paths?

If not, direct APIs may still be the simpler—and better—option.
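The checklist rule is simple enough to encode directly, which can be handy in an architecture review template. In this sketch the short keys in `CHECKLIST` and the function name are hypothetical; the threshold of three comes from the rule above.

```python
from typing import Dict

# Hypothetical short keys for the five checklist questions.
CHECKLIST: Dict[str, str] = {
    "duplicated_fallback": "Do multiple services implement similar retry or fallback logic?",
    "provider_leakage": "Are provider-specific behaviors leaking into application code?",
    "coordinated_deploys": "Do prompt or policy changes require coordinated deployments?",
    "granular_costs": "Is cost attribution needed at the feature or team level?",
    "shared_outage_risk": "Would a provider outage affect multiple critical paths?",
}


def gateway_worth_evaluating(answers: Dict[str, bool], threshold: int = 3) -> bool:
    """Return True when at least `threshold` checklist questions are answered "yes".

    Unanswered questions count as "no"; unknown keys are rejected to catch typos.
    """
    unknown = set(answers) - set(CHECKLIST)
    if unknown:
        raise ValueError(f"unknown checklist keys: {sorted(unknown)}")
    yes_count = sum(1 for key in CHECKLIST if answers.get(key, False))
    return yes_count >= threshold
```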

Direct APIs vs Gateway: A Practical Comparison

| Dimension | Direct APIs | Gateway |
|---|---|---|
| Setup cost | Low | Higher |
| Early iteration speed | High | Moderate |
| Multi-provider support | Manual | Centralized |
| Reliability guarantees | Ad hoc | Explicit |
| Cost visibility | Fragmented | Unified |
| Governance & policy | Scattered | Centralized |
| Organizational scaling | Difficult | Easier |

This is not a maturity ladder. It is a context-dependent choice.

👉 Next Step

Once a gateway is justified, the harder decision becomes which abstraction strategy fits your constraints: hosted routing, a self-hosted proxy, an in-house build, or a managed service.

Common Anti-Patterns

Teams often struggle when they:

- Introduce a gateway without clear ownership

- Treat a gateway as a "thin proxy" but expect infra-level guarantees

- Route traffic dynamically without evaluation baselines

- Centralize control without adding observability

A gateway amplifies both good and bad design decisions.
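Two of these anti-patterns, routing without evaluation baselines and centralizing without observability, can be addressed together. The sketch below is a weighted (canary-style) router that admits a candidate model only if its evaluation score meets the current baseline, and counts every routing decision. It assumes eval scores come from a fixed evaluation set where higher is better; `BaselineGatedRouter` and the model names are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, Optional


class BaselineGatedRouter:
    """Weighted router that only admits candidate models clearing an eval baseline.

    Eval scores are assumed to come from a fixed evaluation set (higher is better).
    """

    def __init__(self, baseline_model: str, baseline_score: float,
                 rng: Optional[random.Random] = None) -> None:
        self.baseline_model = baseline_model
        self.baseline_score = baseline_score
        self.shares: Dict[str, float] = {}  # candidate model -> share of traffic
        self.routed: Counter = Counter()    # per-model request counts for observability
        self._rng = rng or random.Random()

    def propose(self, model: str, eval_score: float, share: float) -> bool:
        """Admit `model` at `share` of traffic only if it meets the baseline score."""
        if not 0.0 < share <= 1.0:
            raise ValueError("share must be in (0, 1]")
        if eval_score < self.baseline_score:
            return False  # no evidence it is at least as good: keep it out of traffic
        other = sum(s for m, s in self.shares.items() if m != model)
        if other + share > 1.0:
            raise ValueError("candidate shares would exceed 100% of traffic")
        self.shares[model] = share
        return True

    def route(self) -> str:
        """Pick a model for one request; remaining traffic goes to the baseline."""
        r = self._rng.random()
        cumulative = 0.0
        for model, share in self.shares.items():
            cumulative += share
            if r < cumulative:
                self.routed[model] += 1
                return model
        self.routed[self.baseline_model] += 1
        return self.baseline_model
```

The `routed` counter is deliberately minimal; in practice it would feed whatever metrics system the gateway already reports to, so a routing change is visible before it becomes an incident.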

How This Connects to Wrappers

Most gateways do not appear fully formed.

They evolve from wrappers that:

- Accumulate retries and routing logic

- Centralize prompts and policies

- Become dependencies for multiple teams

The difference is intentional design.

Wrappers emerge reactively. Gateways are built deliberately.
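The "centralize prompts and policies" step is often where a wrapper starts turning into a gateway. A minimal in-memory sketch follows, assuming prompts are referenced by name and that policy is a per-team model allowlist; `PromptPolicyRegistry` and its methods are hypothetical, and a shared deployment would back them with persistent storage rather than a Python dict.

```python
from dataclasses import dataclass
from string import Template
from typing import Dict, FrozenSet, Iterable, Tuple


@dataclass(frozen=True)
class PromptVersion:
    template: Template
    version: int


class PromptPolicyRegistry:
    """Central store for versioned prompts and per-team model allowlists."""

    def __init__(self) -> None:
        self._prompts: Dict[str, PromptVersion] = {}
        self._allowed: Dict[str, FrozenSet[str]] = {}

    def publish_prompt(self, name: str, template: str) -> int:
        """Store a new prompt version; in a shared deployment, callers pick it up without redeploying."""
        current = self._prompts.get(name)
        version = 1 if current is None else current.version + 1
        self._prompts[name] = PromptVersion(Template(template), version)
        return version

    def allow_models(self, team: str, models: Iterable[str]) -> None:
        self._allowed[team] = frozenset(models)

    def render(self, team: str, model: str, prompt_name: str, **variables: str) -> Tuple[str, int]:
        """Enforce the model allowlist, then fill the latest prompt version."""
        if model not in self._allowed.get(team, frozenset()):
            raise PermissionError(f"team {team!r} is not allowed to use {model!r}")
        prompt = self._prompts[prompt_name]
        return prompt.template.substitute(**variables), prompt.version
```

Returning the prompt version alongside the rendered text lets each logged request be traced back to the exact prompt that produced it.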

Closing Thought

Direct APIs are not a shortcut. Gateways are not over-engineering.

They are tools optimized for different stages of system complexity.

The real mistake is not choosing the wrong one—it is failing to recognize when the trade-offs have shifted.

Understanding that inflection point is what turns LLM integration from ad hoc code into sustainable infrastructure.

FAQ

Do all teams need an LLM gateway?

No. Many teams operate successfully with direct APIs, especially when scope and risk are limited.

Is a gateway always more reliable than direct APIs?

Not by default. Reliability depends on how the gateway is designed and operated.

Can a wrapper replace a gateway?

Wrappers can handle many of the same concerns initially, but often lack the governance and observability expected of infrastructure.

When is it too early to add a gateway?

When it adds coordination cost without reducing operational risk or complexity.