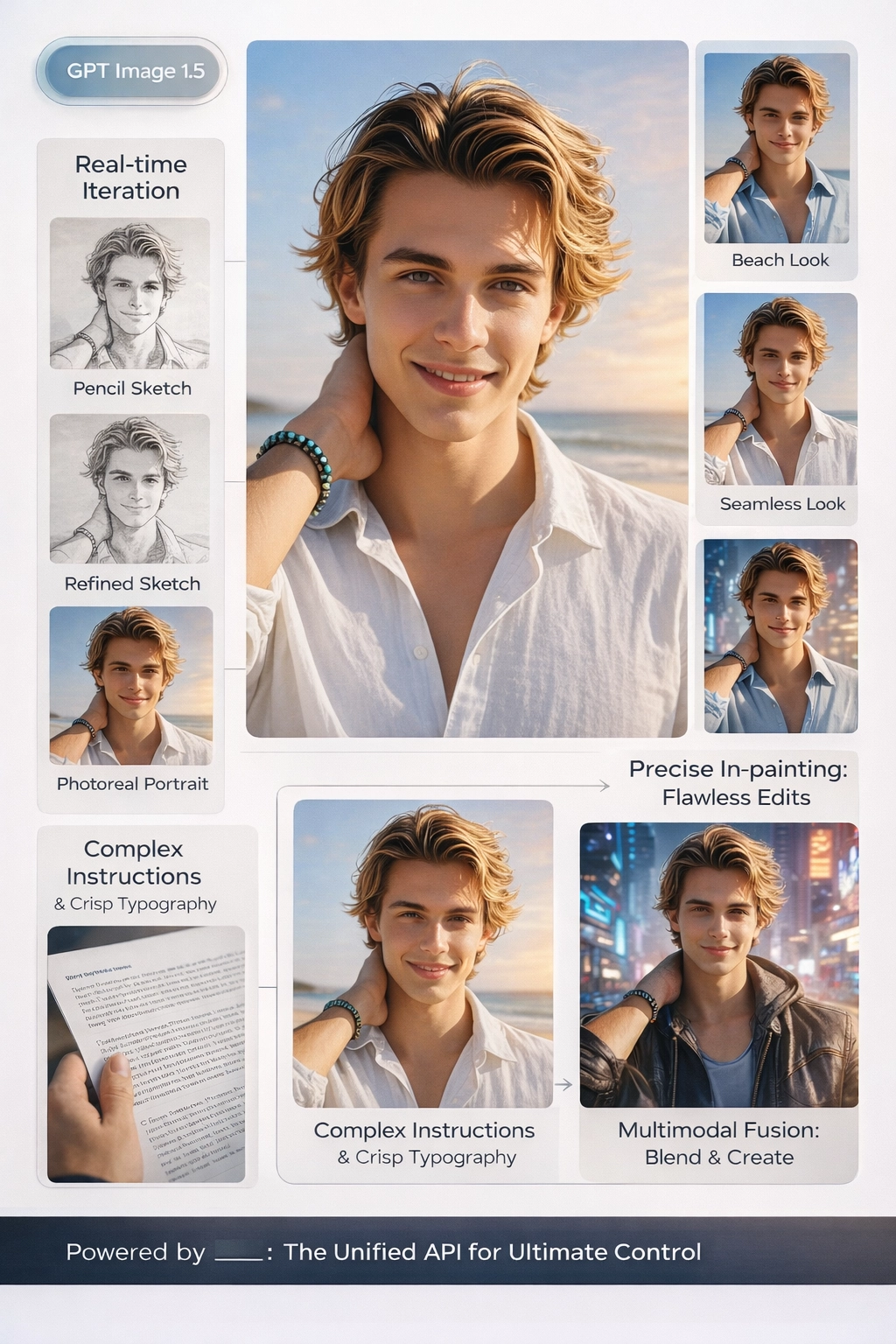

gpt-image-1.5). The release highlights stronger instruction-following, more precise editing, improved dense text rendering, and image generation speeds up to 4× faster compared with prior iterations.

Executive Summary: What GPT Image 1.5 Changes in Production

GPT Image 1.5 is positioned as OpenAI's most capable general-purpose text-to-image model at launch, with emphasis on:

- Instruction following: more reliable changes "down to small details."

- Editing & preservation: better at applying edits while keeping key elements consistent (including facial likeness and branded visuals across edits).

- Text rendering: improved ability to render dense text within images.

- Speed: generation speeds up to 4× faster (reported by OpenAI).

Competitive Landscape: GPT Image 1.5 vs Nano Banana Pro vs FLUX

| Category | GPT Image 1.5 (OpenAI) | Nano Banana Pro (Google DeepMind) | FLUX Family (Black Forest Labs) |
|---|---|---|---|
| Positioning | General-purpose image generation + strong editing & instruction adherence | Built on Gemini 3; focuses on "studio-quality precision/control" and clear text | Text-to-image + editing variants (e.g., Kontext / Fill); options for API usage and self-hosting |
| Text in images | Improved dense text rendering | "Generate clear text" for posters/diagrams | Varies by model and workflow; strong editing-focused lineup |
| Editing & preservation | Emphasis on precise edits preserving important details across edits | Emphasis on precision/control for edits | Strong editing catalog (Kontext / Fill etc.) |

Production Performance: Latency Patterns and Reliability

Common latency drivers across image models:

- Resolution & aspect ratio (larger outputs take longer)

- Prompt complexity and iterative edits

- Traffic spikes / queueing

- Retry loops after safety rejections or transient failures

Mitigations to build in:

- Use timeouts + idempotency keys (or your own request IDs)

- Add async job queues for long-running generations

- Implement graceful fallbacks (lower quality, smaller size, or alternative model)
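
The timeout, request-ID, and fallback ideas above can be combined into a small "fallback ladder". The following is a minimal sketch, not a definitive implementation: `Attempt`, `generate_with_fallback`, and the `call` function signature are hypothetical names introduced for illustration, and `call` stands in for whatever client you use to reach the provider.

```python
import uuid
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Attempt:
    """One rung of the ladder: which model, quality, and size to try."""
    model: str
    quality: str
    size: str


# Hypothetical client signature (an assumption, not a real SDK call):
# (prompt, attempt, idempotency_key, timeout_s) -> image bytes
GenerateFn = Callable[[str, Attempt, str, float], bytes]


def generate_with_fallback(
    prompt: str,
    ladder: Sequence[Attempt],
    call: GenerateFn,
    timeout_s: float = 60.0,
) -> Tuple[Attempt, bytes]:
    """Try each rung in order and return the first one that succeeds."""
    # One ID per logical request, for correlating logs across rungs.
    request_id = uuid.uuid4().hex
    last_error: Optional[Exception] = None
    for rung, attempt in enumerate(ladder):
        # Each rung has different parameters, so it gets its own key.
        idempotency_key = f"{request_id}-{rung}"
        try:
            return attempt, call(prompt, attempt, idempotency_key, timeout_s)
        except (TimeoutError, ConnectionError) as exc:
            last_error = exc  # treated as transient: move to the next rung
    raise RuntimeError("all fallback attempts failed") from last_error
```

A typical ladder might start at high quality and a large size, then step down to medium quality at 1024x1024 before switching to an alternative model.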

Safety Filters: Plan for Rejections as a First-Class Outcome

OpenAI's image APIs enforce safety policies; prompts or edits may be rejected. In production you should treat "rejected" as a normal outcome:

- Show actionable UI feedback to users

- Log rejection categories (when available)

- Provide safe re-prompt suggestions

- Avoid retry storms (rate-limit retries)
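
One way to keep rejections from turning into retry storms is to decide the next action from the outcome type and a per-user retry budget. This is a sketch under stated assumptions: the `Outcome` categories, the `RetryBudget` class, and the action names are hypothetical, and how you map a provider's error response onto `Outcome` depends on the API you call.

```python
import time
from collections import defaultdict, deque
from enum import Enum


class Outcome(Enum):
    OK = "ok"
    REJECTED = "rejected"    # safety refusal: never retried automatically
    TRANSIENT = "transient"  # timeout or server error: retried within budget


class RetryBudget:
    """Allow at most `limit` retries per user within a sliding window."""

    def __init__(self, limit=3, window_s=60.0, clock=time.monotonic):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self._history = defaultdict(deque)  # user_id -> retry timestamps

    def allow(self, user_id: str) -> bool:
        now = self.clock()
        history = self._history[user_id]
        # Drop retries that have aged out of the window.
        while history and now - history[0] >= self.window_s:
            history.popleft()
        if len(history) >= self.limit:
            return False
        history.append(now)
        return True


def next_action(outcome: Outcome, user_id: str, budget: RetryBudget) -> str:
    """Map an outcome to what the application should do next."""
    if outcome is Outcome.OK:
        return "deliver"
    if outcome is Outcome.REJECTED:
        # Resending the same prompt will be refused again; ask the user.
        return "show_feedback"
    return "retry" if budget.allow(user_id) else "give_up"
```

Rejections go straight to user feedback, so only transient failures ever consume the retry budget.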

Pricing: Official GPT Image 1.5 Costs (Per Image + Tokens)

OpenAI publishes both:

- Per-image prices by quality and size

- Image token prices (for image inputs/outputs in token accounting)

Per-image prices (official)

| Quality | 1024×1024 | 1024×1536 | 1536×1024 |
|---|---|---|---|
| Low | $0.009 | $0.013 | $0.013 |
| Medium | $0.034 | $0.05 | $0.05 |
| High | $0.133 | $0.2 | $0.2 |
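
For budgeting, the per-image table can be turned into a lookup. The sketch below copies the prices from the table above; the function name `estimate_cost` is an illustrative choice, and `Decimal` is used so that currency arithmetic stays exact.

```python
from decimal import Decimal

# Per-image prices in USD, copied from the table above.
PRICE_USD = {
    ("low", "1024x1024"): Decimal("0.009"),
    ("low", "1024x1536"): Decimal("0.013"),
    ("low", "1536x1024"): Decimal("0.013"),
    ("medium", "1024x1024"): Decimal("0.034"),
    ("medium", "1024x1536"): Decimal("0.05"),
    ("medium", "1536x1024"): Decimal("0.05"),
    ("high", "1024x1024"): Decimal("0.133"),
    ("high", "1024x1536"): Decimal("0.2"),
    ("high", "1536x1024"): Decimal("0.2"),
}


def estimate_cost(quality: str, size: str, images: int) -> Decimal:
    """Return the estimated spend in USD for `images` generations."""
    if images < 0:
        raise ValueError("images must be non-negative")
    try:
        unit_price = PRICE_USD[(quality, size)]
    except KeyError:
        raise ValueError(f"no listed price for {quality!r} at {size!r}") from None
    return unit_price * images
```

With these figures, 1,000 high-quality 1024×1024 images come to $133, against $9 for the same volume at low quality.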

Image token prices (official)

- gpt-image-1.5: image tokens, Input $8 / Output $32 per 1M tokens

- gpt-image-1: image tokens, Input $10 / Output $40 per 1M tokens
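
Token-based accounting follows the usual "tokens × rate / 1M" arithmetic. The sketch below uses the rates listed above; the token counts in the usage note are made-up illustrative values, since real counts come from the usage data your provider reports.

```python
from decimal import Decimal

# USD per 1M image tokens, taken from the rates listed above.
IMAGE_TOKEN_RATES = {
    "gpt-image-1.5": {"input": Decimal("8"), "output": Decimal("32")},
    "gpt-image-1": {"input": Decimal("10"), "output": Decimal("40")},
}

ONE_MILLION = Decimal(1_000_000)


def image_token_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Return the USD cost of the given image token counts for `model`."""
    rates = IMAGE_TOKEN_RATES[model]
    input_cost = Decimal(input_tokens) * rates["input"] / ONE_MILLION
    output_cost = Decimal(output_tokens) * rates["output"] / ONE_MILLION
    return input_cost + output_cost
```

For a hypothetical request with 1,000 input image tokens and 4,000 output image tokens, this gives $0.136 on gpt-image-1.5 and $0.17 on gpt-image-1.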

Developer Experience: What You Should Architect Around

Even when the model is strong, shipping a reliable product requires engineering for:

- Rate limits & backpressure (plan for 429s and queue requests)

- Schema drift across providers (different parameters, error codes, response formats)

- Observability (per-request cost, latency percentiles, failure reasons, fallback rates)
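
Two of these concerns fit in a few lines each: backing off after a 429, and summarizing latency as percentiles. This is a sketch with assumed defaults (1 s base delay, 30 s cap); the function names are illustrative, and `retry_after_s` is meant to carry the value of a `Retry-After` header when the server sends one.

```python
import random
import statistics
from typing import Callable, Dict, Optional, Sequence


def backoff_delay(
    attempt: int,
    retry_after_s: Optional[float] = None,
    base_s: float = 1.0,
    cap_s: float = 30.0,
    rng: Callable[[], float] = random.random,
) -> float:
    """Seconds to wait before retry number `attempt` (0-based)."""
    if retry_after_s is not None:
        # The server told us how long to wait; honor it.
        return max(0.0, float(retry_after_s))
    # Exponential growth with "full jitter": pick a random delay in
    # [0, ceiling) so that many clients do not retry in lockstep.
    ceiling = min(cap_s, base_s * (2 ** attempt))
    return rng() * ceiling


def latency_percentiles(samples_s: Sequence[float]) -> Dict[str, float]:
    """Summarize request latencies (needs at least two samples)."""
    cuts = statistics.quantiles(samples_s, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}
```

Tracking p95 and p99 alongside the median matters here because queueing and retries tend to show up in the tail long before they move the average.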

The EvoLink Angle: Unified API Patterns

A unified gateway approach can reduce operational burden by:

- Standardizing request/response formats across vendors

- Adding routing rules (e.g., choose GPT Image 1.5 for text-heavy posters; choose another model for photoreal scenes when acceptable)

- Implementing fallback strategies when a provider rejects or errors

- Providing centralized usage analytics for cost and performance tracking
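
The routing and fallback rules above can be expressed as a function that returns an ordered list of candidate models. This is only a sketch: the `Job` fields and the two alternative model names are placeholders invented for illustration, not real model identifiers or EvoLink configuration.

```python
from dataclasses import dataclass
from typing import List

PRIMARY = "gpt-image-1.5"
# Placeholder names (assumptions): substitute the models you actually use.
PHOTOREAL_ALT = "photoreal-model-placeholder"
GENERAL_ALT = "general-model-placeholder"


@dataclass(frozen=True)
class Job:
    prompt: str
    text_heavy: bool = False  # e.g. posters or diagrams with dense text
    photoreal: bool = False   # e.g. product or lifestyle photography


def candidate_models(job: Job) -> List[str]:
    """Return models to try, in order; later entries are fallbacks."""
    if job.text_heavy:
        # Text rendering takes priority over the photoreal preference.
        return [PRIMARY, GENERAL_ALT]
    if job.photoreal:
        return [PHOTOREAL_ALT, PRIMARY]
    return [PRIMARY, GENERAL_ALT]
```

Keeping the rule in one function means routing changes are a code review rather than a hunt through call sites, and the returned order doubles as the fallback sequence when a provider rejects or errors.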

Quick Start: GPT Image 1.5 via EvoLink

EvoLink provides a unified endpoint for GPT Image 1.5 that supports text-to-image, image-to-image, and image editing modes with asynchronous processing.

    POST https://api.evolink.ai/v1/images/generations

| Parameter | Type | Required | Description |
|---|---|---|---|
| model | string | Yes | Use gpt-image-1.5-lite |
| prompt | string | Yes | Image description, max 2000 tokens |
| size | enum | No | 1:1, 3:4, 4:3, 1024x1024, 1024x1536, 1536x1024 |
| quality | enum | No | low, medium, high, auto (default) |
| image_urls | array | No | 1-16 reference images for editing, max 50MB each |
| n | integer | No | Number of images (currently supports 1) |
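
Validating a request against this table before sending it avoids spending a round trip on an avoidable error. The sketch below encodes only the constraints listed in the table; `build_payload` is a hypothetical helper, and it does not check the 2000-token prompt limit or the 50MB image limit, since both would need a tokenizer or a download.

```python
from typing import Any, Dict, Optional, Sequence

# Allowed values, copied from the parameter table above.
ALLOWED_SIZES = {"1:1", "3:4", "4:3", "1024x1024", "1024x1536", "1536x1024"}
ALLOWED_QUALITIES = {"low", "medium", "high", "auto"}
MAX_REFERENCE_IMAGES = 16


def build_payload(
    prompt: str,
    size: Optional[str] = None,
    quality: Optional[str] = None,
    image_urls: Optional[Sequence[str]] = None,
    model: str = "gpt-image-1.5-lite",
) -> Dict[str, Any]:
    """Build a JSON-serializable request body, raising ValueError early."""
    if not prompt.strip():
        raise ValueError("prompt is required")
    payload: Dict[str, Any] = {"model": model, "prompt": prompt}
    if size is not None:
        if size not in ALLOWED_SIZES:
            raise ValueError(f"unsupported size: {size!r}")
        payload["size"] = size
    if quality is not None:
        if quality not in ALLOWED_QUALITIES:
            raise ValueError(f"unsupported quality: {quality!r}")
        payload["quality"] = quality
    if image_urls is not None:
        if not 1 <= len(image_urls) <= MAX_REFERENCE_IMAGES:
            raise ValueError("image_urls must contain 1-16 entries")
        payload["image_urls"] = list(image_urls)
    return payload
```

Optional parameters are omitted from the payload when not supplied, so the server-side defaults (such as `auto` quality) still apply.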

Example: Text-to-Image

    curl --request POST \
      --url https://api.evolink.ai/v1/images/generations \
      --header 'Authorization: Bearer YOUR_API_KEY' \
      --header 'Content-Type: application/json' \
      --data '{
        "model": "gpt-image-1.5-lite",
        "prompt": "A professional product photo of a sleek smartwatch on a marble surface, soft studio lighting, 4K quality",
        "size": "1024x1024",
        "quality": "high"
      }'

Example: Image Editing

    curl --request POST \
      --url https://api.evolink.ai/v1/images/generations \
      --header 'Authorization: Bearer YOUR_API_KEY' \
      --header 'Content-Type: application/json' \
      --data '{
        "model": "gpt-image-1.5-lite",
        "prompt": "Change the background to a sunset beach scene, keep the product unchanged",
        "image_urls": ["https://your-cdn.example.com/product-photo.jpg"],
        "size": "1024x1024",
        "quality": "high"
      }'

Response format

The API returns an async task. Poll the task status using the returned ID:

    {
      "created": 1757156493,
      "id": "task-unified-1757156493-imcg5zqt",
      "model": "gpt-image-1.5-lite",
      "status": "pending",
      "progress": 0,
      "task_info": {
        "can_cancel": true,
        "estimated_time": 100
      },
      "usage": {
        "credits_reserved": 2.5
      }
    }

Note: Generated images expire after 24 hours. Download and store them promptly.
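
Because the response is an async task, the client needs a polling loop with a deadline. The sketch below is written under assumptions: the status-lookup endpoint is not shown here, so `fetch_task` is a hypothetical callable you supply that returns the task as a dict, and the terminal status names `completed` and `failed` are guesses (only `pending` appears in the sample response).

```python
import time
from typing import Any, Callable, Dict

# Assumed terminal states; confirm the real values in the API reference.
TERMINAL_STATUSES = {"completed", "failed"}


def wait_for_task(
    task_id: str,
    fetch_task: Callable[[str], Dict[str, Any]],
    poll_s: float = 2.0,
    timeout_s: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, Any]:
    """Poll until the task reaches a terminal status or the deadline passes."""
    deadline = clock() + timeout_s
    while True:
        task = fetch_task(task_id)
        if task.get("status") in TERMINAL_STATUSES:
            return task
        if clock() >= deadline:
            raise TimeoutError(f"task {task_id} still pending after {timeout_s}s")
        sleep(poll_s)
```

Once the task completes, download the result right away and write it to your own storage, since the generated image expires after 24 hours.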

Conclusion

gpt-image-1.5) is a major 2025 step for production image workflows, with OpenAI explicitly emphasizing better instruction following, more precise edits that preserve important details, improved text rendering, and up to 4× faster generation.

To ship reliably at scale, treat images as an infra problem: measure latency distributions, budget with official per-image pricing, handle safety rejections gracefully, and design routing/fallback patterns that protect user experience and unit economics.