Key Takeaway: Alibaba Cloud's Qwen team has released Qwen3-Max-Thinking, a trillion-parameter flagship model that goes head-to-head with GPT-5.2 and Claude Opus 4.5. evolink.ai will soon provide stable, cost-effective official API access.

Qwen3-Max-Thinking at a Glance

Technical Specs

- Total Parameters: Over 1 trillion (1T)

- Pre-training Data: 36T tokens

- Model Variants: Base, Instruct, and Thinking editions

- Global Standing: Among the world's largest AI models

- Access Options: Qwen App (desktop/web) + API calls

- Official Website: https://chat.qwen.ai/

Benchmark Performance Showdown

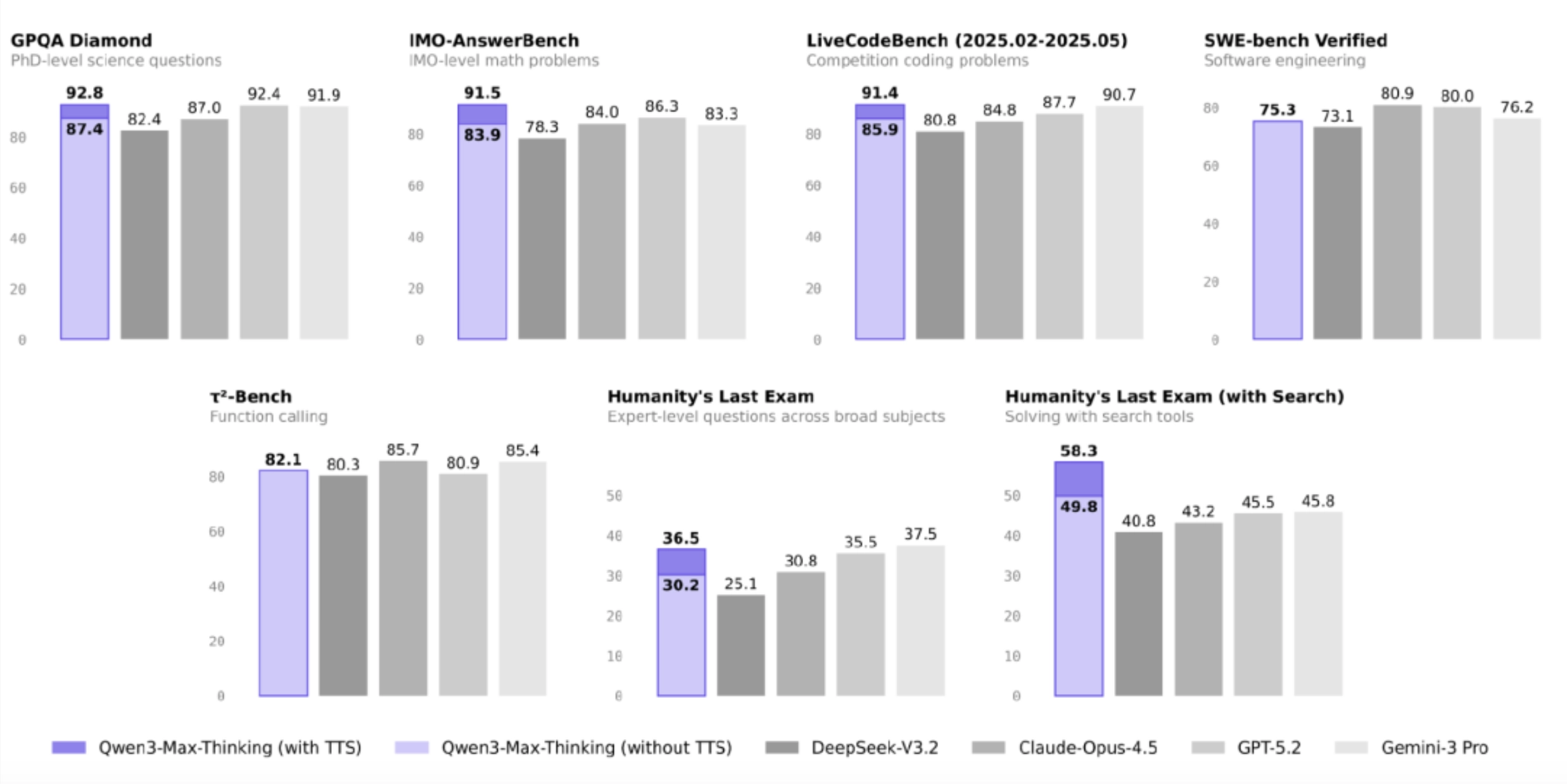

Qwen3-Max-Thinking matches or outperforms leading closed-source models (GPT-5.2-Thinking, Claude-Opus-4.5, Gemini-3 Pro) across major AI benchmarks:

| Benchmark | Qwen3-Max-Thinking | Compared To | Domain |
|---|---|---|---|
| GPQA Diamond | 92.8 | GPT-5.2 / Claude | Scientific Q&A |
| IMO-AnswerBench | 91.5 | Gemini-3 Pro | Mathematical Reasoning |
| LiveCodeBench v6 | 91.4 | Claude Opus 4.5 | Code Generation |
| HLE (with tools) | 58.3 | GPT-5.2 | Advanced Reasoning + Tool Use |

Coding Powerhouse

Code generation is where Qwen3-Max-Thinking stands out. Its 91.4 score on LiveCodeBench v6 puts it in the same league as Gemini and Claude, which matters for developers who rely on a model for day-to-day programming tasks.

Two Breakthrough Innovations

1. Adaptive Tool Calling

| Traditional Approach | Qwen3-Max-Thinking |
|---|---|
| Users manually select tools | Model autonomously decides and invokes |
| Single-function execution | Search + memory + code interpreter in sync |
| Prone to hallucinations | Real-time verification reduces errors |

- ✅ Smart Search: Automatically fetches up-to-date information

- ✅ Memory System: Personalized context understanding

- ✅ Code Interpreter: Executes code snippets on the fly

- ✅ Hallucination Mitigation: Multi-source validation for accuracy
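
The difference between "user picks the tool" and "model picks the tool" can be made concrete with a toy dispatcher. This is a minimal sketch, not Qwen's implementation: `choose_tools` is a hypothetical keyword-based stand-in for the model's own decision, and `search`, `memory`, and `run_code` are stubs invented for illustration.

```python
from typing import Callable, Dict, List, Tuple


def search(query: str) -> str:
    # Stub: a real tool would query a search backend.
    return f"[search results for: {query}]"


def memory(query: str) -> str:
    # Stub: a real tool would look up stored user context.
    return "[no stored preferences]"


def run_code(source: str) -> str:
    # Toy interpreter for arithmetic only; this is NOT a secure sandbox.
    return str(eval(source, {"__builtins__": {}}, {}))


# Hypothetical registry mapping tool names to callables.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": search,
    "memory": memory,
    "code_interpreter": run_code,
}


def choose_tools(prompt: str) -> List[Tuple[str, str]]:
    """Stand-in for the model's decision: returns (tool, argument) pairs.

    Keyword rules are used only so the example runs offline; an actual
    model would emit structured tool calls instead.
    """
    lowered = prompt.lower()
    calls: List[Tuple[str, str]] = []
    if "latest" in lowered or "today" in lowered:
        calls.append(("search", prompt))
    if "my " in lowered:
        calls.append(("memory", prompt))
    if "calculate" in lowered:
        expression = lowered.split("calculate", 1)[1].strip(" ?.")
        calls.append(("code_interpreter", expression))
    return calls


def answer(prompt: str) -> Dict[str, str]:
    """Run every selected tool and collect its observation by tool name."""
    return {name: TOOLS[name](arg) for name, arg in choose_tools(prompt)}


if __name__ == "__main__":
    print(answer("Please calculate 17 * 3"))
```

The caller never names a tool; the selector decides, and several tools can fire for one prompt. That is the behavior the right-hand column of the table describes.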

2. Test-Time Scaling

Score changes reported for this technique:

- GPQA: 90.3 → 92.8 (+2.5)

- HLE: 34.1 → 36.5 (+2.4)

- LiveCodeBench v6: 88.0 → 91.4 (+3.4)

- IMO-AnswerBench: 89.5 → 91.5 (+2.0)

- HLE (with tools): 55.8 → 58.3 (+2.5)

With the same token budget, this test-time scaling approach consistently outperforms standard parallel sampling, with gains of 2.0 to 3.4 points on the benchmarks above.
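
For readers unfamiliar with the baseline, here is a minimal sketch of standard parallel sampling with a majority vote. It illustrates only the baseline named above, not Qwen's own strategy, which this article does not describe. `sample_answer` is a hypothetical stand-in for one model call, and its 60% accuracy is an arbitrary assumption.

```python
import random
from collections import Counter
from typing import List


def sample_answer(rng: random.Random) -> str:
    """Hypothetical single model sample: correct ("42") about 60% of the time."""
    if rng.random() < 0.6:
        return "42"
    return rng.choice(["41", "43", "44"])


def parallel_sample(n: int, seed: int = 0) -> List[str]:
    """Draw n independent samples; each one spends its own share of the token budget."""
    rng = random.Random(seed)
    return [sample_answer(rng) for _ in range(n)]


def majority_vote(answers: List[str]) -> str:
    """Aggregate the samples by returning the most frequent answer."""
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    samples = parallel_sample(201)
    print(majority_vote(samples))
```

Because every sample is drawn independently, none of them benefits from what the others found. Any method claiming better results at the same token budget has to use those tokens more efficiently than this.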

Why Choose evolink.ai

Your Gateway to Qwen3-Max-Thinking

🏆 Enterprise-Grade Reliability

- 99.9% Uptime Guarantee: Rock-solid 24/7 availability

- Smart Load Balancing: No hiccups during peak hours

- Disaster Recovery: Multi-node deployment with automatic failover
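
To put the 99.9% figure in perspective, the short calculation below converts an uptime percentage into a downtime allowance. The 30-day month and 365-day year are assumptions; the article does not say over which window the guarantee is measured.

```python
def allowed_downtime_minutes(uptime_percent: float, period_hours: float) -> float:
    """Minutes of downtime permitted in a period at the given uptime percentage."""
    return (1 - uptime_percent / 100) * period_hours * 60


if __name__ == "__main__":
    per_month = allowed_downtime_minutes(99.9, 30 * 24)
    per_year_hours = allowed_downtime_minutes(99.9, 365 * 24) / 60
    print(f"{per_month:.1f} minutes per 30-day month")
    print(f"{per_year_hours:.2f} hours per 365-day year")
```

At 99.9%, that works out to roughly 43 minutes per month, or about 8.76 hours per year.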

💰 Unbeatable Pricing

- Pay-As-You-Go: Only pay for the tokens you actually use

- Crystal-Clear Billing: Real-time usage and cost tracking

- Volume Discounts: Tiered pricing for enterprise customers
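
Pay-as-you-go billing is simple to estimate once you know your token counts. The sketch below assumes separate per-million-token rates for input and output, which is a common scheme but is not confirmed here. The prices are placeholders, not evolink.ai's or Alibaba Cloud's actual rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical per-million-token rates in an arbitrary currency."""
    input_per_million: float
    output_per_million: float


def estimate_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of one workload: tokens times rate, scaled from per-million pricing."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    placeholder = Pricing(input_per_million=1.00, output_per_million=4.00)
    print(estimate_cost(placeholder, input_tokens=200_000, output_tokens=50_000))
```

Swap in the published rates once they are available. Reasoning models tend to produce long outputs, so the output rate usually dominates the bill.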

🤝 Official Partnership

- Direct Alibaba Cloud Connection: First-party API resources

- SLA-Backed Quality: Service level agreements you can count on

- Early Access: Be the first to get new features

- Dedicated Support: 24/7 technical assistance

🚀 Who It's For

| User Type | Recommended Plan | Key Benefits |
|---|---|---|
| Individual Developers | Pay-As-You-Go | Low-risk experimentation, flexible usage |
| Startups | Standard Package | Predictable costs, rapid integration |
| Enterprises | Custom Solutions | Dedicated deployment, data sovereignty |

What You'll Get

- ✅ Trillion-Parameter Reasoning - Tackle complex business logic and multi-step workflows

- ✅ Top-Tier Code Generation - Support for 50+ programming languages, 3x productivity boost

- ✅ Math & Logic Mastery - Solve financial modeling, scientific computing, and beyond

- ✅ Adaptive Tool Selection - Automatically picks the optimal toolchain for each task

- ✅ Real-Time Information - Web search integration keeps answers fresh and accurate

A Landmark Moment for Chinese AI

Joining the Global Elite

- Parameter Scale: Trillion-level, among the world's largest AI models

- Real-World Performance: Matches or outperforms international leaders on multiple benchmarks

- Technical Innovation: Pioneering adaptive tool calling and test-time scaling

- Iteration Speed: International developers note "they're shipping faster than OpenAI"

What Developers Are Saying

"Qwen consistently outpaces the competition." — International Developer

"This is exactly the Qwen release I've been waiting for." — Harriett Solid

"Qwen's update cadence and capability disclosures have surpassed OpenAI." — Tech Community Member

FAQ

Q1: When will Qwen3-Max-Thinking be available on evolink.ai?

- Soon: official API access through evolink.ai is on the way

Q2: Are your prices better than the official API?

- Bulk purchase discounts

- Zero hidden fees

- Better value than direct API calls

Q3: What integration methods do you support?

- RESTful API

- Python SDK

- JavaScript SDK

- Web-based interface

Q4: How do you handle data security?

- In Transit: TLS 1.3 encryption

- At Rest: We don't store conversation data

- Compliance: GDPR-compliant, China Level 3 certified

- Privacy: Strict adherence to data protection regulations
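
On the client side, you can make sure your own code never negotiates anything older than TLS 1.3. The sketch below uses only Python's standard `ssl` module. The helper name `tls13_only_context` is made up for illustration, and it assumes your Python build links against an OpenSSL version with TLS 1.3 support.

```python
import ssl


def tls13_only_context() -> ssl.SSLContext:
    """Default verified client context that refuses protocol versions below TLS 1.3."""
    context = ssl.create_default_context()  # certificate and hostname checks stay on
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


if __name__ == "__main__":
    ctx = tls13_only_context()
    print(ctx.minimum_version.name)
```

Pass the returned context to `urllib.request.urlopen(url, context=ctx)` or any other client that accepts an `SSLContext`. The handshake will fail rather than fall back if the server only offers an older version.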

Q5: How do I get started?

- Sign up at evolink.ai

- Grab your API key

- Follow our docs—you'll be up and running in 5 minutes
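
As a rough idea of what the third step might look like, here is a sketch that builds a chat request using only the standard library. Everything provider-specific is an assumption: the base URL is a placeholder, the `/chat/completions` path and the payload shape follow the widely used OpenAI-style convention rather than any documented evolink.ai endpoint, and the model ID is a guess. Check the official docs for the real values.

```python
import json
import os
import urllib.request

# Placeholders: take the real base URL and model ID from the provider's docs.
BASE_URL = os.environ.get("LLM_BASE_URL", "https://api.example.com/v1")
MODEL_ID = os.environ.get("LLM_MODEL_ID", "qwen3-max-thinking")


def build_chat_request(api_key: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) a POST request in the assumed OpenAI-style format."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("LLM_API_KEY")
    if key:  # only call the network when a key is configured
        request = build_chat_request(key, "Hello")
        with urllib.request.urlopen(request, timeout=30) as response:
            print(json.load(response))
```

Reading the key from an environment variable keeps it out of source control, and building the request separately from sending it makes the payload easy to inspect or unit-test.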

Try Qwen3-Max-Thinking Today

Official Channels

- Qwen App: https://chat.qwen.ai/

- Official Blog (release announcement): https://qwen.ai/blog?id=qwen3-max-thinking

Further Reading

- AI Model Showdown: GPT-5 vs Claude Opus 4.5 vs Qwen3-Max

- How to Choose the Right AI API Provider

- Best Practices for LLM Application Development

About evolink.ai

evolink.ai is a leading AI integration platform, empowering developers and businesses with:

- 🌐 Multi-Model Access: GPT, Claude, Gemini, Qwen, and more

- 💼 Enterprise-Ready: Stable, secure, and compliant

- 💰 Best-in-Class Value: Official channels, transparent pricing

- 🛠️ Developer-First: Comprehensive docs, quick integration
