The arrival of GPT-5.2 in December 2025 marks a significant paradigm shift in AI development. We're moving beyond models that just generate plausible text to systems capable of reliable reasoning. For engineers and CTOs, this isn't just an incremental upgrade; it's a fundamental change in how we can architect and deploy mission-critical applications. GPT-5.2 isn't just "smarter"—it's optimized for the high-concurrency, complex workflows that define modern enterprise software.

Key Takeaways

- Advanced Reasoning: GPT-5.2 demonstrates a significant leap in "System 2" logical reasoning, reducing hallucinations and enabling more complex problem-solving in a single pass.

- Production-Ready?: While immensely powerful, the model introduces a critical tradeoff between intelligence, latency, and cost. It's not a universal replacement for GPT-4o.

- Agentic Capabilities: Vastly improved function calling and JSON mode adherence make it a superior choice for building reliable autonomous agents and structured data extraction pipelines.

- Integration & Cost: Direct access is limited and costly. A unified API layer like EvoLink is essential for managing costs, ensuring reliability with model fallbacks, and simplifying integration.

What GPT-5.2 Is: A Look at the Architecture

GPT-5.2 represents a major architectural evolution. While OpenAI remains tight-lipped about the exact implementation, the performance gains point to key advancements:

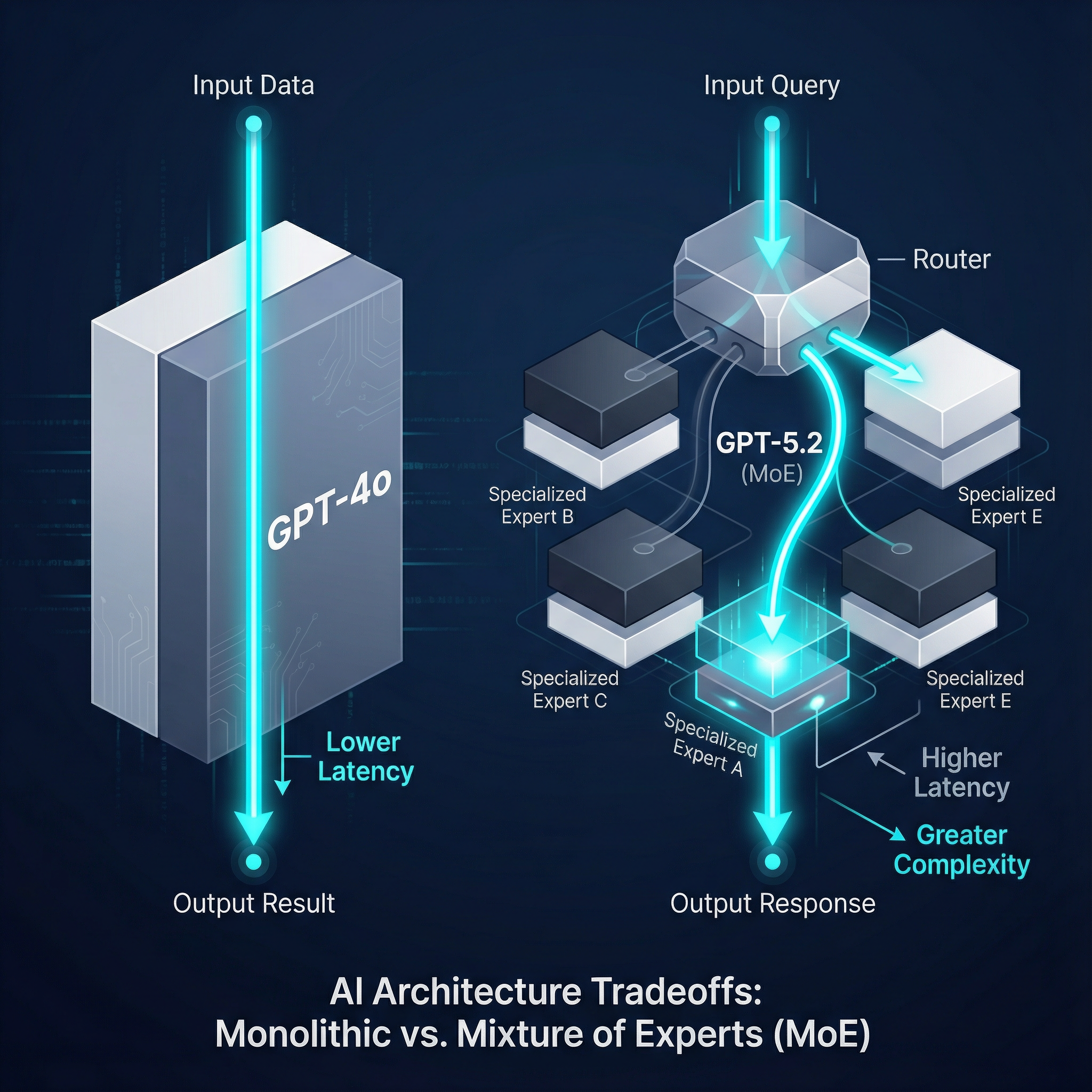

- Architecture: Widely believed to be a sophisticated Mixture of Experts (MoE) model. Unlike its predecessors, it likely routes queries to specialized sub-networks, improving efficiency and capability on domain-specific tasks (e.g., coding vs. creative writing).

- Context Window: Expanded to a robust 400K tokens, enabling deeper analysis of large documents, codebases, or complex conversational histories.

- Multimodality: GPT-5.2 is natively multimodal, processing text, images, and audio inputs with a more unified understanding. This allows it to interpret complex data visualizations, UIs, and audio cues instantly, without chaining separate models.

- Reasoning Tokens: Speculation points to a new mechanism, possibly "reasoning tokens," that allows the model to perform more explicit "System 2" thinking before generating a final answer, improving its performance on complex logical and mathematical problems.

Why GPT-5.2 Matters for Production Systems

For those building real-world products, the value of a new model is measured in reliability and performance, not just benchmark scores.

1. Reliability

The most significant advancement is the dramatic reduction in hallucination rates. For mission-critical applications in legal, medical, or financial analysis, this enhanced reliability moves the needle from "experimental" to "dependable."

2. Reasoning Depth

Where GPT-4 often required complex prompt chains to deconstruct a problem, GPT-5.2 can handle multi-step logic in a single inference. This simplifies application architecture and reduces the points of failure.

3. Agentic Capability

Function calling and JSON mode are now "rock-solid," according to early developer feedback. The model's ability to reliably adhere to structured data formats makes it the new gold standard for powering autonomous agents and predictable API-driven workflows.

The Tradeoff

This leap in intelligence comes at a cost. GPT-5.2 has higher latency and a higher price per token than its predecessors. The core engineering challenge is no longer "Is the model smart enough?" but "Is the added intelligence worth the latency and cost for this specific use case?"

Unlock GPT-5.2 for Your Production Environment

Tired of waitlists and unpredictable costs? Get immediate, scalable access to the GPT-5.2 API without the friction. EvoLink provides a unified API with wholesale volume pricing and enterprise-grade reliability.

Core Capabilities & Strengths

GPT-5.2's strengths are most apparent in tasks requiring deep expertise and precision.

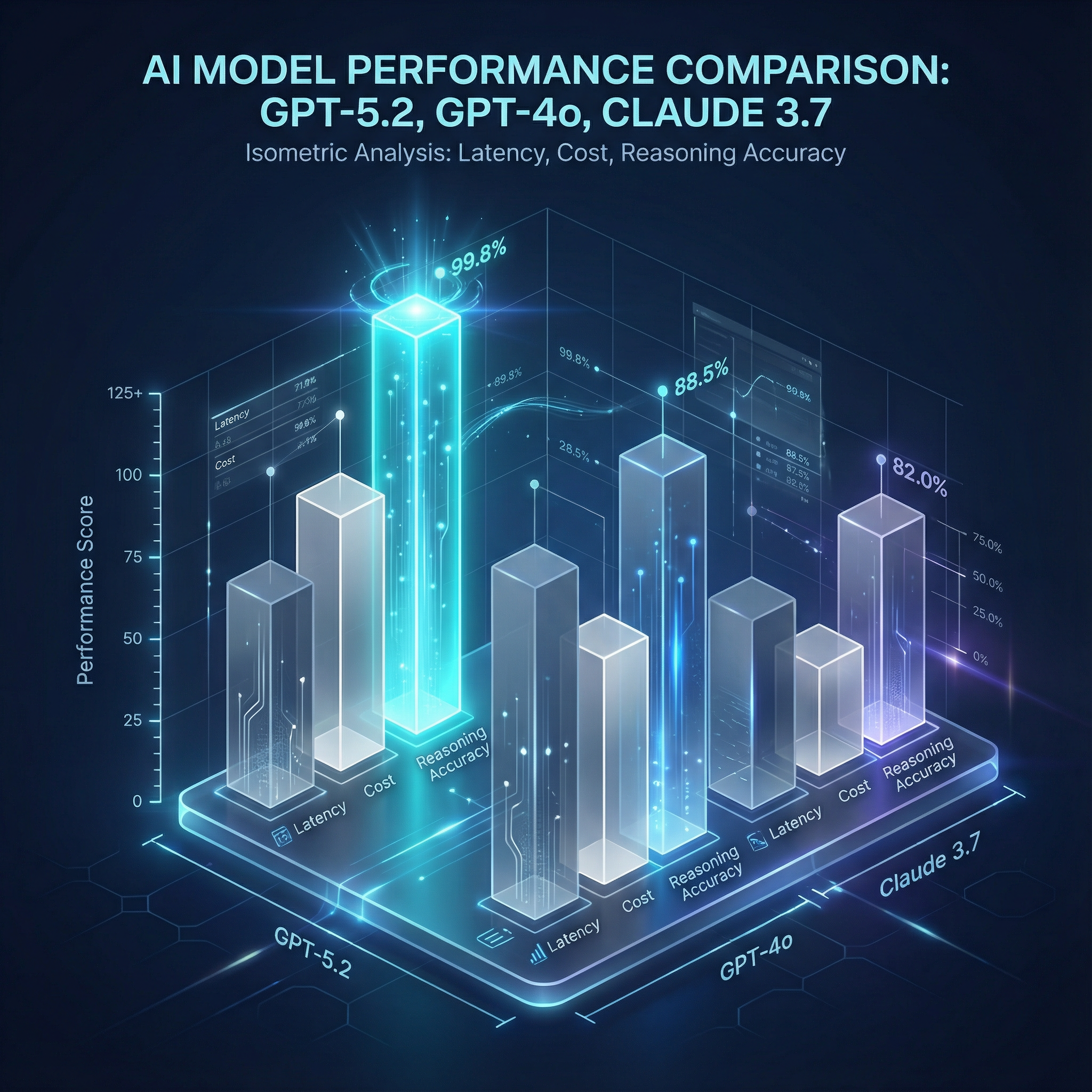

- Advanced Reasoning: Outperforms GPT-4o and Claude 3.7 on key benchmarks like MATH and GSM8K, demonstrating its ability to solve graduate-level mathematical and logical problems.

- Coding Proficiency: Shows significant improvements on HumanEval and SWE-bench. It can not only generate code but also understand and debug complex repositories, making it a powerful pair programmer.

- Multimodal Fluidity: Can instantly analyze financial charts, explain user interface screenshots to an automation script, or transcribe and summarize audio—all through a single API call.

- Long-Context Recall: Excels at "Needle in a Haystack" tests, accurately recalling specific facts buried deep within its 400K token context window. This is critical for RAG systems analyzing dense research papers or legal documents.

Benchmarks & Tradeoffs (The "Real" Numbers)

While benchmarks tell part of the story, production metrics matter more. Here's a pragmatic comparison based on early data and community reports.

| Model | Input Cost ($/1M tokens) | Output Cost ($/1M tokens) | Context Window |

|---|---|---|---|

| GPT-5.2 | $1.75 | $14.00 | 400K |

| GPT-4o | $1.25 | $10.00 | 128K |

| Claude 3.7 | $1.50 | $12.00 | 200K |

| Llama 4 (Open) | $0.50 | $4.00 | 100K |

Key Metrics

- Latency (TTFT): GPT-5.2's Time To First Token is noticeably higher than GPT-4o's. For real-time conversational chatbots, this can degrade the user experience. It's better suited for asynchronous tasks where a few seconds of processing time is acceptable.

- Cost per Token: At $1.75 (input) and $14.00 (output) per million tokens, it's the premium option. A complex task that is cheaper on GPT-5.2 (due to fewer retries) might still cost more in absolute terms than a chained-prompt approach on GPT-4o.

- Throughput (TPS): Official providers often impose strict rate limits ("Tier 5" access), making it difficult to scale. Production systems require a solution that can handle high tokens-per-second (TPS) and manage concurrency, a core benefit of using an API gateway like EvoLink.

Developer Sentiment & Community Insights

The engineering community's reaction has been pragmatic and insightful, cutting through the hype.

Praise

"Finally solves complex logic tasks with fewer hallucinations. We replaced a 5-step prompt chain with a single call to GPT-5.2."

"JSON mode is rock-solid for API responses. We're seeing 99.9% compliance, which was unheard of with previous models."

Complaints

"Higher latency for smarter outputs. It's a tough sell for our interactive features."

"Migrating was smooth, but the cost-per-token adds up fast. You have to be very deliberate about which tasks you offload to it."

A recurring theme on developer forums is the "cost vs. capability" calculation. One Reddit user noted:

"EvoLink's fallback feature saved us during peak loads. We route simple queries to 4o and only use 5.2 for the heavy lifting. It's the only way to make the economics work."

Pricing & Cost Efficiency

Running GPT-5.2 at scale is a significant financial commitment. The "Tier 5" access problem through official providers means many businesses hit a wall due to strict rate limits and waitlists. Furthermore, managing billing across multiple models and providers creates unnecessary operational overhead.

This is where an API infrastructure layer becomes critical. EvoLink addresses these challenges directly:

- Wholesale Volume Pricing: By aggregating demand, EvoLink offers access to models like GPT-5.2 at volume-discounted rates that are typically unavailable to individual companies.

- Unified Billing: Consolidate spending across GPT-5.2, GPT-4o, Claude, and other models into a single invoice. This simplifies cost tracking and budget management for your entire AI stack.

- Smart Routing & Fallbacks: Don't pay premium prices for simple tasks. Use EvoLink to dynamically route requests to the most cost-effective model that can handle the job, with automatic fallbacks to ensure uptime.

How to Integrate GPT-5.2 via API

base_url to the EvoLink endpoint. This single change unlocks model-agnostic routing, fallbacks, and cost optimization without altering your core application logic.Here is a clean Python snippet demonstrating a streaming call to GPT-5.2 through the EvoLink API gateway.

import requests

url = "https://api.evolink.ai/v1/chat/completions"

payload = {

"model": "gpt-5.2",

"messages": [

{

"role": "user",

"content": "Please introduce yourself"

}

],

"temperature": 1,

"stream": False,

"top_p": 1,

"frequency_penalty": 0,

"presence_penalty": 0

}

headers = {

"Authorization": "Bearer <token>",

"Content-Type": "application/json"

}

response = requests.post(url, json=payload, headers=headers)

print(response.text)Migration Checklist: Is Your App Ready for GPT-5.2?

- Identify High-Value Use Cases: Pinpoint tasks where deep reasoning and low hallucination are critical (e.g., legal contract analysis, complex code generation).

- Assess Latency Tolerance: Can your user experience tolerate a slightly longer response time for a much smarter answer?

- Implement a Router/Gateway: Use a service like EvoLink to avoid vendor lock-in and enable dynamic switching between GPT-5.2 and more economical models like GPT-4o.

- Rewrite Critical Prompts: While many prompts work out-of-the-box, fine-tune your most important system prompts to leverage GPT-5.2's advanced reasoning capabilities.

- Monitor Costs Closely: Set up dashboards to track token consumption. The cost of GPT-5.2 can escalate quickly if used for every single query.

Use Cases & Decision Guide

Choosing the right model is a critical architectural decision.

When to use GPT-5.2

- Autonomous Agents: When building agents that need to perform multi-step tasks with high reliability and use tools (function calls) correctly every time.

- Complex RAG: For question-answering systems that must synthesize information from multiple dense, technical documents with high fidelity.

- Advanced Coding Assistants: For tools that need to understand entire codebases, generate complex logic, and identify subtle bugs.

- Legal & Medical Analysis: In domains where precision is non-negotiable and hallucinations are unacceptable.

When to stay on GPT-4o / Mini

- High-Volume Classifiers: For simple text classification, sentiment analysis, or data extraction where speed and low cost are paramount.

- Simple Chatbots: When the goal is conversational flow and quick responses rather than deep problem-solving.

- Latency-Critical Flows: For real-time applications like live transcription or interactive search suggestions where every millisecond counts.

Conclusion: The Strategic Upgrade Path

GPT-5.2 is more than just a powerful new model; it's a specialized tool for high-stakes reasoning tasks. A blanket upgrade of all your AI workflows to GPT-5.2 is not just impractical due to cost and latency—it's poor engineering.

The future of production AI is not about finding the one "best" model, but about building a flexible, intelligent, and cost-aware system.

Frequently Asked Questions (FAQ)

1. How does GPT-5.2's pricing compare to GPT-4o?

GPT-5.2 is significantly more expensive per token. On average, you can expect input costs to be ~40% higher and output costs to be ~40% higher than GPT-4o. This makes cost-management strategies essential.

2. Is prompt engineering different for GPT-5.2?

While many prompts will work as-is, you may not be leveraging its full power. Prompts can be simplified, with less need for "chain-of-thought" or few-shot examples, as the model's inherent reasoning is stronger.

3. How reliable is GPT-5.2's JSON mode?

Extremely reliable. Developer feedback indicates it's one of the model's standout features, making it perfect for structured data extraction and building dependable agentic workflows.

4. What are the main benefits of using EvoLink for GPT-5.2?

EvoLink provides a unified API to access GPT-5.2 alongside other models, consolidated billing, volume pricing discounts, smart routing to optimize cost, and fallback capabilities to improve reliability.

5. How does GPT-5.2's context window compare to Claude 3.7?

GPT-5.2 features a 400K token context window, which is double the 200K context window of Claude 3.7. This allows it to process and analyze much larger volumes of information in a single pass.