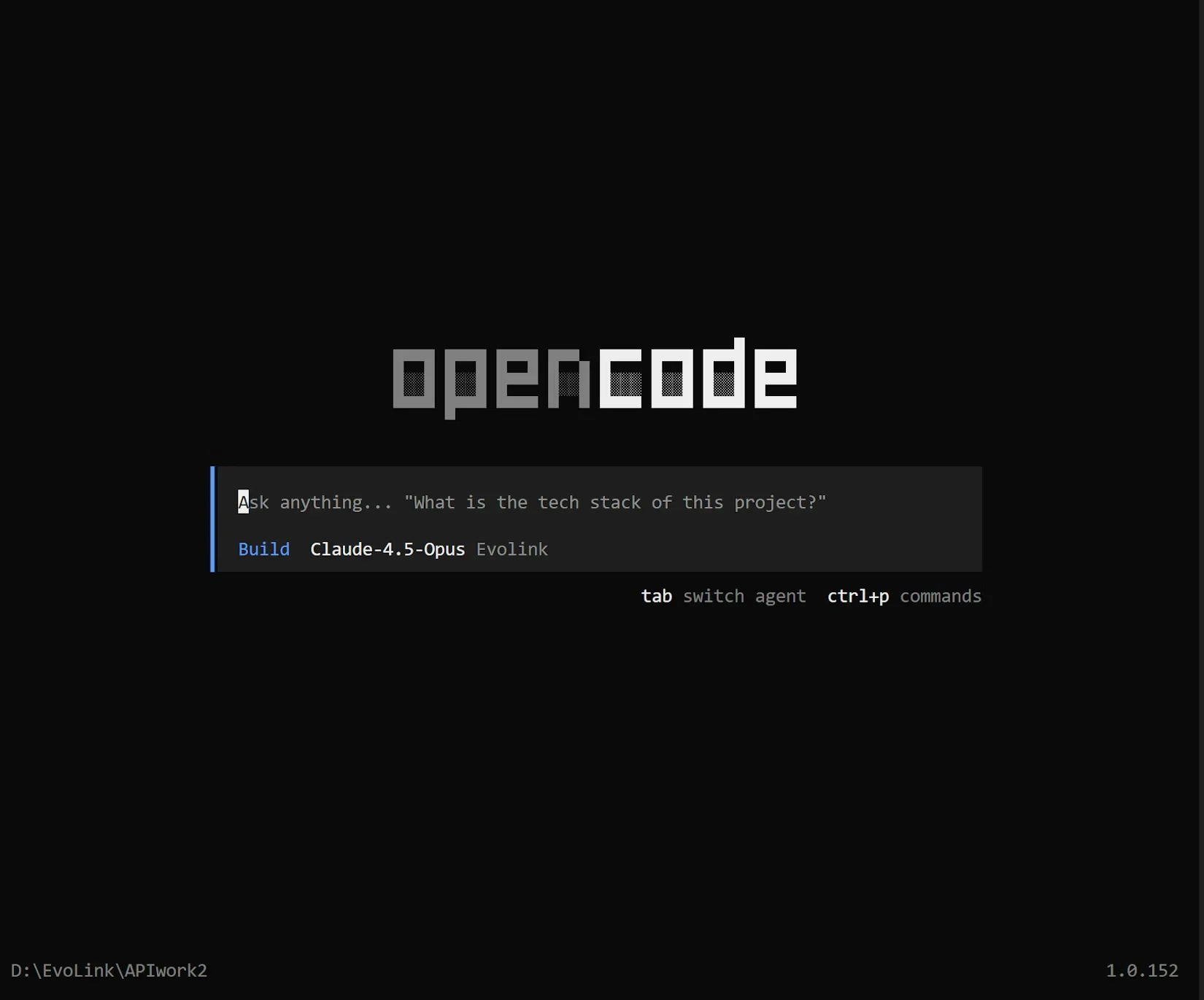

OpenCode Integration Guide: How to Access Claude 4.5, GPT-5.2 & Gemini 3 Pro Through EvoLink API (2026)

Introduction: The New Era of Terminal-Based AI

In the rapidly evolving landscape of 2026, the developer's terminal has transformed from a simple command line into a sophisticated command center for Artificial Intelligence. The days of context-switching between your IDE, a browser-based chatbot, and API documentation are over. Today, the most efficient developers are integrating AI agents directly into their CLI workflows.

Part 1: The Components of Your AI Stack

What is OpenCode?

OpenCode is an open-source AI coding agent that runs in your terminal. With it, you can:

- Chat with your codebase using natural language.
- Execute terminal commands autonomously (with your permission).
- Edit files across your project structure.
- Debug errors by reading stack traces directly from command output.

What is EvoLink?

EvoLink is a unified API gateway for AI models. Its main features:

- Unified Access: One API key gives you access to OpenAI, Anthropic, Google, Alibaba, and ByteDance models.
- Cost Efficiency: Through Smart Routing, EvoLink automatically routes each request to the most cost-effective provider for the requested model. EvoLink advertises savings of 20-70% compared with using providers directly.
- Reliability: With an asynchronous task architecture and automatic failover, EvoLink advertises 99.9% uptime, so your coding agent is less likely to "hang" during a critical debug session.

Part 2: Why Integrate OpenCode with EvoLink?

The integration of OpenCode and EvoLink represents the "Skyscraper Principle" of software development—building on strong foundations to reach new heights.

- Model Agility: You can switch from Claude 4.5 Opus for writing complex classes to Gemini 3 Pro for analyzing a 500-page documentation PDF without changing your API key or provider setup.
- Zero-Code Migration: EvoLink is fully compatible with the OpenAI API format. This means OpenCode "thinks" it is talking to a standard provider, while EvoLink handles the complex routing in the background.
- High-Density Information Flow: By combining OpenCode's ability to read local files with EvoLink's access to long-context models, you can feed entire repositories into the context window for analysis, as long as they fit within the model's limit.

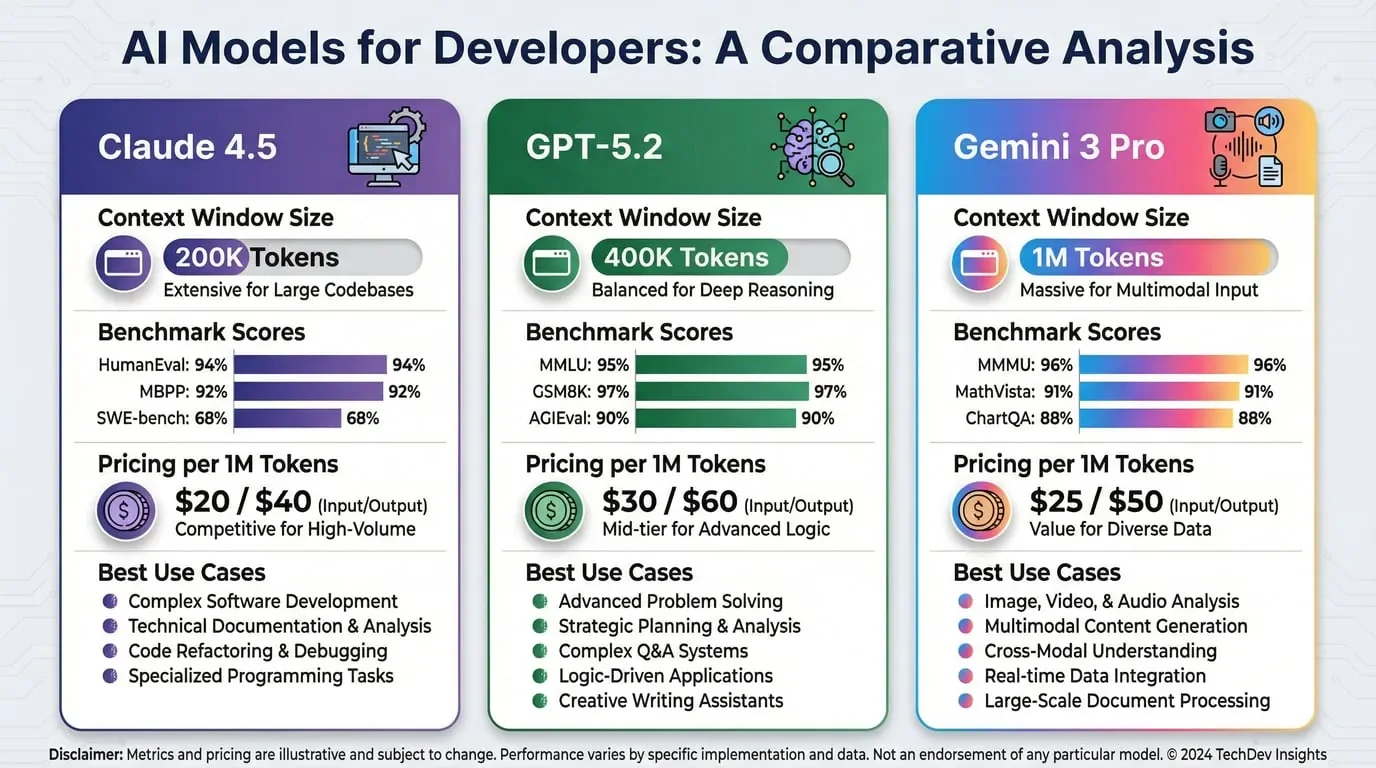

Part 3: Understanding the Three Powerhouse Models (2026 Edition)

1. Claude 4.5 (Sonnet & Opus) - The Coding Architect

- Best For: Writing clean, maintainable code, refactoring, and architectural planning.
- The Stats: Claude 4.5 Opus holds the top spot on the SWE-bench Verified leaderboard with a score of 80.9%, the highest of the three models compared here at solving real-world GitHub issues.
- Why use it in OpenCode: It produces the most "human-like" code structure and is less prone to hallucinating non-existent libraries. It excels at following complex, multi-step instructions.

2. GPT-5.2 - The Reasoning Engine

- Best For: Complex logic, mathematical algorithms, and "thinking through" obscure bugs.
- The Stats: GPT-5.2 achieves a perfect 100% on the AIME 2025 (math) benchmark and 52.9% on ARC-AGI-2, significantly outperforming competitors in abstract reasoning.
- Why use it in OpenCode: When you are stuck on a logic error that defies explanation, or need to generate complex regular expressions or SQL queries, GPT-5.2 is the superior choice.

3. Gemini 3 Pro - The Context & Multimodal King

- Best For: Analyzing massive codebases, reading documentation images, and high-speed iteration.
- The Stats: Features a 1-million-token context window and industry-leading speed (approx. 180 tokens/second).
- Why use it in OpenCode: Use Gemini 3 Pro when you need to feed your entire project directory into the prompt to check for global consistency. It is also the most cost-effective option for high-volume tasks.

| Feature | Claude 4.5 Opus | GPT-5.2 | Gemini 3 Pro |
|---|---|---|---|
| Primary Strength | Code Quality & Safety | Logic & Reasoning | Context & Speed |
| Context Window | 200k tokens | 400k tokens | 1 million tokens |
| SWE-bench Verified | 80.9% (Leader) | 80.0% | 76.2% |
| Best For | Refactoring, New Features | Hard Debugging, Math | Documentation, Large Repos |

Part 4: Step-by-Step Integration Guide

This guide assumes you are working in a Unix-like environment (macOS/Linux) or WSL for Windows.

Prerequisites

- Terminal Emulator: iTerm2 (macOS), Windows Terminal, or Hyper.
- EvoLink Account: A valid account at evolink.ai.
- Git: Installed on your machine.
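The installer in Step 1 is downloaded with `curl` and piped to `bash`, so it is worth confirming those tools are present along with Git before you start. A minimal sketch; `require_tools` is a hypothetical helper name, not part of OpenCode or EvoLink.

```bash
# Hypothetical helper: report which command-line tools are missing.
# Prints "missing: <tool>" to stderr for each absent tool and returns 1 if
# any are missing, 0 otherwise.
require_tools() {
  missing=0
  for tool in "$@"; do
    # command -v succeeds only if the name resolves to a command on PATH
    # (or a shell builtin/function).
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_tools git curl bash` prints each missing tool and returns a non-zero status if any are absent.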

Step 1: Install OpenCode

If you haven't installed OpenCode yet, run the following command in your terminal. This script automatically detects your OS and installs the necessary binaries.

```bash
curl -fsSL https://opencode.ai/install | bash
```

Confirm the installation by printing the version:

```bash
opencode --version
```

Step 2: Get Your EvoLink API Key

- Log in to your EvoLink Dashboard.
- Navigate to the API Keys section.
- Click Create New Key.
- Copy the key string (it starts with `sk-evo...`). Do not share this key.
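Before pasting the key anywhere, a quick format check catches truncated or padded copies. The sketch below assumes you keep the key in an environment variable named `EVOLINK_API_KEY` (a name chosen for this example, not one EvoLink or OpenCode requires) and uses a hypothetical helper, `check_evolink_key`, that only checks for the `sk-evo` prefix described above plus common copy-paste mistakes. It never contacts EvoLink, so it cannot tell you whether the key is actually valid.

```bash
# Hypothetical helper: check that a string *looks like* an EvoLink key.
# It only checks the "sk-evo" prefix and rejects empty or whitespace-containing
# values (common copy-paste mistakes). It never contacts EvoLink.
check_evolink_key() {
  case "$1" in
    "")
      echo "error: key is empty" >&2; return 1 ;;
    *[[:space:]]*)
      echo "error: key contains whitespace (partial or padded copy?)" >&2; return 1 ;;
    sk-evo*)
      return 0 ;;
    *)
      echo "error: key does not start with sk-evo" >&2; return 1 ;;
  esac
}
```

For example: `export EVOLINK_API_KEY='sk-evo...'` followed by `check_evolink_key "$EVOLINK_API_KEY" && echo "format looks OK"`.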

Step 2.5: Initialize OpenCode Provider

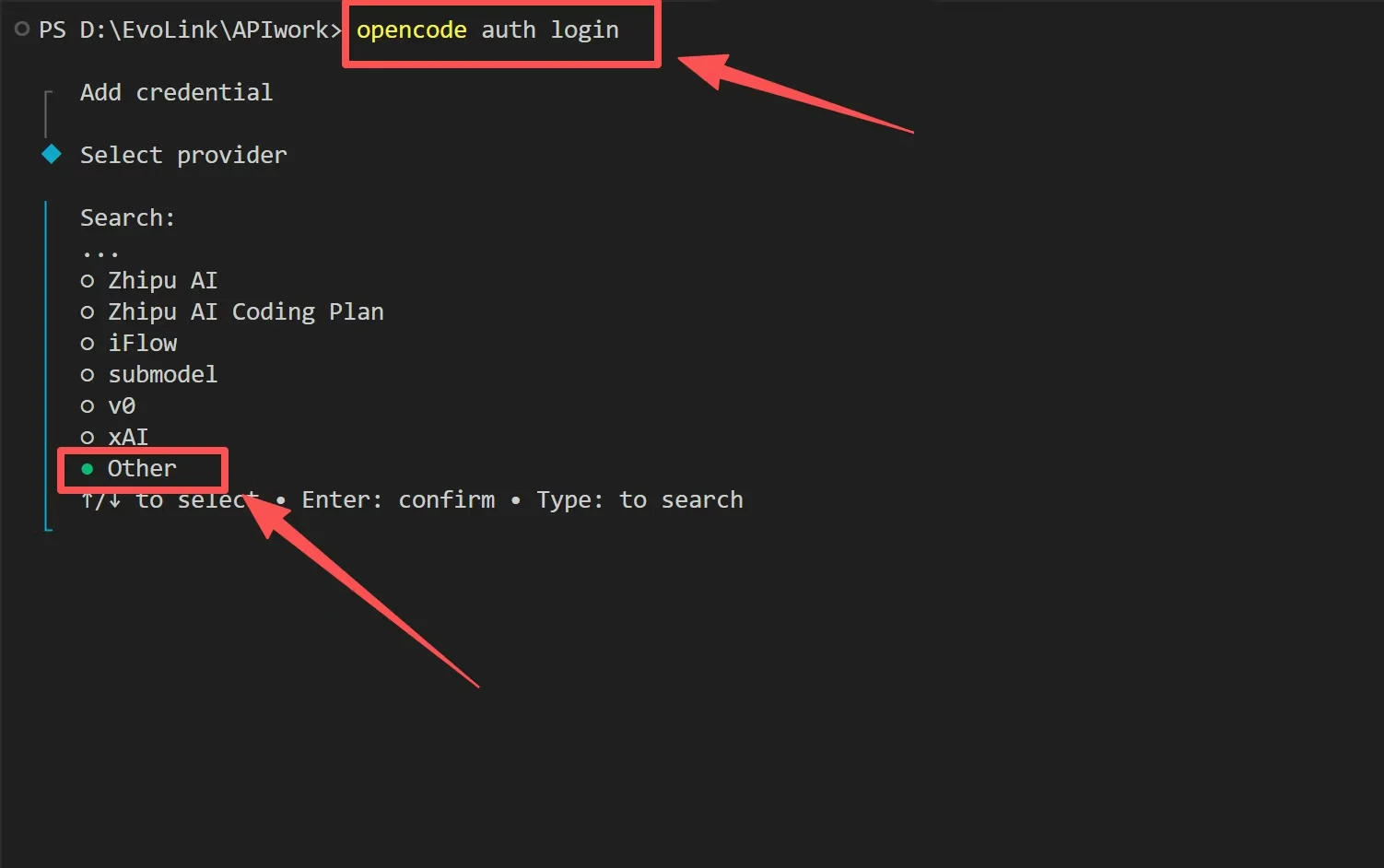

Before configuring the JSON file, you need to register EvoLink as a custom provider in OpenCode's credential manager. This is a one-time setup that allows OpenCode to recognize EvoLink as a valid provider.

- Launch OpenCode for the first time:

opencode- When OpenCode starts, it will prompt you to connect a provider. In the provider list, scroll down and select other (you can search for it by typing).

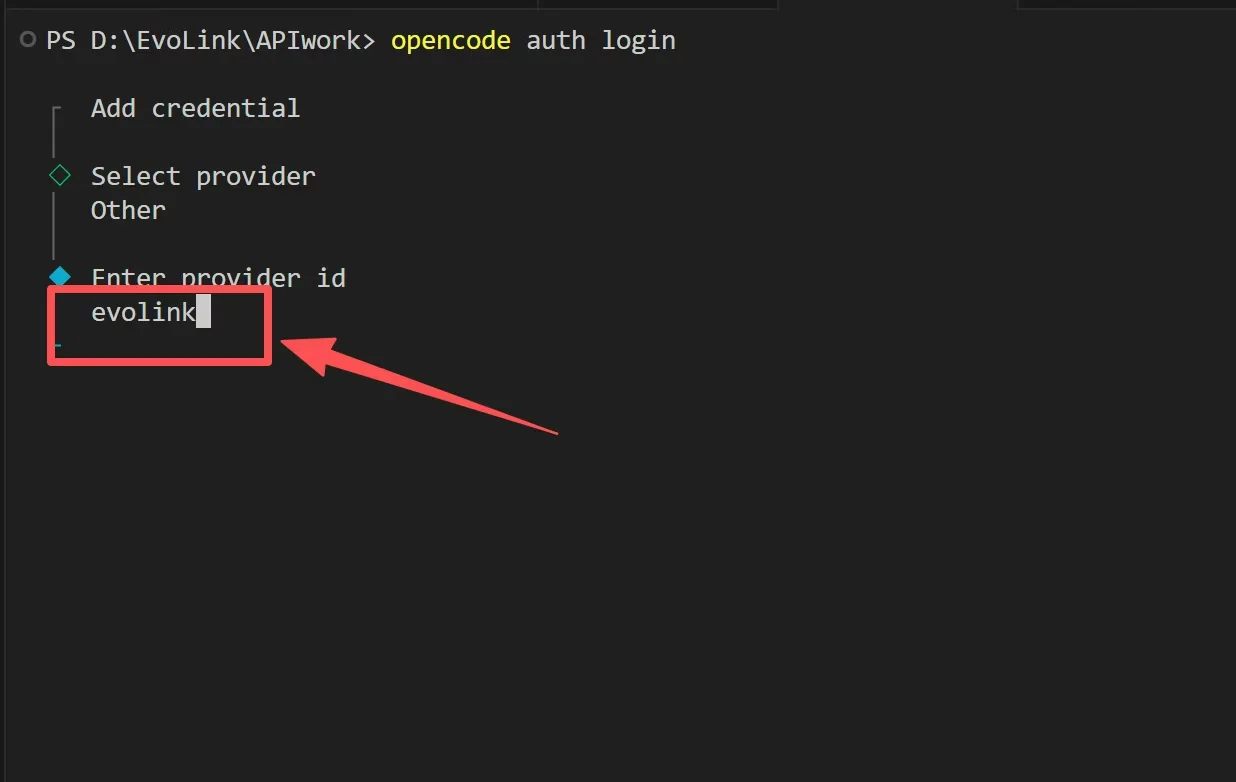

- Enter Provider ID: When prompted, type

evolinkas the provider identifier. This creates a custom provider entry in OpenCode's system.

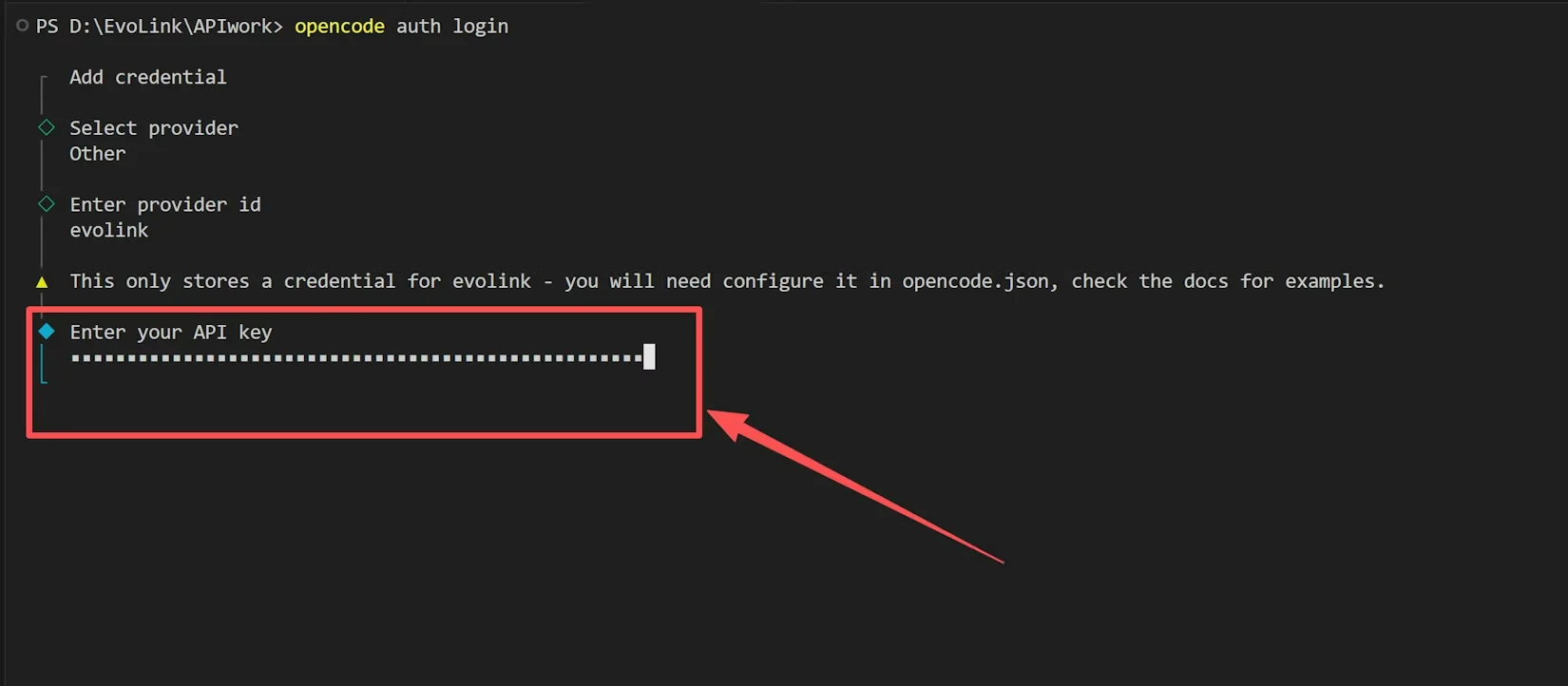

- Enter API Key: You can enter any placeholder value here (e.g.,

adminortemp). The actual EvoLink API key will be referenced via the configuration file in the next step.

evolink in OpenCode's local credential manager. The configuration file we'll create next will provide the actual connection details.Step 3: Configure OpenCode

-

Locate/Create Config Directory:

-

macOS/Linux:

~/.config/opencode/ -

Windows:

%AppData%\opencode\

For Windows users: PressWin + R, paste%AppData%\opencode\, and press Enter to open the directory:

image.png -

-

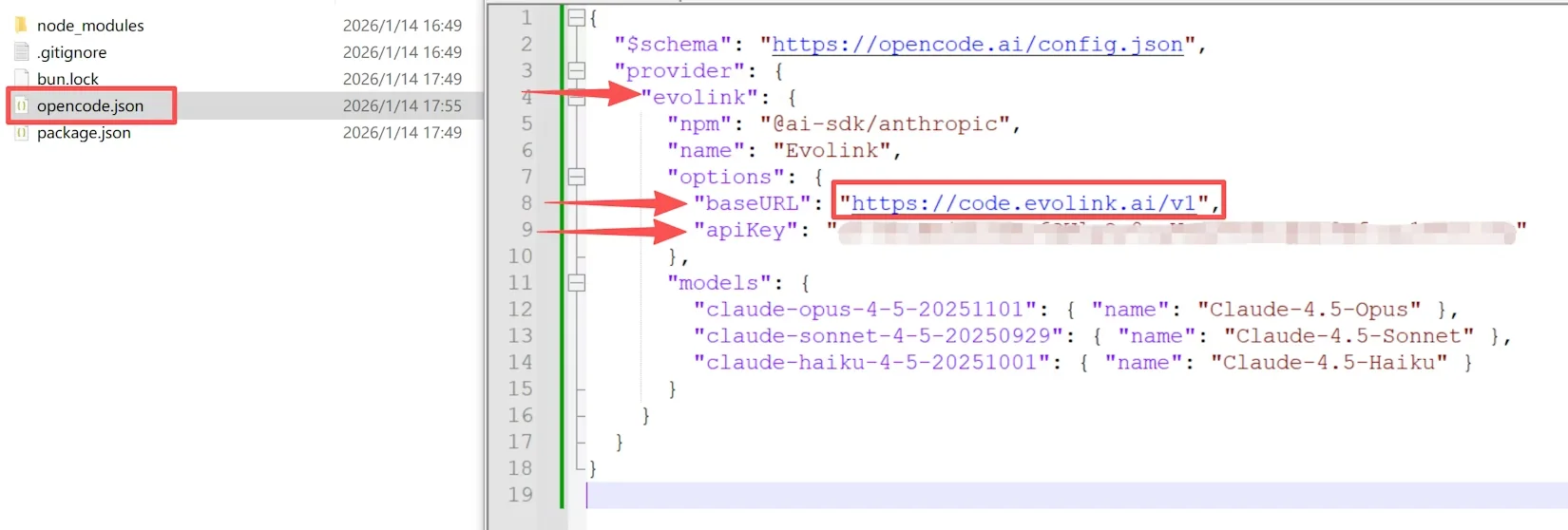

Create the

opencode.jsonfile:

```bash
mkdir -p ~/.config/opencode
nano ~/.config/opencode/opencode.json
```

- Paste the following configuration:

Note: Replace `your-evolink-api-key` with the key you generated in Step 2.

```json
{
  "$schema": "https://opencode.ai/config.json",
  "provider": {
    "evolink": {
      "npm": "@ai-sdk/anthropic",
      "name": "Evolink",
      "options": {
        "baseURL": "https://code.evolink.ai/v1",
        "apiKey": "your-evolink-api-key"
      },
      "models": {
        "claude-opus-4-5-20251101": {
          "name": "Claude-4.5-Opus"
        },
        "claude-sonnet-4-5-20250929": {
          "name": "Claude-4.5-Sonnet"
        },
        "claude-haiku-4-5-20251001": {
          "name": "Claude-4.5-Haiku"
        }
      }
    }
  }
}
```

Note: This configuration tells OpenCode to load the `@ai-sdk/anthropic` adapter for the `evolink` provider and point it at EvoLink's base URL. EvoLink handles translation to each vendor's native format behind the scenes, which is what lets OpenCode reach these models through a single, standard protocol. Each key under `models` is the model ID sent to EvoLink; `name` is only the label shown in OpenCode.

Step 4: Verify Connectivity

Launch OpenCode in your terminal:

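```bash
opencode
```

In the input box, type:

"Hello, which model are you and who is your provider?"

If configured correctly, you will get a reply from the model you selected (e.g., "I am Claude..."). Models do not always identify themselves accurately, so any coherent reply confirms that OpenCode is reaching EvoLink.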
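If OpenCode does not answer, the configuration file is the first thing to check. The following is a minimal pre-flight sketch: `check_opencode_config` is a hypothetical helper, and it assumes `python3` is installed (its standard-library `json.tool` module is used only as a JSON syntax checker). It checks that the file parses as JSON, that the `your-evolink-api-key` placeholder has been replaced, and that EvoLink's base URL is present.

```bash
# Hypothetical pre-flight check for opencode.json.
# Assumes python3 is available; its built-in json.tool module is used only
# to confirm that the file parses as JSON.
check_opencode_config() {
  cfg="${1:-$HOME/.config/opencode/opencode.json}"

  if [ ! -f "$cfg" ]; then
    echo "error: $cfg not found" >&2
    return 1
  fi

  # 1. Syntax: catches missing commas, trailing commas, and stray braces.
  if ! python3 -m json.tool "$cfg" >/dev/null 2>&1; then
    echo "error: $cfg is not valid JSON" >&2
    return 1
  fi

  # 2. The placeholder from the example config must have been replaced.
  if grep -qF 'your-evolink-api-key' "$cfg"; then
    echo "error: apiKey still contains the placeholder value" >&2
    return 1
  fi

  # 3. Warn (but do not fail) if EvoLink's base URL is absent.
  if ! grep -qF 'https://code.evolink.ai/v1' "$cfg"; then
    echo "warning: EvoLink baseURL not found in $cfg" >&2
  fi

  echo "ok: $cfg"
}
```

Run `check_opencode_config` with no argument to check `~/.config/opencode/opencode.json`, or pass a path to check another file.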

Part 5: Advanced Configuration & Model Switching

Once inside OpenCode, you are not locked into a single model. You can switch models dynamically based on the task at hand.

Switching Models via CLI

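You can specify the model directly when launching the tool. The value takes the form `provider/model`, where `model` is one of the IDs under `models` in your `opencode.json`:

```bash
# For a quick check
opencode --model evolink/claude-haiku-4-5-20251001

# For a heavy coding session
opencode --model evolink/claude-sonnet-4-5-20250929
```

Switching Models via TUI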

Inside OpenCode, use the `/models` command to view available configurations.

- Type `/models` and press Enter.
- Select the model ID from your `opencode.json` list.
- Press Enter to switch immediately.
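If you switch between the same few models often, a small shell function can encode the task-to-model choices from Part 3. This is a hypothetical helper, `oc_task`, that assumes the three Claude entries from the `opencode.json` above and an OpenCode version that accepts `--model` in `provider/model` form. Setting `DRY_RUN=1` prints the command instead of running it.

```bash
# Hypothetical wrapper: pick a model from opencode.json by task type, then
# launch OpenCode with --model provider/model.
# Assumes the "evolink" provider and the three Claude model IDs shown above.
oc_task() {
  case "$1" in
    quick)    model="evolink/claude-haiku-4-5-20251001" ;;   # cheap, fast
    code)     model="evolink/claude-sonnet-4-5-20250929" ;;  # everyday coding
    refactor) model="evolink/claude-opus-4-5-20251101" ;;    # heavy refactors
    *)
      echo "usage: oc_task {quick|code|refactor}" >&2
      return 2 ;;
  esac
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Only show what would be run.
    echo "opencode --model $model"
  else
    opencode --model "$model"
  fi
}
```

`oc_task refactor` launches OpenCode on Opus, while `DRY_RUN=1 oc_task quick` only shows which command would run. Add further cases (for example, GPT-5.2 or Gemini 3 Pro) only after adding the matching model IDs to `opencode.json`.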

Part 6: Best Practices for High-Density Development

To truly leverage the "Skyscraper" potential of this integration, follow these best practices:

1. The Context Strategy

- When using Gemini 3 Pro: Feel free to run commands like `/add src/` to add your entire source folder. Gemini's 1M-token context window can handle the load, allowing it to understand the full dependency graph of your project.
- When using GPT-5.2: Be more selective. Add only the relevant files (`/add src/utils/helper.ts`) so the reasoning engine focuses strictly on the logic at hand without distraction.
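Whether adding a whole folder is sensible depends on how large it is in tokens. A commonly cited rule of thumb is roughly four characters per token for English text; real tokenizers differ by model, so treat the result as a ballpark. The sketch below (the helper names `estimate_tokens` and `fits_in_context` are hypothetical) compares that estimate with a context-window size such as those listed in Part 3 (200k, 400k, and 1 million tokens). Point it at a source folder rather than the repository root so `.git` and build output are not counted, and leave headroom for the conversation and the model's reply.

```bash
# Rough heuristic: ~4 characters per token (a commonly cited rule of thumb
# for English text). Real tokenizers differ by model; this is an estimate.
estimate_tokens() {
  # Total byte count of every regular file under the given path
  # (tr keeps only the digits, since some wc versions pad with spaces).
  bytes=$(find "${1:-.}" -type f -exec cat {} + | wc -c | tr -cd '0-9')
  echo $(( bytes / 4 ))
}

# Compare the estimate against a context window size given in tokens.
fits_in_context() {
  tokens=$(estimate_tokens "$1")
  window="$2"
  if [ "$tokens" -le "$window" ]; then
    echo "fits: ~$tokens of $window tokens"
  else
    echo "too large: ~$tokens tokens > $window"
    return 1
  fi
}
```

For example, `fits_in_context src/ 1000000` for Gemini 3 Pro, or `fits_in_context src/ 400000` for GPT-5.2.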

2. Intelligent Routing for Cost Control

- Configure a `gpt-4o-mini` or `gemini-3-flash` entry in your `opencode.json` for writing simple unit tests or comments. These models cost a fraction of the frontier models but are sufficient for basic tasks.

3. Security First

Never commit your `opencode.json` file to a public repository; it contains your API key. Add `.config/opencode/` to your global `.gitignore` file:

```bash
echo ".config/opencode/" >> ~/.gitignore_global
git config --global core.excludesfile ~/.gitignore_global
```

Part 7: Troubleshooting Common Issues

- Authentication errors. Fix: Check your EvoLink API key and make sure you copied the full `sk-evo...` string. Also verify that your EvoLink account has a positive credit balance.
- Model not found. Fix: Make sure each model ID in your JSON (the keys under `models`, e.g., `claude-opus-4-5-20251101`) exactly matches an ID supported by EvoLink. Check EvoLink's Model List for the exact ID strings.
- Slow responses. Fix: While EvoLink is fast, network latency varies. Check whether you are using a very large model (like Opus) for a simple query, and switch to `gpt-5.2` or `gemini-3-flash` for faster interactions.
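To tell whether a failure comes from your key and account or from `opencode.json`, you can call EvoLink directly and bypass OpenCode. This sketch assumes that, because the configuration uses the `@ai-sdk/anthropic` adapter with the base URL `https://code.evolink.ai/v1`, EvoLink accepts Anthropic Messages-style requests at `https://code.evolink.ai/v1/messages` with the standard `x-api-key` and `anthropic-version` headers; verify this against EvoLink's documentation. It also assumes your key is exported as `EVOLINK_API_KEY`, a variable name chosen for this example. `build_smoke_payload` and `evolink_smoke_test` are hypothetical helper names.

```bash
# Hypothetical direct check of EvoLink, bypassing OpenCode.
# Assumption: EvoLink accepts Anthropic Messages-style requests under the same
# base URL that opencode.json passes to the @ai-sdk/anthropic adapter.
EVOLINK_BASE_URL="${EVOLINK_BASE_URL:-https://code.evolink.ai/v1}"

# Build a minimal Messages request body for the given model ID.
build_smoke_payload() {
  printf '{"model":"%s","max_tokens":64,"messages":[{"role":"user","content":"Hello, which model are you?"}]}' "$1"
}

# Send the request; prints the raw JSON reply followed by the HTTP status.
# Requires curl, network access, and EVOLINK_API_KEY in the environment.
evolink_smoke_test() {
  if [ -z "${EVOLINK_API_KEY:-}" ]; then
    echo "error: EVOLINK_API_KEY is not set" >&2
    return 1
  fi
  curl -sS "$EVOLINK_BASE_URL/messages" \
    -H "x-api-key: $EVOLINK_API_KEY" \
    -H "anthropic-version: 2023-06-01" \
    -H "content-type: application/json" \
    -d "$(build_smoke_payload "${1:-claude-haiku-4-5-20251001}")" \
    -w '\nHTTP status: %{http_code}\n'
}
```

Running `evolink_smoke_test claude-haiku-4-5-20251001` should print a JSON reply. An error here points to the key, credit balance, or model ID; a success here while OpenCode still fails points to the configuration file.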

Conclusion

Start coding with the future, today.