What Is the Hugging Face Inference API

At its core, the Hugging Face Inference API is a service that lets you run machine learning models hosted on the Hugging Face Hub through straightforward API calls. It completely abstracts away the complexities of model deployment, like GPU management, server configuration, and scaling. Instead of provisioning your own servers, you send data to a model's endpoint and get predictions back.

To give you a clearer picture, here's a quick breakdown of what the API brings to the table.

Hugging Face Inference API At a Glance

This table summarizes the key features and benefits of using the Hugging Face Inference API for various development needs.

| Feature | Description | Primary Benefit |

|---|---|---|

| Serverless Inference | Run models via API calls without managing any servers, GPUs, or underlying infrastructure. | Zero Infrastructure Overhead: Frees up engineering time to focus on building features. |

| Vast Model Hub Access | Instantly use any of the 1,000,000+ models available on the Hugging Face Hub for various tasks. | Unmatched Flexibility: Easily swap models to find the best one for your specific use case. |

| Simple HTTP Interface | Interact with complex AI models using standard, well-documented HTTP requests. | Rapid Prototyping: Build and test AI-powered proofs-of-concept in minutes, not weeks. |

| Pay-Per-Use Pricing | You only pay for the compute time you use, making it cost-effective for experimentation and smaller loads. | Cost Efficiency: Avoids the high fixed costs of maintaining dedicated ML infrastructure. |

Ultimately, the API is designed to get you from concept to a functional AI feature with as little friction as possible.

Core Benefits for Developers

The API is clearly built with developer efficiency in mind, offering a few key advantages that make it a go-to for many projects.

- Zero Infrastructure Management: Forget provisioning GPUs, wrestling with CUDA drivers, or worrying about scaling servers. The API handles all that backend heavy lifting.

- Massive Model Selection: With direct access to the Hub, you can instantly switch between models for tasks like sentiment analysis, text generation, or image processing just by changing a parameter in your API call.

- Fast Prototyping: The sheer ease of use lets you build a proof-of-concept for an AI feature in a single afternoon.

Authenticating and Making Your First API Call

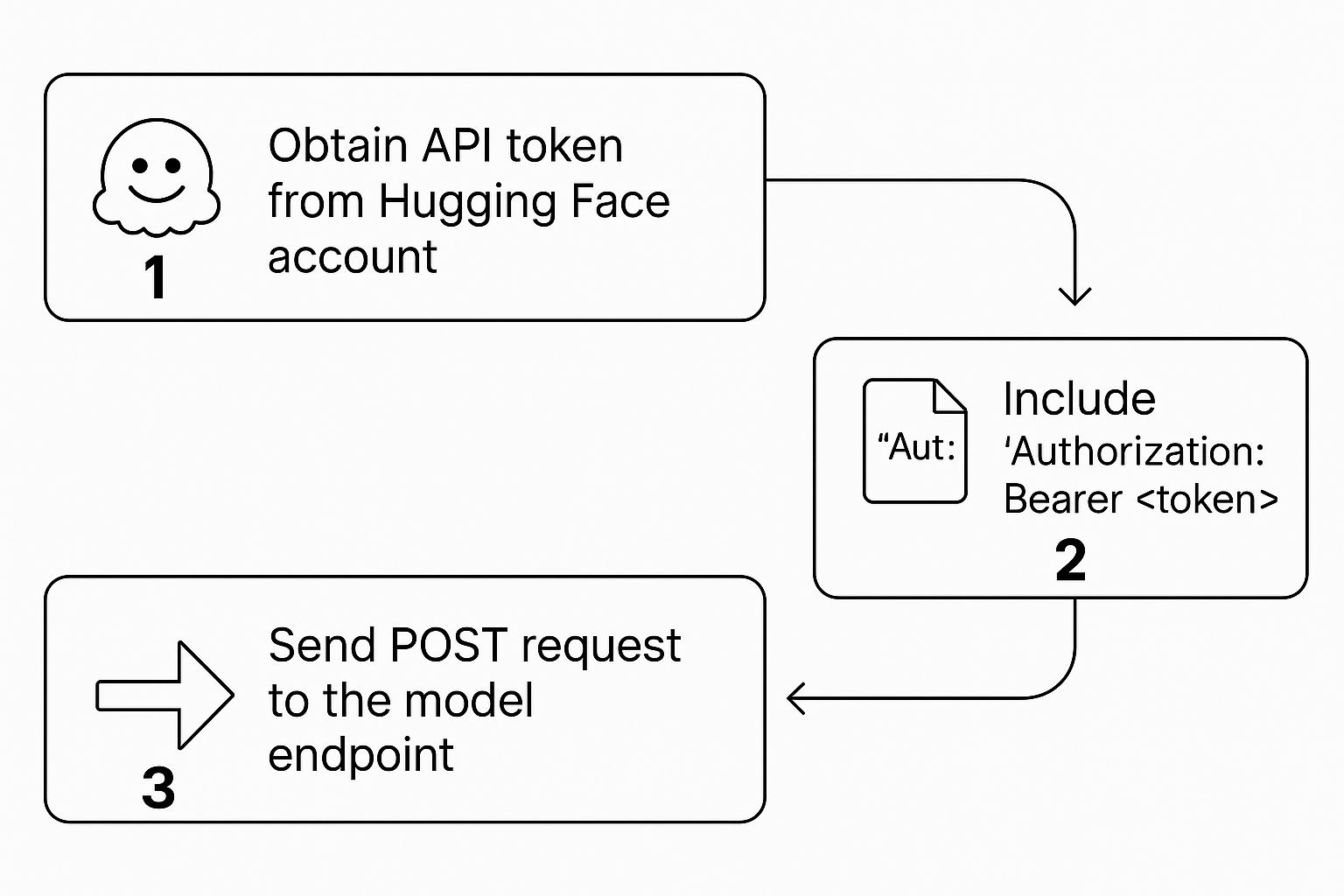

Authorization header of every request. This tells Hugging Face's servers that you are a legitimate user with permission to run the model you're calling. The process is a simple but crucial three-step dance: get the token, put it in the header, and make the call.

Once you've generated your token, it's all about structuring the request properly to ensure everything runs smoothly and securely.

Your First Python API Call

requests library. The key components are the model's specific API URL and a correctly formatted JSON payload with your input text. The Authorization header must use the "Bearer" scheme, which is standard for modern APIs. Simply prefix your token with Bearer —don't forget the space."YOUR_API_TOKEN" with your actual token from your Hugging Face account.import requests

import os

# Best practice: store your token in an environment variable

# For this example, we'll define it directly, but use os.getenv("HF_API_TOKEN") in production.

API_TOKEN = "YOUR_API_TOKEN"

API_URL = "https://api-inference.huggingface.co/models/distilbert/distilbert-base-uncased-finetuned-sst-2-english"

def query_model(payload):

headers = {"Authorization": f"Bearer {API_TOKEN}"}

response = requests.post(API_URL, headers=headers, json=payload)

response.raise_for_status() # Raise an exception for bad status codes

return response.json()

# Let's classify a sentence

data_payload = {

"inputs": "I love the new features in this software, it's amazing!"

}

try:

output = query_model(data_payload)

print(output)

# Expected output might look like: [[{'label': 'POSITIVE', 'score': 0.9998...}]]

except requests.exceptions.RequestException as e:

print(f"An error occurred: {e}")POSITIVE or NEGATIVE, along with a confidence score. This fundamental pattern applies to all sorts of tasks, from generating text to analyzing images; only the payload structure changes. Of course, when you get into more advanced models like video generators, the API interactions can get more complex, as you can see in this detailed Sora 2 API guide for 2025.Hardcoding your token is fine for a quick test, but it's a significant security risk in a real project. Never commit API keys to a Git repository. For anything beyond a simple script, use environment variables or a secrets management tool to keep your credentials safe.

Putting the Inference API to Work on Different AI Tasks

inputs for each model.Generating Creative Text

max_length to control the output.import requests

API_URL = "https://api-inference.huggingface.co/models/gpt2"

headers = {"Authorization": "Bearer YOUR_API_TOKEN"}

def query_text_generation(payload):

response = requests.post(API_URL, headers=headers, json=payload)

return response.json()

output = query_text_generation({

"inputs": "The future of AI in software development will be",

"parameters": {"max_length": 50, "temperature": 0.7}

})

print(output)

# Expected output: [{'generated_text': 'The future of AI in software development will be...'}]The response returns a clean JSON object with the generated text, making it easy to parse and integrate into your application.

Classifying Image Content

'rb') and pass that data in the data parameter of your request.import requests

API_URL = "https://api-inference.huggingface.co/models/google/vit-base-patch16-224"

headers = {"Authorization": "Bearer YOUR_API_TOKEN"}

def query_image_classification(filename):

with open(filename, "rb") as f:

data = f.read()

response = requests.post(API_URL, headers=headers, data=data)

return response.json()

# Make sure you have an image file (e.g., 'cat.jpg') in the same directory

try:

output = query_image_classification("cat.jpg")

print(output)

# Expected output: [{'score': 0.99..., 'label': 'Egyptian cat'}, {'score': 0.00..., 'label': 'tabby, tabby cat'}, ...]

except FileNotFoundError:

print("Error: 'cat.jpg' not found. Please provide a valid image file path.")Zero-Shot Text Classification

inputs (your text) and a parameters object containing a list of candidate_labels.// Example in JavaScript using fetch

async function queryZeroShot(data) {

const response = await fetch(

"https://api-inference.huggingface.co/models/facebook/bart-large-mnli",

{

headers: { Authorization: "Bearer YOUR_API_TOKEN" },

method: "POST",

body: JSON.stringify(data),

}

);

const result = await response.json();

return result;

}

queryZeroShot({

"inputs": "Our new feature launch was a massive success!",

"parameters": {"candidate_labels": ["marketing", "customer feedback", "technical issue"]}

}).then((response) => {

console.log(JSON.stringify(response));

// Expected output: {"sequence": "...", "labels": ["customer feedback", ...], "scores": [0.98..., ...]}

});Understanding Costs and Usage Tiers

The system is built around user tiers (Free, Pro, Team, Enterprise), each with a certain amount of monthly usage credits. Free users receive a small amount, while Pro and Team users get more. Once these credits are exhausted, you transition to a pay-as-you-go model, billed for inference requests and model runtime. While this is great for getting started, managing separate costs across multiple models and providers can quickly become a significant operational headache.

Simplifying Your Cost Management

From Direct Calls to Smart Routing

Optimizing for Production Performance

Building Resilience Beyond a Single Endpoint

This architecture delivers two critical benefits for any production system:

- Automatic Failover: If a primary provider is slow or unresponsive, EvoLink instantly reroutes the request to a healthy alternative, ensuring application stability.

- Load Balancing: During traffic spikes, requests are automatically distributed across multiple providers, preventing bottlenecks and keeping latency low.

By abstracting the provider infrastructure, you build resilience directly into your application.

From Direct Call to Unified Gateway

Here's a practical look at the difference in Python:

# Before: Direct API call to Hugging Face

# This creates a single point of failure.

import requests

HF_API_URL = "https://api-inference.huggingface.co/models/gpt2"

HF_TOKEN = "YOUR_HF_TOKEN"

def direct_hf_call(payload):

headers = {"Authorization": f"Bearer {HF_TOKEN}"}

response = requests.post(HF_API_URL, headers=headers, json=payload)

return response.json()# After: Calling the unified EvoLink API (OpenAI-compatible)

# Your application is now resilient with automatic failover and load balancing.

import requests

# EvoLink's unified API endpoint (OpenAI-compatible)

EVOLINK_API_URL = "https://api.evolink.ai/v1"

EVOLINK_TOKEN = "YOUR_EVOLINK_TOKEN"

def evolink_image_generation(prompt):

"""

Generate images using EvoLink's intelligent routing.

EvoLink automatically routes to the cheapest provider for your chosen model.

"""

headers = {"Authorization": f"Bearer {EVOLINK_TOKEN}"}

# Example: Using Seedream 4.0 for story-driven 4K image generation

payload = {

'model': 'doubao-seedream-4.0', # Or 'gpt-4o-image', 'nano-banana'

'prompt': prompt,

'size': '1024x1024'

}

response = requests.post(f"{EVOLINK_API_URL}/images/generations",

headers=headers, json=payload)

return response.json()

def evolink_video_generation(prompt):

"""

Generate videos using EvoLink's video models.

"""

headers = {"Authorization": f"Bearer {EVOLINK_TOKEN}"}

# Example: Using Sora 2 for 10-second video with audio

payload = {

'model': 'sora-2', # Or 'veo3-fast' for 8-second videos

'prompt': prompt,

'duration': 10

}

response = requests.post(f"{EVOLINK_API_URL}/videos/generations",

headers=headers, json=payload)

return response.json()With this simple change, you've effectively future-proofed your application against provider-specific issues while gaining access to production-grade image and video generation capabilities.

Common Questions and Practical Answers

How Should I Deal With Rate Limits?

Hitting a rate limit is a common issue. Your limit depends on your subscription tier, and exceeding it will cause your application to fail.

Several tactics can help:

- Batch Your Requests: Where supported, bundle multiple inputs into a single API call instead of sending hundreds of separate requests.

- Implement Exponential Backoff: When a request fails due to rate limiting, build a retry logic that waits progressively longer between attempts (e.g., 1s, 2s, 4s). This prevents spamming the API and gives it time to recover.

Can I Run My Private Models on the Inference API?

Authorization header. The critical detail is ensuring the account associated with the token has the necessary permissions to access the private model repository. Without proper permissions, you will receive an authentication error.What's the Best Practice for Managing Model Versions?

gpt2) defaults to the latest version on the main branch. This is fine for testing but can introduce breaking changes in production when a model author pushes an update. The professional approach is to pin your requests to a specific commit hash. Every model on the Hub has a Git-like commit history. Identify the exact version you've tested, grab its commit hash, and include that revision in your API call. This guarantees you are always using the same model version, ensuring consistent and predictable results.