GPT-5.2 is not a "swap the model string" upgrade. In production, this model pushes teams toward explicit engineering tradeoffs: context budgets, output budgets, latency variance, retries, and guardrails. If you hardcode it everywhere, you'll either overspend or violate SLOs.

This guide is deliberately practical: long-context patterns, schema constraints, async execution, cost envelopes, and rollout gates. We'll be explicit about what's confirmed and what's workload-dependent.

The Engineering Shift: Why This Model Changes "Default Architectures"

A lot of teams evaluate frontier models like they're libraries: upgrade the version, run tests, ship. That mindset breaks in production when your "library" is also your biggest source of variable latency and variable cost.

With this release, the critical change isn't "it's smarter." The change is that it makes long context and large outputs first-class, and OpenAI also exposes reasoning tokens as a concept with explicit billing and context implications.

That combination pushes production teams toward an operator's framing:

- You don't "call the model." You run a bounded execution with budgets, validation, and stop conditions.

- You don't measure "average latency." You manage distributions (p50/p95/p99), and you plan for tail amplification when prompts get large.

- You don't track "cost per request." You track cost per successful task, because retries and tool loops multiply the requests behind each completed task.
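To see why averages mislead, here is a minimal nearest-rank percentile helper in Python. The latency samples are invented for illustration: 90 fast calls, 8 slow ones, and 2 long-context outliers.

```python
import math
import statistics


def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples_ms:
        raise ValueError("no latency samples")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def latency_summary(samples_ms: list[float]) -> dict[str, float]:
    summary = {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}
    summary["mean"] = statistics.fmean(samples_ms)
    return summary


# Hypothetical samples: 90 fast calls, 8 slow calls, 2 long-context outliers.
samples = [800] * 90 + [3000] * 8 + [20000] * 2
print(latency_summary(samples))  # {'p50': 800, 'p95': 3000, 'p99': 20000, 'mean': 1360.0}
```

The mean of 1,360 ms looks healthy. The p99 of 20 seconds is what your slowest users actually experience.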

Currently Documented GPT-5.2 Limits

This section contains only specs you can point to without "benchmark blog hearsay."

Context Window, Output Limit, and Knowledge Cutoff

From OpenAI's model documentation for GPT-5.2:

- Context window: 400,000 tokens

- Max output tokens: 128,000

- Knowledge cutoff: August 31, 2025

These three numbers define your operational boundaries:

- 400k context makes it tempting to throw entire repositories into a single call. That works—until your tail latency and cost explode.

- 128k output makes it tempting to ask for multi-thousand-line outputs. That works—until you discover your system lacks cancellation.

- An August 31, 2025 knowledge cutoff means you can't assume the model knows about later events without retrieval or browsing.
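A pre-flight check turns the first two limits into code. This sketch makes three assumptions:

- Prompt and output share the 400k window. Reasoning tokens also occupy context, as the next section explains.
- prompt_tokens comes from your own tokenizer count.
- The function name is hypothetical.

```python
CONTEXT_WINDOW = 400_000  # tokens, from OpenAI's GPT-5.2 model documentation
MAX_OUTPUT = 128_000      # tokens


def clamp_output_budget(prompt_tokens: int, requested_output: int) -> int:
    """Largest output budget that respects both documented limits.

    Assumes prompt and output (visible plus reasoning) share the context window.
    """
    if prompt_tokens >= CONTEXT_WINDOW:
        raise ValueError(f"prompt of {prompt_tokens} tokens leaves no room for output")
    headroom = CONTEXT_WINDOW - prompt_tokens
    return min(requested_output, MAX_OUTPUT, headroom)


print(clamp_output_budget(100_000, 200_000))  # 128000: capped by max output
print(clamp_output_budget(350_000, 100_000))  # 50000: capped by remaining context
```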

Reasoning Tokens: The Hidden Variable You Must Budget

OpenAI explicitly states that reasoning tokens are not visible via the API, but they still occupy context window space and are included in billable output usage.

This is easy to miss and painful to learn late. Even if your application only prints a short answer, internal reasoning can increase output token accounting. In production, that means:

- Output cost can exceed "visible text cost"

- Context pressure can exceed "visible prompt + visible output"

- Budgeting needs to be conservative, especially for long-context tasks
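Because reasoning tokens are billed but never shown, it helps to log them separately. The sketch rests on these assumptions:

- It assumes an OpenAI Chat Completions-style usage object, in which completion_tokens already includes the count reported in completion_tokens_details.reasoning_tokens. Whether a given gateway forwards that field is worth verifying.
- The $14/1M output price comes from the pricing section.
- The token counts in the example are invented.

```python
OUTPUT_PRICE_PER_M = 14.00  # USD per 1M output tokens for gpt-5.2


def output_cost_breakdown(usage: dict) -> dict:
    """Split billed output tokens into visible text vs. hidden reasoning."""
    billed = usage["completion_tokens"]  # assumed to include reasoning tokens
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    visible = billed - reasoning
    return {
        "visible_tokens": visible,
        "reasoning_tokens": reasoning,
        "visible_cost_usd": visible * OUTPUT_PRICE_PER_M / 1_000_000,
        "billed_cost_usd": billed * OUTPUT_PRICE_PER_M / 1_000_000,
    }


# Invented numbers: a short answer backed by a lot of hidden reasoning.
usage = {"prompt_tokens": 2_000, "completion_tokens": 4_500,
         "completion_tokens_details": {"reasoning_tokens": 4_000}}
print(output_cost_breakdown(usage))
```

Here the visible answer suggests $0.007 of output. The actual bill is $0.063, nine times more.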

Long-Running Generations Are Real (Design for Async)

OpenAI notes that some complex generations (e.g., spreadsheets or presentations) can take many minutes.

You don't need a "TTFT chart" to make this actionable. "Many minutes" is enough to require:

- Async job orchestration

- Progress reporting and partial outputs

- Cancellation

- Idempotency keys

- Timeouts per route
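One way to cover these five requirements with Python's standard asyncio is sketched below. It is illustrative rather than production-ready:

- The route names and timeout values are hypothetical.
- An in-memory dict stands in for a real job store.
- fake_generation stands in for the model call.

Re-submitting the same idempotency key returns the existing job instead of starting, and paying for, a second one.

```python
import asyncio
from typing import Awaitable, Callable

ROUTE_TIMEOUTS_S = {"chat": 30.0, "report": 900.0}  # hypothetical per-route timeouts

Reporter = Callable[[str], None]
Work = Callable[[Reporter], Awaitable[str]]


class JobRunner:
    """Runs long generations as background tasks keyed by idempotency key."""

    def __init__(self) -> None:
        self.jobs: dict[str, asyncio.Task] = {}
        self.status: dict[str, str] = {}

    def submit(self, key: str, route: str, work: Work) -> asyncio.Task:
        if key in self.jobs:  # idempotent: same key, same job
            return self.jobs[key]
        timeout = ROUTE_TIMEOUTS_S[route]  # unknown routes fail fast, before any work starts

        def report(msg: str) -> None:  # progress / partial-output hook for the worker
            self.status[key] = msg

        async def run() -> str:
            self.status[key] = "running"
            try:
                result = await asyncio.wait_for(work(report), timeout)
            except asyncio.TimeoutError:
                self.status[key] = "timed_out"
                raise
            except asyncio.CancelledError:
                self.status[key] = "cancelled"
                raise
            self.status[key] = "done"
            return result

        self.jobs[key] = asyncio.create_task(run())
        return self.jobs[key]

    def cancel(self, key: str) -> bool:
        task = self.jobs.get(key)
        return task.cancel() if task else False


async def fake_generation(report: Reporter) -> str:
    for step in range(1, 4):
        report(f"step {step}/3")  # a real worker might stream partial output here
        await asyncio.sleep(0.01)
    return "final document"


async def main() -> None:
    runner = JobRunner()
    first = runner.submit("req-42", "report", fake_generation)
    again = runner.submit("req-42", "report", fake_generation)
    print(first is again, await first, runner.status["req-42"])  # True final document done


asyncio.run(main())
```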

Long-Context Systems: Design Patterns That Keep Production Predictable

A 400k context window expands what's possible, but it doesn't remove the laws of production systems. "Large context" behaves like "large payload" everywhere else.

Don't Treat Context as a Dump. Treat It as a Budget.

Long context is not "free accuracy." It's a trade: more evidence can improve correctness, but more tokens increase variability.

A practical approach is to allocate token budgets the way you allocate CPU/memory:

- System + policy prefix: Fixed and cacheable

- Retrieved evidence: Bounded and ranked

- Task instructions: Short and precise

- Tool outputs: Summarized before reinjection

- User history: Windowed, not infinite
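A minimal sketch of that allocation in Python follows. It is built on stand-ins:

- The caps are invented numbers.
- count_tokens is a crude whitespace count standing in for a real tokenizer.

Each section keeps items in the order given and stops at the first item that would overflow its cap. Pass evidence best-first and history newest-first.

```python
# Hypothetical per-section caps, in tokens.
CAPS = {"system": 2_000, "evidence": 60_000, "instructions": 1_000,
        "tool_outputs": 8_000, "history": 12_000}


def count_tokens(text: str) -> int:
    return len(text.split())  # rough stand-in; use your model's tokenizer in practice


def allocate_context(sections: dict[str, list[str]], caps: dict[str, int]) -> dict[str, list[str]]:
    """Fill each section up to its own cap so no section can starve the others."""
    packed = {}
    for name, items in sections.items():
        budget = caps[name]
        kept = []
        for item in items:
            cost = count_tokens(item)
            if cost > budget:
                break  # stop rather than skip ahead, so ranking order is preserved
            kept.append(item)
            budget -= cost
        packed[name] = kept
    return packed


# History is passed newest-first, so the window keeps the most recent turns.
demo = allocate_context(
    {"history": ["latest user turn here", "older turn", "oldest turn of all"]},
    {"history": 6},
)
print(demo)  # {'history': ['latest user turn here', 'older turn']}
```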

Retrieval Discipline Beats Raw Context Length

If you have RAG, the winning move is not "stuff more." It's "stuff better."

Production recommendations:

- Rank by utility, not by recency

- Keep evidence atomic: short chunks that answer one question

- Always include source identifiers (doc id, timestamp)

- Summarize evidence into task-oriented bullets
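Those rules fit in a few lines of Python. The Chunk fields and the utility score are assumptions; a reranker score is one possible source. The sketch shows three of the rules: ordering comes from utility, every bullet carries its source, and the count is capped.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    doc_id: str
    timestamp: str
    text: str       # short, answers one question
    utility: float  # e.g. a reranker score for this task (assumed, not prescribed)


def build_evidence_block(chunks: list[Chunk], max_chunks: int) -> str:
    """Rank by utility rather than recency, cap the count, and keep source ids on every line."""
    ranked = sorted(chunks, key=lambda c: c.utility, reverse=True)[:max_chunks]
    return "\n".join(f"- [{c.doc_id} @ {c.timestamp}] {c.text}" for c in ranked)


chunks = [
    Chunk("kb-7", "2025-06-01", "Refunds are issued within 5 business days.", 0.4),
    Chunk("kb-2", "2024-01-10", "Refunds require the original order ID.", 0.9),
    Chunk("kb-9", "2025-07-03", "Office hours changed in July.", 0.1),
]
print(build_evidence_block(chunks, max_chunks=2))
```

The oldest document ranks first here because it is the most useful for the task, not the most recent.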

The "Two-Pass Long-Context" Pattern

For large corpora (ticket histories, transcripts, repo diffs), use a two-pass design:

- Map phase: Chunk → summarize into structured units

- Reduce phase: Combine summaries → answer with bounded output

This pattern reduces tail latency, improves debuggability, and makes it easier to cache intermediate summaries.
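Here is a skeleton of the two-pass pattern. It assumes summarize and answer are your own functions that wrap bounded model calls. Two simplifications apply:

- Fixed-size character chunking: real splits usually follow ticket, file, or speaker boundaries.
- A dict cache keyed by each chunk's SHA-256 stands in for whatever cache you already run.

```python
import hashlib
from typing import Callable, Optional


def chunk_text(text: str, max_chars: int) -> list[str]:
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


def two_pass(text: str,
             summarize: Callable[[str], str],
             answer: Callable[[list[str]], str],
             max_chars: int = 8_000,
             cache: Optional[dict[str, str]] = None) -> str:
    """Map: summarize each chunk (cached by content hash). Reduce: answer from the summaries."""
    cache = {} if cache is None else cache
    summaries = []
    for chunk in chunk_text(text, max_chars):
        key = hashlib.sha256(chunk.encode("utf-8")).hexdigest()
        if key not in cache:
            cache[key] = summarize(chunk)  # one bounded call per unseen chunk
        summaries.append(cache[key])
    return answer(summaries)  # one bounded reduce call


calls = []


def fake_summarize(chunk: str) -> str:
    calls.append(chunk)
    return chunk[0].upper()


result = two_pass("aaaabbbbaaaa", fake_summarize, "".join, max_chars=4)
print(result, len(calls))  # ABA 2  (the repeated chunk hits the cache)
```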

Reliability Reality: Schema, Tools, Drift, and the Failure Taxonomy

Many "model incidents" are actually contract incidents. The model did something plausible, but your system needed something specific.

Treat Structure as a Contract, Not a Suggestion

For tasks like extraction, routing decisions, or tool invocation:

- Use JSON schema (or strict key/value formats)

- Validate every output before using it

- Implement a single "repair pass" if validation fails

A reliable pattern:

- Generate JSON with strict instructions

- Validate against schema

- If invalid, run one repair prompt

- If still invalid, fail gracefully
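Here is that four-step loop as a stdlib-only Python sketch. It makes three assumptions:

- SCHEMA is a hypothetical routing contract, checked by hand.
- In a real system, a JSON Schema validator such as the jsonschema package would replace validate.
- call_model is any function that sends a prompt and returns text.

```python
import json
from typing import Callable, Optional

# Hypothetical contract for a routing decision.
SCHEMA = {"intent": str, "priority": int, "needs_human": bool}


def validate(raw: str) -> tuple[Optional[dict], Optional[str]]:
    """Return (data, None) if raw matches SCHEMA, else (None, reason)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"invalid JSON: {exc}"
    if not isinstance(data, dict):
        return None, "top-level value must be an object"
    for key, expected in SCHEMA.items():
        if key not in data:
            return None, f"missing key {key!r}"
        value = data[key]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            return None, f"{key!r} should be {expected.__name__}"
    return data, None


def generate_validated(call_model: Callable[[str], str], prompt: str) -> Optional[dict]:
    raw = call_model(prompt)
    data, error = validate(raw)
    if data is not None:
        return data
    # Exactly one repair pass: show the model its own output and the validation error.
    repair_prompt = (f"{prompt}\n\nYour previous output:\n{raw}\n"
                     f"It failed validation: {error}. Return only corrected JSON.")
    data, _ = validate(call_model(repair_prompt))
    return data  # None means fail gracefully: fall back to a default route or a human queue


replies = iter(['{"intent": "refund"}',
                '{"intent": "refund", "priority": 2, "needs_human": false}'])
print(generate_validated(lambda _prompt: next(replies), "Classify: my refund never arrived"))
```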

Tool Safety: Deterministic Wrappers, Not "Model Magic"

Even if GPT-5.2 is strong at planning, tool safety must be system-enforced:

- Allowlist tools by route

- Validate parameters and ranges

- Add idempotency keys

- Sandbox side-effect tools

- Log tool calls for audit
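A deterministic wrapper covering these rules might look like the sketch below. Everything in it is a stand-in:

- The routes, tools, refund ceiling, and in-memory idempotency store are hypothetical.
- The tool bodies are stubs marking where sandboxed side effects would go.

```python
import json
import logging

audit_log = logging.getLogger("tool_audit")


def lookup_order(order_id: str) -> str:
    return f"order {order_id}: shipped"  # stub read-only tool


def issue_refund(order_id: str, amount_usd: float) -> str:
    return f"refunded {amount_usd:.2f} on {order_id}"  # stub for a sandboxed side effect


TOOLS = {"lookup_order": lookup_order, "issue_refund": issue_refund}
ALLOWED = {"support": {"lookup_order"}, "billing": {"lookup_order", "issue_refund"}}
MAX_REFUND_USD = 500.0
_results_by_key: dict[str, str] = {}  # idempotency key -> first result


def call_tool(route: str, name: str, args: dict, idempotency_key: str) -> str:
    if name not in ALLOWED.get(route, set()):
        raise PermissionError(f"{name!r} is not allowed on route {route!r}")
    if name == "issue_refund":
        amount = args.get("amount_usd")
        if (isinstance(amount, bool) or not isinstance(amount, (int, float))
                or not 0 < amount <= MAX_REFUND_USD):
            raise ValueError(f"amount_usd out of range: {amount!r}")
    if idempotency_key in _results_by_key:  # a retried call must not repeat the side effect
        return _results_by_key[idempotency_key]
    result = TOOLS[name](**args)
    _results_by_key[idempotency_key] = result
    audit_log.info("tool_call %s", json.dumps(
        {"route": route, "tool": name, "args": args, "key": idempotency_key}))
    return result
```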

Benchmarks & Tradeoffs: SWE-bench Deltas You Can Cite

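OpenAI reports the following SWE-bench results for GPT-5.2 Thinking and its predecessor, GPT-5.1 Thinking:

| Benchmark | GPT-5.2 Thinking | GPT-5.1 Thinking | Delta |
|---|---|---|---|
| SWE-Bench Pro (public) | 55.6% | 50.8% | +4.8 pts |
| SWE-bench Verified | 80.0% | 76.3% | +3.7 pts |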

Interpretation for Production Code Workflows

The delta is meaningful enough to justify evaluation for coding agents and code assistance workflows. But SWE-bench improvements don't remove the need for tests, gates, and rollback.

Pricing: Unit Economics, Caching, and Budget Envelopes

When teams say "the model is expensive," they usually mean three things went wrong: they didn't cap output, they didn't cache stable prefixes, and they let retries multiply their usage.

Official Pricing

For gpt-5.2, OpenAI's pricing shows:

- Input: $1.75 / 1M tokens

- Cached input: $0.175 / 1M tokens (90% discount)

- Output: $14.00 / 1M tokens
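To make caching concrete, here is the per-request arithmetic at these list prices. A few notes on the inputs:

- The token counts are invented.
- In OpenAI's Chat Completions usage object, the cached portion of the prompt is reported as prompt_tokens_details.cached_tokens.
- The output count should include reasoning tokens.

```python
PRICE_PER_M = {"input": 1.75, "cached_input": 0.175, "output": 14.00}  # USD, gpt-5.2


def request_cost(input_tokens: int, cached_tokens: int, output_tokens: int) -> float:
    """USD cost of one request; cached_tokens is the part of input served from cache."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (uncached * PRICE_PER_M["input"]
            + cached_tokens * PRICE_PER_M["cached_input"]
            + output_tokens * PRICE_PER_M["output"]) / 1_000_000


# 100k-token prompt with an 80k-token stable prefix, 2k output tokens.
with_cache = request_cost(100_000, 80_000, 2_000)
without_cache = request_cost(100_000, 0, 2_000)
print(f"${with_cache:.3f} vs ${without_cache:.3f}")  # $0.077 vs $0.203
```

In this example, output is already over a third of the cached request's cost. That is why output caps matter as much as caching.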

Practical Cost Controls

- Cache stable prefixes (system prompts, policies, schemas, tool descriptions)

- Cap output and retries (reasoning tokens bill as output)

- Summarize tool outputs before reinjecting

- Track cost per successful task, not cost per request
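The gap between the two metrics is easy to see with a handful of invented attempts: three tasks, six billed requests, two successes. What counts as "succeeded" is your own definition, for example a schema pass, green tests, or user acceptance.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    task_id: str
    cost_usd: float
    succeeded: bool  # your definition of success for this route


def cost_metrics(attempts: list[Attempt]) -> dict[str, float]:
    if not attempts:
        raise ValueError("no attempts recorded")
    total = sum(a.cost_usd for a in attempts)
    successes = {a.task_id for a in attempts if a.succeeded}
    return {
        "cost_per_request": total / len(attempts),
        "cost_per_successful_task": total / len(successes) if successes else float("inf"),
    }


# Invented log: t1 needs a repair pass, t2 succeeds first try, t3 never succeeds.
attempts = [
    Attempt("t1", 0.02, False), Attempt("t1", 0.03, True),
    Attempt("t2", 0.02, True),
    Attempt("t3", 0.02, False), Attempt("t3", 0.02, False), Attempt("t3", 0.02, False),
]
m = cost_metrics(attempts)
print(f"per request ${m['cost_per_request']:.4f}, "
      f"per successful task ${m['cost_per_successful_task']:.4f}")
```

Per request looks like about $0.02. Per successful task it is $0.065, three times higher, because the failed attempts still bill.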

EvoLink: Unified API + Lower Costs

EvoLink helps teams adopt this model with two concrete benefits: unified integration and lower effective cost.

Unified API: Integrate Once, Evolve Across Models

Instead of binding your application to one provider SDK, EvoLink gives you:

- One base_url

- One authentication surface

- Consistent interface across models

This keeps GPT-5.2 adoption from turning into a dependency trap.

Lower Effective Cost: Wholesale Pricing + Simplified Billing

Unit economics can be challenging at scale. EvoLink's positioning:

- Consolidate usage through a single gateway

- Benefit from wholesale/volume pricing dynamics

- Simplify billing and cost attribution across teams

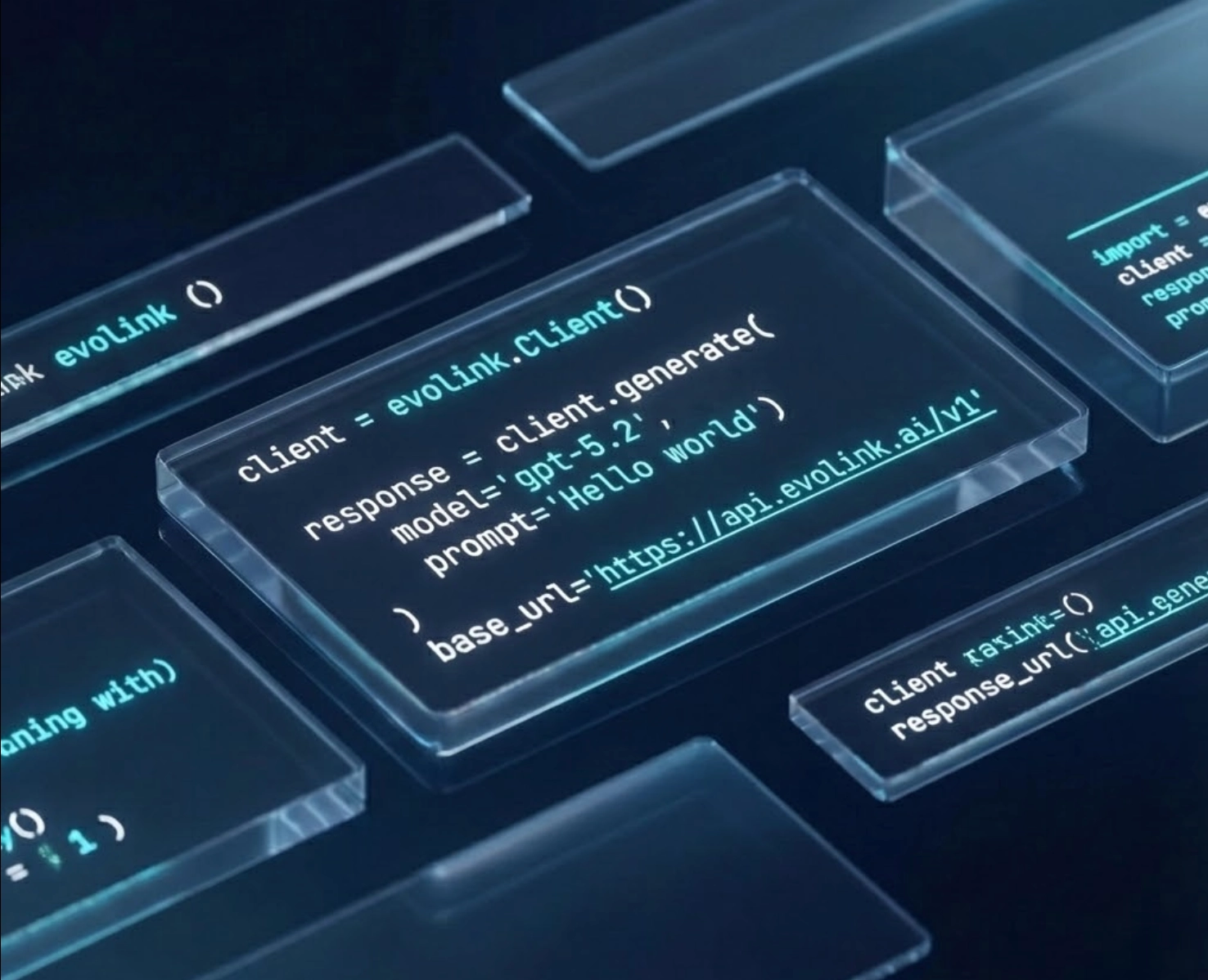

Implementation: Using EvoLink

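Python: GPT-5.2 via EvoLink

```python
import requests

url = "https://api.evolink.ai/v1/chat/completions"

payload = {
    "model": "gpt-5.2",
    "messages": [
        {
            "role": "user",
            "content": "Hello, introduce the new features of GPT-5.2",
        }
    ],
}

headers = {
    "Authorization": "Bearer <token>",  # replace <token> with your EvoLink API key
    "Content-Type": "application/json",
}

# requests waits indefinitely by default; long generations need an explicit, per-route timeout.
response = requests.post(url, json=payload, headers=headers, timeout=300)
response.raise_for_status()  # raise on 4xx/5xx instead of printing an error body as if it succeeded
print(response.text)
```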

cURL: GPT-5.2 via EvoLink

```bash
curl --request POST \
  --url https://api.evolink.ai/v1/chat/completions \
  --header 'Authorization: Bearer <token>' \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "gpt-5.2",
    "messages": [
      {
        "role": "user",
        "content": "Hello, introduce the new features of GPT-5.2"
      }
    ]
  }'
```

Decision Matrix: When GPT-5.2 Is Worth It

| Workload | Latency Sensitivity | Failure Cost | Recommendation |
|---|---|---|---|
| Classification / Tagging | High | Low | Use faster/cheaper tier |
| Customer-facing Chat | High | Medium | Fast tier default; escalate to GPT-5.2 |
| Long-context Synthesis | Medium | Medium/High | GPT-5.2 with compaction + caps |
| Tool-driven Workflows | Medium | High | GPT-5.2 with deterministic tools |
| High-stakes Deliverables | Low | High | GPT-5.2; async jobs for long work |
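The matrix can also live in code as a routing table. In this sketch:

- The workload labels come from the table.
- "fast-tier-model" is a hypothetical placeholder for whichever cheaper model you use.
- The escalation signal is left to the caller, for example a failed validation or a user asking for more depth.

```python
from typing import NamedTuple

FAST_MODEL = "fast-tier-model"  # hypothetical placeholder for a cheaper tier
GPT_52 = "gpt-5.2"


class Route(NamedTuple):
    model: str
    run_async: bool  # long or high-stakes work goes through a job queue


MATRIX = {
    "classification": Route(FAST_MODEL, False),
    "customer_chat": Route(FAST_MODEL, False),
    "long_context_synthesis": Route(GPT_52, False),
    "tool_workflow": Route(GPT_52, False),
    "high_stakes": Route(GPT_52, True),
}


def pick_route(workload: str, escalate: bool = False) -> Route:
    """Look up the default route; customer chat escalates to GPT-5.2 when asked."""
    if workload == "customer_chat" and escalate:
        return Route(GPT_52, False)
    return MATRIX[workload]


print(pick_route("customer_chat"), pick_route("customer_chat", escalate=True))
```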

Production Rollout Checklist

Observability & Budgets

- Log: prompt_tokens, output_tokens, retries, tool_calls, schema_pass

- Track: p50/p95/p99 latency, timeout_rate, cancel_rate

- Add: cost per successful task (by route)

- Cap: max output tokens; retry budget; tool-call limits

- Implement: idempotency keys for retriable operations

Reliability Gates

- Schema validation on every structured output

- One repair pass on schema failure

- Loop detection for tool workflows

- State compaction for long conversations
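Loop detection can be as simple as counting calls. This hypothetical LoopGuard does two things, and both thresholds are placeholders:

- It enforces a total tool-call limit per task.
- It stops the loop when the same tool is called with identical arguments more than a set number of times.

```python
import json
from collections import Counter


class ToolLoopError(RuntimeError):
    pass


class LoopGuard:
    """Per-task guard: caps total tool calls and repeated identical calls."""

    def __init__(self, max_calls: int = 20, max_repeats: int = 3) -> None:
        self.max_calls = max_calls
        self.max_repeats = max_repeats
        self.calls = 0
        self.seen: Counter = Counter()

    def check(self, tool: str, args: dict) -> None:
        """Call before executing each tool call; raises ToolLoopError to stop the loop."""
        self.calls += 1
        if self.calls > self.max_calls:
            raise ToolLoopError(f"tool-call limit of {self.max_calls} exceeded")
        # Canonical JSON, so a different argument order does not hide a repeat.
        signature = (tool, json.dumps(args, sort_keys=True))
        self.seen[signature] += 1
        if self.seen[signature] > self.max_repeats:
            raise ToolLoopError(f"{tool} called {self.seen[signature]} times with identical args")


guard = LoopGuard(max_calls=10, max_repeats=2)
guard.check("search", {"q": "refund policy", "k": 5})
guard.check("search", {"k": 5, "q": "refund policy"})  # same call, different key order
try:
    guard.check("search", {"q": "refund policy", "k": 5})
except ToolLoopError as exc:
    print("stopped:", exc)  # stopped: search called 3 times with identical args
```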

Rollout Plan

- Shadow traffic and compare success/cost/latency

- Gradual ramp: 1% → 5% → 25% → 50% → 100%

- Rollback triggers: p95 breach, schema failures spike, cost/task spike

- Runbooks: timeouts, rate limits, partial outages
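Two parts of this plan benefit from code: stable cohort assignment during the ramp, and explicit rollback triggers. In the sketch below:

- Hash-based bucketing keeps each user in the cohort as the percentage grows.
- The slack multipliers are invented thresholds, to be tuned against your own baseline.

```python
import hashlib

RAMP_PERCENTS = [1, 5, 25, 50, 100]


def in_rollout(user_id: str, percent: int) -> bool:
    """Stable bucketing: the same user always lands in the same bucket (0-99)."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < percent


def rollback_reasons(baseline: dict, candidate: dict,
                     p95_slack: float = 1.2,
                     schema_fail_slack: float = 2.0,
                     cost_slack: float = 1.3) -> list[str]:
    """Compare candidate metrics with the baseline; any returned reason means roll back."""
    reasons = []
    if candidate["p95_ms"] > baseline["p95_ms"] * p95_slack:
        reasons.append("p95 breach")
    if candidate["schema_fail_rate"] > baseline["schema_fail_rate"] * schema_fail_slack:
        reasons.append("schema failure spike")
    if candidate["cost_per_task"] > baseline["cost_per_task"] * cost_slack:
        reasons.append("cost/task spike")
    return reasons


baseline = {"p95_ms": 4_000, "schema_fail_rate": 0.01, "cost_per_task": 0.05}
candidate = {"p95_ms": 6_000, "schema_fail_rate": 0.015, "cost_per_task": 0.06}
print(rollback_reasons(baseline, candidate))  # ['p95 breach']
```

With a baseline schema failure rate of zero, any failure trips the trigger. An absolute floor on that threshold is a reasonable addition.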

FAQ

What is the GPT-5.2 context window?

400,000 tokens. Hidden reasoning tokens also occupy this window.

What is the GPT-5.2 max output?

128,000 tokens.

What is GPT-5.2 pricing?

$1.75/1M input, $0.175/1M cached input (90% discount), $14/1M output.

Are reasoning tokens billed?

Yes. Reasoning tokens are not visible in the API response, but they occupy context window space and are billed as output tokens.

Does OpenAI provide a universal TTFT for GPT-5.2?

Not as a single number applicable across workloads. OpenAI does note complex generations can take many minutes.

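Does GPT-5.2 have published SWE-bench deltas?

Yes. OpenAI reports the following for GPT-5.2 Thinking:

- SWE-Bench Pro (public): 55.6%, up from 50.8% for GPT-5.1 Thinking
- SWE-bench Verified: 80.0%, up from 76.3%

How do I get started with GPT-5.2 on EvoLink?

Send a chat completions request to https://api.evolink.ai/v1/chat/completions with "model": "gpt-5.2" and your EvoLink API key as a Bearer token. The Python and cURL examples above show the full request.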

Conclusion

From an operator’s perspective, GPT-5.2 is best treated as a bounded execution engine with budgets and contracts. Use EvoLink when you want a unified API surface and cheaper effective pricing as you scale usage across services.

The future of production AI is not about finding the one "best" model, but about building a flexible, intelligent, and cost-aware system that routes tasks to the right model for the job.