What's confirmed vs. what's just rumor

A quick reality-check table

| Topic | What we can cite today | What's still uncertain | Why you should care |
|---|---|---|---|
| Release window | "Expected" in mid-February (reporting via The Information) | Exact date/time, staged rollout, regional availability | Impacts launch planning + on-call readiness [DeepSeek to launch new AI model focused on coding in February, The Information reports, Reuters] |
| Primary focus | Strong coding capabilities + handling very long code prompts | Benchmarks, real SWE workflows, tool-use behavior | Determines whether it replaces your current coding model [DeepSeek to launch new AI model focused on coding in February, The Information reports, Reuters] |
| Performance claims | "Internal tests suggested" it could outperform some rivals | Independent verification, robustness, regression profile | You'll want reproducible evals before switching [DeepSeek to launch new AI model focused on coding in February, The Information reports, Reuters] |
| Social proof | Reddit is actively discussing V4 timing + expectations | Many posts are second-hand summaries | Useful for "what devs want," not for truth [r/LocalLLaMA on Reddit: DeepSeek V4 Coming] |

Why DeepSeek V4 is trending on Reddit (and what devs actually want)

- Repo-scale context, not toy snippets: The Reuters report highlights breakthroughs in handling "extremely long coding prompts," which maps directly to day-to-day work: large diffs, multi-file refactors, migrations, and "explain this legacy module" tasks. (DeepSeek to launch new AI model focused on coding in February, The Information reports | Reuters)

- Switching costs are now the bottleneck: Most teams can try a new model in an afternoon. The hard part is everything around the call: auth, rate limits, request/response quirks, streaming differences, tool calling formats, cost accounting, and fallbacks. That's why "gateway / router" patterns keep coming up in infra circles.

- The "OpenAI-compatible" promise is helpful, but incomplete: Even if two providers claim OpenAI compatibility, production differences often show up in tool calling, structured outputs, error semantics, and usage reporting. That mismatch is exactly where teams burn time during "simple" migrations.
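
To make the usage-reporting mismatch concrete, here is a minimal sketch of a normalizer that maps differently shaped `usage` payloads onto one internal type. The `Usage` class, the `normalize_usage` helper, and the alternate `input_tokens` / `output_tokens` field names are illustrative assumptions, not a description of any specific provider's response, so check each provider's docs before relying on them.

```python
from dataclasses import dataclass
from typing import Any, Mapping, Optional


@dataclass(frozen=True)
class Usage:
    """Internal, provider-independent token accounting (hypothetical type)."""
    prompt_tokens: int
    completion_tokens: int

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens


def normalize_usage(response: Mapping[str, Any]) -> Optional[Usage]:
    """Return normalized usage, or None if the provider did not report it."""
    usage = response.get("usage")
    if not isinstance(usage, Mapping):
        return None  # usage block missing entirely
    # Accept OpenAI-style names first, then an assumed alternate naming.
    prompt = usage.get("prompt_tokens", usage.get("input_tokens"))
    completion = usage.get("completion_tokens", usage.get("output_tokens"))
    if prompt is None or completion is None:
        return None  # partial reporting is treated as "unknown", not as zero
    return Usage(int(prompt), int(completion))
```

Returning `None` instead of zeros keeps "the provider did not tell us" distinct from "the request was free," which matters once these numbers feed cost dashboards.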

How to prepare for DeepSeek V4 before it launches (practical checklist)

You don't need the model to be released to get ready. You need a plan that reduces adoption to a configuration change.

1) Put an LLM Gateway / Router in front of your app

Minimum capabilities to require:

- Per-request routing (by task type: "unit tests", "refactor", "chat", "summarize logs")

- Fallbacks (provider outage, rate limit, degraded latency)

- Observability (latency, error rate, tokens, $ cost)

- Prompt/version control (so you can roll back quickly)
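
The routing and fallback requirements above can be sketched in a few lines. This is a toy illustration under stated assumptions, not a production gateway: `Router`, `ProviderError`, and the idea that each provider is a plain callable taking a payload dict are all hypothetical names chosen for the example. Observability and prompt versioning are left out.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical provider interface: takes a request payload, returns a response dict.
Provider = Callable[[dict], dict]


class ProviderError(Exception):
    """Raised by a provider callable on outage, rate limit, or timeout."""


class Router:
    def __init__(
        self,
        providers: Dict[str, Provider],
        routes: Dict[str, Sequence[str]],
        default: Sequence[str],
    ) -> None:
        self.providers = providers
        self.routes = routes      # task type -> ordered provider names
        self.default = default    # order used for unknown task types

    def complete(self, task_type: str, payload: dict) -> dict:
        """Try each provider configured for the task type, in order."""
        errors: List[str] = []
        for name in self.routes.get(task_type, self.default):
            try:
                response = self.providers[name](payload)
                return {"provider": name, "response": response}
            except ProviderError as exc:
                errors.append(f"{name}: {exc}")  # record and fall through
        raise ProviderError("all providers failed: " + "; ".join(errors))
```

With this shape, adopting a new model means adding one entry to `providers` and editing `routes`, which is the "configuration change" the checklist is aiming for.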

2) Define a "V4 readiness" eval set (small, ruthless, repeatable)

- One real bug ticket your team struggled with

- A multi-file refactor with tests

- A "read this module + propose safe changes" task

- A long-context retrieval scenario (docs + code + config)

3) Decide what "better" means (before you test)

Pick 3–5 acceptance metrics:

- Patch compiles + tests pass (yes/no)

- Time-to-first-correct PR

- Hallucination rate on API usage

- Token/cost per resolved issue

- Latency p95 for your typical prompt size
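
A few of these metrics can be computed directly from per-run records. The following is a minimal sketch, assuming you log one record per eval run; `RunResult` and `summarize` are hypothetical names, and p95 here uses the nearest-rank method, which is one of several common percentile definitions.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass(frozen=True)
class RunResult:
    tests_passed: bool   # patch compiled and tests passed
    latency_s: float     # end-to-end request latency in seconds
    cost_usd: float      # cost attributed to this run


def p95(values: Sequence[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return ordered[rank - 1]


def summarize(results: Sequence[RunResult]) -> Dict[str, Optional[float]]:
    if not results:
        raise ValueError("need at least one run to summarize")
    resolved = sum(1 for r in results if r.tests_passed)
    total_cost = sum(r.cost_usd for r in results)
    return {
        "pass_rate": resolved / len(results),
        "latency_p95_s": p95([r.latency_s for r in results]),
        # Undefined when nothing was resolved; report None rather than infinity.
        "cost_per_resolved_usd": total_cost / resolved if resolved else None,
    }
```

Note that cost per resolved issue divides total spend, including failed runs, by the number of successes, so a cheap model that rarely succeeds can still come out more expensive.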

A lightweight integration template (OpenAI-style, model-agnostic)

    # Pseudocode: keep your app stable; swap providers/models behind a gateway.
    payload = {
        "model": "deepseek-v4",  # placeholder
        "messages": [
            {"role": "system", "content": "You are a coding assistant. Prefer small diffs and add tests."},
            {"role": "user", "content": "Refactor this function and add unit tests..."},
        ],
        "temperature": 0.2,
    }
    resp = llm_client.chat_completions(payload)  # your internal abstraction

What we'll do on the EvoCode side

"Watch list" for the launch week (what to monitor in real time)

| Signal to watch | Why it matters | What to do immediately |
|---|---|---|
| Official model identifier(s) + API docs | Prevents brittle assumptions | Update router config + contracts |
| Context limits actually exposed by providers | Long-prompt claims only help if you can use them | Add automatic prompt sizing + chunking |
| Rate limits / capacity | Launch week often means throttling | Turn on fallbacks + queueing |
| Pricing and token accounting fields | Needed for budget & regression tracking | Compare cost-per-task vs your baseline |
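
For the "automatic prompt sizing + chunking" action in the table, a rough sketch could look like the following. The four-characters-per-token estimate is a loose heuristic assumed here for illustration only; `estimate_tokens` and `chunk_by_lines` are hypothetical helpers, and a real implementation should count tokens with the provider's own tokenizer once it is available.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Rough token estimate (assumption: about 4 characters per token)."""
    return (len(text) + 3) // 4  # round up so short lines are not counted as 0


def chunk_by_lines(text: str, max_tokens: int) -> List[str]:
    """Split text on line boundaries so each chunk stays within the budget.

    A single line larger than the budget is emitted as its own oversized
    chunk rather than being cut mid-line.
    """
    chunks: List[str] = []
    current: List[str] = []
    current_tokens = 0
    for line in text.splitlines(keepends=True):
        line_tokens = estimate_tokens(line)
        if current and current_tokens + line_tokens > max_tokens:
            chunks.append("".join(current))
            current, current_tokens = [], 0
        current.append(line)
        current_tokens += line_tokens
    if current:
        chunks.append("".join(current))
    return chunks
```

Splitting on line boundaries keeps code readable inside each chunk; splitting on function or file boundaries would usually be better for repo-scale prompts, at the cost of a language-aware parser.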

FAQ (based on what people are asking)

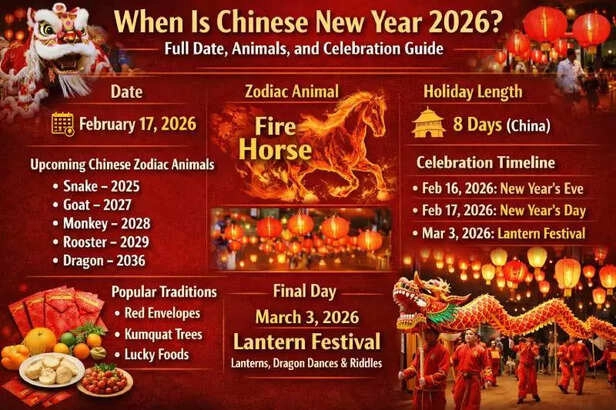

Optional: Lunar New Year timing context (illustrative)