Nano Banana 2 Is Here: Gemini 3.1 Flash Image API Access Guide

Nano Banana 2 launched today with an official announcement from Google, and the API is now available in preview through AI Studio and the Gemini API. This guide has been fully updated with confirmed specs, official pricing, and API integration instructions.

TL;DR

- Nano Banana 2 has officially launched: it's Gemini 3.1 Flash Image, announced by Google today

- Pro quality at Flash speed: advanced world knowledge, subject consistency (up to 5 characters + 14 objects), and precision text rendering, all at Flash-tier speed

- Resolution: 512px to 4K — full range confirmed by Google

- API available in preview — via AI Studio and Gemini API, also on Vertex AI

- EvoLink supports it now — just switch the model name and enjoy better pricing

Contents

- Official Launch: What Google Announced

- Nano Banana 2 vs Nano Banana Pro vs Nano Banana: Full Comparison

- What's New in Nano Banana 2

- Pricing

- API Integration Guide — Get Started Now

- Nano Banana 2 Capabilities Showcase

- Limitations & Workarounds

- FAQ

- Join the community challenge — win $1,000 in EvoLink credits

- References & Update Log

1) Official Launch: What Google Announced

Google officially launched Nano Banana 2 on February 26, 2026. Here's the confirmed rollout:

Now Available ✅

| What | Where | Details |
|---|---|---|
| Gemini App | Global | NB2 replaces NB Pro as default across Fast, Thinking and Pro models |
| Google Search | AI Mode + Lens | 141 new countries/territories, 8 additional languages |
| AI Studio + API | Preview | Model: gemini-3.1-flash-image-preview |
| Vertex AI | Preview | Via Gemini API |
| Flow | All users | Zero credits (free to use) |
| Google Ads | Available | Powers campaign creative suggestions |

Key Detail: Nano Banana Pro Still Accessible

Google AI Pro and Ultra subscribers can still access Nano Banana Pro for specialized high-fidelity tasks by regenerating images via the three-dot menu in the Gemini app.

2) Nano Banana 2 vs Nano Banana Pro vs Nano Banana: Full Comparison

| Feature | Nano Banana (2.5 Flash Image) | Nano Banana Pro (3 Pro Image) | Nano Banana 2 (3.1 Flash Image) |
|---|---|---|---|
| Architecture | Gemini 2.5 Flash | Gemini 3 Pro | Gemini 3.1 Flash |
| Resolution Range | Up to 1K (1024×1024) | Up to 4K (4096×4096) | 512px to 4K (confirmed) |
| Generation Speed | ~3 seconds | 8-12 seconds | Flash-speed (significantly faster than Pro) |
| Text Rendering | ~80% accuracy | 94% accuracy | Sharper than Pro (confirmed by Google + community) |
| Subject Consistency | Fair | High | Up to 5 characters + 14 objects (confirmed) |
| World Knowledge | Limited | Advanced | Advanced + web search grounding |
| Text Translation | No | Limited | Yes (in-image localization) |
| Positioning | Fast & cheap | Professional & high quality | Best of both: Pro quality at Flash speed |
| API Status | ✅ GA | ✅ Preview | ✅ Preview (launched Feb 26, 2026) |
| EvoLink Support | ✅ Available | ✅ Available | ✅ Available |

Why Nano Banana 2 Matters

Nano Banana 2 sits in a sweet spot that didn't exist before:

- For high-volume use cases (e-commerce, social media, ad creatives): You needed Pro quality but couldn't afford Pro latency and cost at scale. Nano Banana 2 solves this.

- For real-time applications (chatbots, interactive tools): Original Nano Banana was fast but quality-limited. Nano Banana 2 brings near-Pro quality at Flash speed.

- For developers: the same Flash-tier pricing as the original Nano Banana with dramatically better output, so the quality you get per dollar rises significantly.

3) What's New in Nano Banana 2

Confirmed by Google's official announcement:

512px to 4K Resolution

Full production-ready resolution range. Create assets for any format — from vertical social posts to wide-screen backdrops — with complete control over aspect ratios and resolutions.

Advanced World Knowledge + Web Search Grounding

The model pulls from Gemini's real-world knowledge base and is powered by real-time information and images from web search. This enables:

- Accurate rendering of specific real-world subjects

- Infographics and diagrams from notes

- Data visualizations

- Factually grounded creative content

Precision Text Rendering & Translation

Generate accurate, legible text in images, and translate or localize text within images to share ideas globally. This is especially valuable for:

- Marketing mockups and ad creatives

- Greeting cards and invitations

- UI mockups and wireframes

- Multi-language product packaging

Subject Consistency at Scale

Keep up to 5 characters and up to 14 objects consistent across generations. This enables:

- Storyboarding and narrative building

- Brand character consistency across campaigns

- Product catalogs with consistent styling

Enhanced Instruction Following

The model adheres more strictly to complex requests, capturing specific nuances so the image you get is the image you asked for.

Visual Fidelity Upgrade

Vibrant lighting, richer textures, and sharper details — maintaining high-quality aesthetics at Flash speed.

AI Content Provenance

All generated images include SynthID watermarking plus C2PA Content Credentials for transparent AI content identification.

4) Pricing

| Usage | Free Tier | Paid Tier |
|---|---|---|
| Text input/output | Same as 2.5 Flash | Same as 2.5 Flash |
| Image output | Rate-limited free access | $30 per 1M tokens |

| Model | Pricing Model | Approx. Cost per Image | Speed |
|---|---|---|---|
| Nano Banana 2 | $30/M output tokens | Competitive with Flash tier | Fast |
| Nano Banana Pro | Per-token | Higher than Flash | 8-12 sec |
| Nano Banana (original) | $0.039/image (1K) | Cheapest | ~3 sec |
| DALL-E 3 | $0.040-0.120/image | Varies by quality and size | 15-25 sec |
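Token-based image pricing converts to a per-image cost as tokens per image × price per token. For the original Nano Banana, Google bills a 1K image as 1,290 output tokens, which at $30 per 1M tokens gives the $0.039 figure in the table above. The per-image token count for Nano Banana 2 at each resolution is not stated in this guide, so the minimal sketch below takes it as a parameter; treat any number you plug in as an assumption until you have checked it against Google's pricing page.

```python
def cost_per_image(tokens_per_image: int, usd_per_million_tokens: float) -> float:
    """Return the USD cost of one generated image under per-token billing."""
    return tokens_per_image * usd_per_million_tokens / 1_000_000


def batch_cost(num_images: int, tokens_per_image: int, usd_per_million_tokens: float) -> float:
    """Return the USD cost of a batch of same-resolution images."""
    return num_images * cost_per_image(tokens_per_image, usd_per_million_tokens)


# Original Nano Banana: a 1K image is billed as 1,290 output tokens at $30 per 1M tokens
print(round(cost_per_image(1290, 30.0), 4))      # 0.0387, i.e. the ~$0.039 list price
print(round(batch_cost(10_000, 1290, 30.0), 2))  # cost of 10,000 such images in USD
```

To estimate Nano Banana 2 spend, replace 1290 with the token count Google publishes for your target resolution; higher resolutions are likely to consume more tokens per image.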

💡 Via EvoLink: Access Nano Banana 2 with automatic fallback, budget controls, and unified billing across all image models. Check EvoLink pricing.

5) API Integration Guide — Get Started Now

Nano Banana 2 API is live in preview. Here's how to start generating images today.

Why EvoLink for Nano Banana 2?

- Already available: Nano Banana 2 is live on EvoLink

- One-line switch: Just change the model name to `gemini-3.1-flash-image-preview`; everything else stays the same

- Better pricing: More competitive rates than official pricing

- Automatic fallback: If NB2 has availability issues, EvoLink routes to Nano Banana Pro automatically

- Cost controls: Set budgets and rate limits to manage spend
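EvoLink performs the fallback described above on its side. If you call an endpoint that does not, you can approximate the same behavior in your own client: try the preferred model first and move to the next one only on errors that look transient (rate limits, temporary unavailability). The sketch below is a hypothetical helper, not an EvoLink or Google API. `submit` stands in for whatever function posts to `/images/generations` (for example, a wrapper around the `requests.post` call in Step 2), `TransientError` is an illustrative exception your wrapper would raise on HTTP 429/503, and using `nano-banana-pro` as the fallback model ID is an assumption based on the model names mentioned in the FAQ.

```python
from typing import Callable, Optional, Sequence


class TransientError(Exception):
    """Hypothetical error raised by `submit` for failures worth retrying on another model."""


def generate_with_fallback(
    submit: Callable[[str, str], dict],
    prompt: str,
    models: Sequence[str],
) -> tuple[str, dict]:
    """Try each model in order and return (model_used, task) from the first success.

    Non-transient errors (bad prompt, auth failure) propagate immediately,
    because switching to another model would not fix them.
    """
    last_error: Optional[Exception] = None
    for model in models:
        try:
            return model, submit(model, prompt)
        except TransientError as exc:
            last_error = exc  # remember why this model was skipped, then try the next
    raise RuntimeError(f"All models failed; last error: {last_error}")


# Primary model first; the fallback ID is an assumption, not a confirmed EvoLink name
MODELS = ["gemini-3.1-flash-image-preview", "nano-banana-pro"]
```

Keeping the retry decision in the exception type means the helper never silently switches models for errors that are the caller's fault.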

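Step 1 — Get Your API Key

Create an API key in your EvoLink account and export it as the `EVOLINK_API_KEY` environment variable; every example below reads the key from there.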

Step 2 — Generate an Image (Python)

```python
import os
import time

import requests

API_KEY = os.environ["EVOLINK_API_KEY"]
BASE_URL = "https://api.evolink.ai/v1"
MODEL = "gemini-3.1-flash-image-preview"

# Step 1: Submit image generation task
response = requests.post(
    f"{BASE_URL}/images/generations",
    headers={
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    },
    json={
        "model": MODEL,
        "prompt": "A professional product photo of a smartwatch on a dark reflective surface, studio lighting",
        "quality": "2K",
        "size": "1:1",
    },
    timeout=30,
)
response.raise_for_status()  # surface auth/validation errors instead of a KeyError below
task = response.json()
task_id = task["id"]
print(f"Task submitted: {task_id}")

# Step 2: Poll until the task reaches a terminal state
while True:
    status_resp = requests.get(
        f"{BASE_URL}/tasks/{task_id}",
        headers={"Authorization": f"Bearer {API_KEY}"},
        timeout=30,
    )
    status_resp.raise_for_status()
    result = status_resp.json()
    if result["status"] == "completed":
        print(f"Image URL: {result['output']['image_url']}")
        break
    if result["status"] in ("failed", "cancelled"):
        print(f"Task {result['status']}: {result.get('error', 'Unknown error')}")
        break
    time.sleep(3)  # wait before the next poll
```

Step 3 — Node.js Implementation

```javascript
// Requires Node.js 18+ (built-in fetch). Top-level await needs an ES module, e.g. a .mjs file.
const API_KEY = process.env.EVOLINK_API_KEY;
const BASE_URL = 'https://api.evolink.ai/v1';
const MODEL = 'gemini-3.1-flash-image-preview';

// Step 1: Submit task
const taskRes = await fetch(`${BASE_URL}/images/generations`, {
  method: 'POST',
  headers: {
    'Authorization': `Bearer ${API_KEY}`,
    'Content-Type': 'application/json',
  },
  body: JSON.stringify({
    model: MODEL,
    prompt: 'A flat lay infographic explaining the water cycle, clean educational style',
    quality: '2K',
    size: '16:9',
  }),
});
if (!taskRes.ok) throw new Error(`Submit failed: HTTP ${taskRes.status}`);
const task = await taskRes.json();
console.log(`Task submitted: ${task.id}`);

// Step 2: Poll for completion
while (true) {
  const res = await fetch(`${BASE_URL}/tasks/${task.id}`, {
    headers: { 'Authorization': `Bearer ${API_KEY}` },
  });
  const result = await res.json();
  if (result.status === 'completed') {
    console.log(`Image URL: ${result.output.image_url}`);
    break;
  }
  if (result.status === 'failed' || result.status === 'cancelled') {
    console.error(`Task ${result.status}:`, result.error);
    break;
  }
  await new Promise((r) => setTimeout(r, 3000)); // wait 3 s between polls
}
```

Step 4 — cURL

```bash
# Submit image generation task
curl -X POST https://api.evolink.ai/v1/images/generations \
  -H "Authorization: Bearer $EVOLINK_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gemini-3.1-flash-image-preview",
    "prompt": "A cyberpunk cityscape at night, neon reflections on wet streets, cinematic",
    "quality": "2K",
    "size": "16:9"
  }'

# Poll task status
curl https://api.evolink.ai/v1/tasks/TASK_ID_HERE \
  -H "Authorization: Bearer $EVOLINK_API_KEY"
```

Image Editing with Reference Images

Nano Banana 2 supports image-to-image editing by passing reference images:

```python
# Reuses requests, BASE_URL and API_KEY from the Python example above
response = requests.post(
    f"{BASE_URL}/images/generations",
    headers={
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    },
    json={
        "model": "gemini-3.1-flash-image-preview",
        "prompt": "Transform this photo into a watercolor painting style",
        "image_urls": [
            "https://example.com/your-photo.jpg"
        ],
        "quality": "2K",
    },
    timeout=30,
)
response.raise_for_status()
# The response is a task: poll /tasks/{id} as in the example above to get the image
task_id = response.json()["id"]
```

Note: You can pass up to 10 reference images per request, each up to 10MB, in JPEG, PNG, or WebP format. At most 5 of them may show real people's faces.
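Since a request that breaks these limits would presumably only be rejected after it is sent, it can be worth checking files before you upload and host them. The sketch below is a hypothetical pre-flight helper, not part of the EvoLink API: it checks the count (at most 10), the size (reading "10MB" as 10 MiB, which is an assumption), and the format by file extension (a heuristic; it does not inspect file contents). The 5-face limit cannot be checked this way because it depends on what the images show.

```python
from pathlib import PurePath

MAX_IMAGES = 10
MAX_BYTES = 10 * 1024 * 1024  # assumption: "10MB" interpreted as 10 MiB
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def validate_reference_images(files: list[tuple[str, int]]) -> list[str]:
    """Check (filename, size_in_bytes) pairs against the documented limits.

    Returns human-readable problems; an empty list means every check passed.
    """
    problems = []
    if len(files) > MAX_IMAGES:
        problems.append(f"{len(files)} images given; the limit is {MAX_IMAGES}")
    for name, size in files:
        ext = PurePath(name).suffix.lower()  # format judged by extension only
        if ext not in ALLOWED_EXTENSIONS:
            problems.append(f"{name}: unsupported format {ext or '(no extension)'}")
        if size > MAX_BYTES:
            problems.append(f"{name}: {size} bytes exceeds the {MAX_BYTES}-byte limit")
    return problems


# Example: one valid file, one too large, one unsupported format
for problem in validate_reference_images([
    ("product.jpg", 2_000_000),
    ("scan.png", 12_000_000),
    ("logo.gif", 50_000),
]):
    print(problem)
```

For files on disk, build the pairs as `(path, os.path.getsize(path))`.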

Async Callback (Optional)

Instead of polling, provide a callback URL to receive results automatically:

```python
# Reuses requests, BASE_URL and API_KEY from the Python example above
response = requests.post(
    f"{BASE_URL}/images/generations",
    headers={
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    },
    json={
        "model": "gemini-3.1-flash-image-preview",
        "prompt": "A serene Japanese garden in autumn",
        "quality": "2K",
        "callback_url": "https://your-domain.com/webhooks/image-complete",
    },
    timeout=30,
)
response.raise_for_status()
```

The callback fires when the task is completed, failed, or cancelled. The callback URL must use HTTPS, and failed deliveries are retried up to 3 times.
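On the receiving side you need an endpoint that accepts the POST, acknowledges it quickly, and then processes the result. The callback payload format is not documented in this guide, so the sketch below assumes it mirrors the task-status response used in the polling examples (`id`, `status`, `output.image_url`, `error`). It uses only Python's standard-library `http.server`, which serves plain HTTP, so it is meant for local testing behind an HTTPS-terminating proxy rather than for production. Answering with a 2xx status right away matters because the sender retries failed deliveries.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def summarize_callback(payload: dict) -> str:
    """Turn a callback payload (assumed to mirror /tasks/{id}) into a log line."""
    task_id = payload.get("id", "?")
    status = payload.get("status", "unknown")
    if status == "completed":
        image_url = (payload.get("output") or {}).get("image_url")
        return f"{task_id}: completed -> {image_url}"
    if status in ("failed", "cancelled"):
        return f"{task_id}: {status} ({payload.get('error', 'no error message')})"
    return f"{task_id}: unexpected status {status!r}"


class CallbackHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly Content-Length bytes of the JSON body
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except ValueError:  # covers JSONDecodeError and bad UTF-8
            payload = None
        if not isinstance(payload, dict):
            self.send_response(400)  # malformed body: reject it
            self.end_headers()
            return
        print(summarize_callback(payload))
        # Acknowledge immediately so the sender does not retry; do slow work elsewhere
        self.send_response(204)
        self.end_headers()


def run(port: int = 8080) -> None:
    """Serve callbacks over plain HTTP on every interface until interrupted."""
    HTTPServer(("0.0.0.0", port), CallbackHandler).serve_forever()
```

Call `run()` to start listening. The handler treats a POST to any path as a callback; if the sender supports a signature or shared secret, verify it before trusting the payload.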

6) Nano Banana 2 Capabilities Showcase

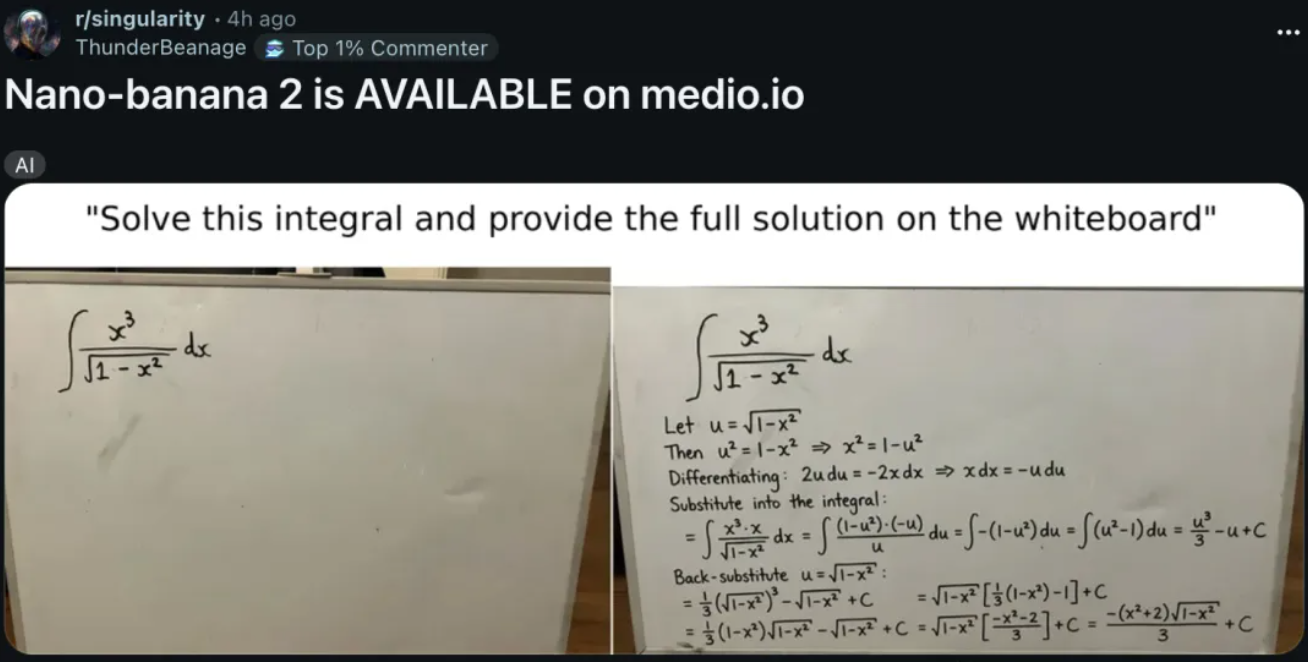

6.1 Infographics & Data Visualization (NEW)

One of the standout new capabilities. Nano Banana 2 can turn notes into diagrams, create infographics, and generate data visualizations — powered by its advanced world knowledge and web search grounding.

6.2 Photorealistic Portraits & Characters

A significant leap in character realism:

- Facial features with realistic eye reflections and micro-expressions

- Skin rendering with natural subsurface scattering

- Hair physics with individual strand detail

- Clothing textures with accurate fabric draping

Comparison: Nano Banana 2 (left) vs Nano Banana (right) — notice the dramatic improvement in photorealistic quality

6.3 Text-Heavy Content

Nano Banana 2 generates images with accurate, readable text — including multi-line paragraphs, marketing copy, and localized translations. This was a major weakness of the original Nano Banana.

Nano Banana 2 demonstrating precision text rendering — generating complete, readable text content

6.4 Anime & Stylized Art

Exceptional quality in stylized content:

- Perfect execution of complex action poses

- Accurate facial expressions matching prompts

- Sophisticated light effects and energy rendering

- Consistent character design across multiple generations

Nano Banana 2's anime generation: showcasing perfect action pose and lighting effects

6.5 Creative & Surreal Concepts

Excels at material rendering for impossible concepts:

- Transparent and glass-like materials with realistic refraction

- Complex environmental reflections on curved surfaces

- Seamless blending of photorealistic and surreal elements

7) Limitations & Workarounds

| Limitation | Workaround |
|---|---|
| Occasional text typos in images | Keep phrases short; use inpainting for fixes |
| Hand anatomy inconsistencies | Position hands away from camera; use clear pose descriptions |
| Character drift across generations | Reuse same seed; include signature traits in every prompt |
| Cost during experimentation | Start with lower resolutions; cache successful prompts; upscale only finals |
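The "include signature traits in every prompt" workaround is easy to apply inconsistently when prompts are written by hand. One way to enforce it is to keep a fixed description per character and prepend it automatically, so every request repeats the exact same wording. This is a minimal sketch; the character sheet, its contents, and `build_prompt` are hypothetical illustrations, not part of any API.

```python
# Hypothetical character sheet: fixed descriptions reused verbatim in every prompt
CHARACTERS = {
    "Mira": "a woman in her 30s with a silver bob haircut, round green glasses and a mustard raincoat",
    "Bolt": "a small orange robot with one blue eye and a dented left antenna",
}


def build_prompt(scene: str, cast: list[str], style: str = "") -> str:
    """Prefix a scene description with the exact traits of each character in it."""
    missing = [name for name in cast if name not in CHARACTERS]
    if missing:
        raise KeyError(f"No character sheet for: {', '.join(missing)}")
    traits = "; ".join(f"{name} is {CHARACTERS[name]}" for name in cast)
    parts = [f"Characters: {traits}.", scene]
    if style:
        parts.append(f"Style: {style}.")
    return " ".join(parts)


# Every storyboard panel repeats identical trait text, so descriptions cannot drift
panels = ["Mira and Bolt wait at a rainy bus stop.", "Bolt hands Mira a folded umbrella."]
for scene in panels:
    print(build_prompt(scene, ["Mira", "Bolt"], style="soft watercolor"))
```

Raising on an unknown name is deliberate: a typo in the cast list would otherwise produce a prompt with no traits at all, which is exactly the drift this helper is meant to prevent.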

💡 Nano Banana 2's improvements in text rendering and character consistency mean you'll encounter these less frequently than with previous models.

8) FAQ

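Is Nano Banana 2 officially released?

Yes. Google launched it on February 26, 2026, and the API is available in preview through AI Studio, the Gemini API, and Vertex AI.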

What's the model ID for API calls?

`gemini-3.1-flash-image-preview`, available now in AI Studio and the Gemini API. On EvoLink, use the same model ID.

How is it different from Nano Banana Pro?

Nano Banana 2 is built on Flash architecture (optimized for speed and cost), while Pro is built on Pro architecture (optimized for maximum quality). NB2 delivers near-Pro quality at Flash-tier speed and pricing. Google says it offers "the advanced world knowledge, quality and reasoning you love in Nano Banana Pro, at lightning-fast speed."

Will my existing EvoLink integration break?

No. Existing `nano-banana` or `nano-banana-pro` integrations continue working unchanged. Nano Banana 2 is a new model option: switch by changing the model name to `gemini-3.1-flash-image-preview`.

Can I use generated images commercially?

Follow Google's usage policy for Gemini image models. All images include SynthID watermarking and C2PA Content Credentials for AI content identification.

Does it support 4K?

Yes. Google confirmed resolution support from 512px to 4K.

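How much does it cost?

On the Gemini API paid tier, image output is billed at $30 per 1M output tokens; the free tier offers rate-limited access. See the Pricing section for a comparison with other image models.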

What happened to Nano Banana Pro in the Gemini app?

Nano Banana 2 replaces Nano Banana Pro as the default. Google AI Pro and Ultra subscribers can still access Pro for specialized tasks via the regenerate option (three-dot menu).

9) Join the community challenge — win $1,000 in EvoLink credits

How to participate

- Post your image on Twitter/X and tag @evolinkai

- Collect likes (retweets and comments may be used to break ties)

- Email your tweet link + like‑count screenshot to [email protected]

- Prize: 1st place wins $1,000 in EvoLink credits (non‑withdrawable; redeemable only for API usage)

- Timeline: [START_DATE] – [END_DATE], [TIMEZONE]. Winners announced via @evolinkai and email

- Eligibility & content rules: You must own the rights to your inputs; no prohibited content; by submitting you grant EvoLink permission to showcase your work with attribution

10) References & Update Log

Sources

- Google Official Blog: Nano Banana 2 announcement — Feb 26, 2026

- Google AI Studio: Try Nano Banana 2

- Gemini API Image Generation Docs

- Gemini API Pricing

- Reddit r/Bard: Nano Banana 2 sightings — Feb 25, 2026

- Habr: Вышла Nano Banana 2 ("Nano Banana 2 Is Out") — Feb 26, 2026

Update log

- 2026‑02‑26: Major update — Nano Banana 2 officially launched by Google. Updated all sections with confirmed specs, official pricing, API availability, and launch details.

- 2025‑11‑09: Initial publication — early signals and preparation guide.