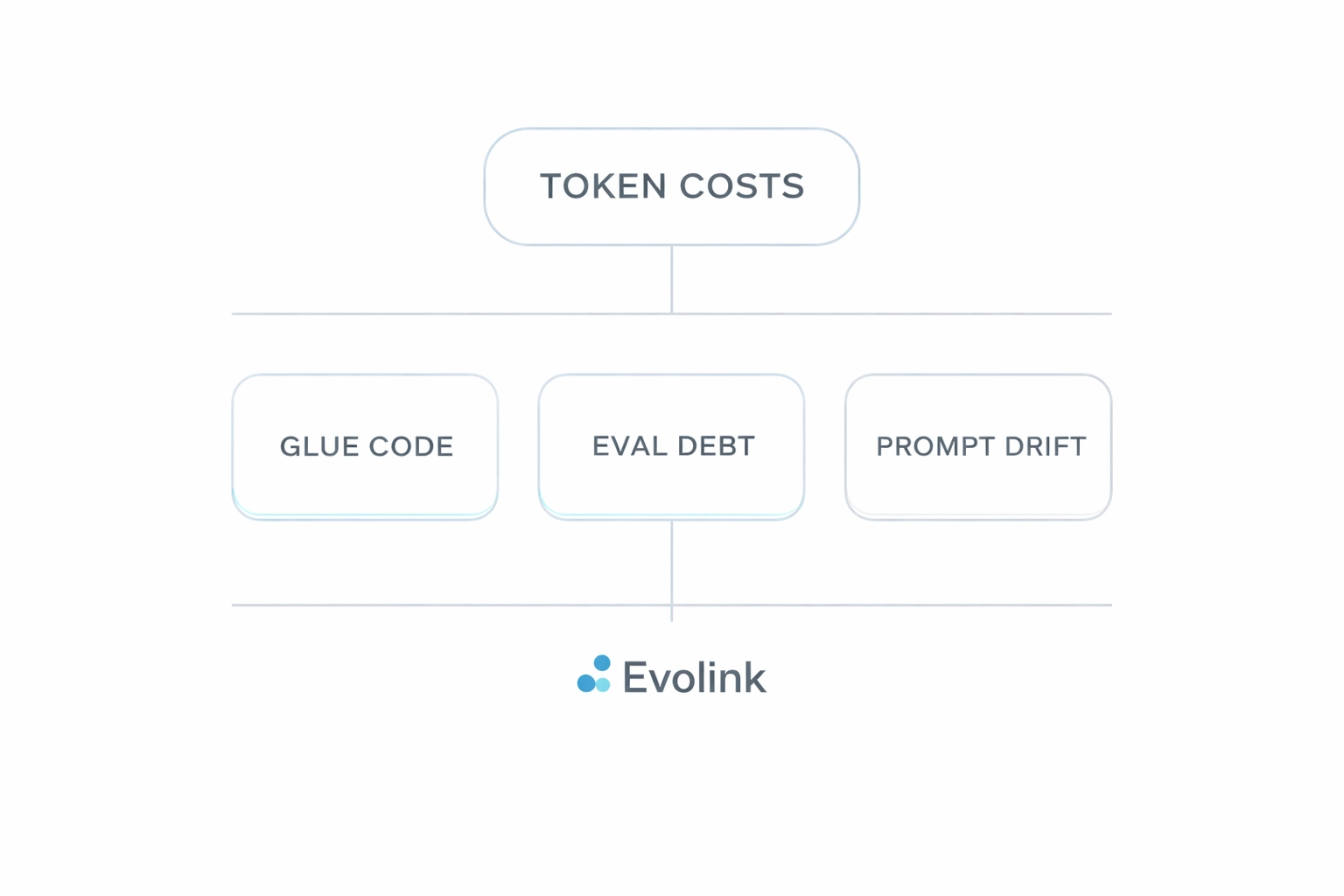

LLM TCO in 2026: Why Token Costs Are Only Part of the Real Price

Most teams estimate the cost of LLM features using a single metric: price per 1M tokens.

That metric matters — but only on paper.

In real production systems, LLM Total Cost of Ownership (TCO) is often driven not just by token spend, but by engineering overhead: integration work, reliability fixes, prompt maintenance, and evaluation gaps that quietly erode AI ROI over time.

This guide explains the hidden costs of LLM integration and provides a practical framework to identify where money and engineering time are actually going:

- Glue Code — the ongoing integration tax

- Eval Debt — the cost of uncertainty

- Prompt Drift — the migration that never ends

A 10-Minute LLM TCO Self-Audit

Before going deeper, answer these five questions:

- How many models or providers does your system support today (including planned ones)?

- Do you maintain provider-specific adapters or conditional branches?

- Do you run automated evaluations on every model change?

- Can you reroute traffic to another model without rewriting prompts or business logic?

- Do you have a single view of cost, latency, and failure rates?

Hidden Cost #1 — Glue Code: The Integration Tax

Glue code is engineering work that produces no user-facing value but is required to normalize differences between providers.

It grows in three predictable areas.

1) Usage & Context Management

Once multiple models are involved, usage accounting stops being uniform.

Common sources of glue code include:

- context-window calculation and truncation

- "safe max output" guards

- inconsistent or missing usage fields

Context overflow often causes retries, partial outputs, and unexpected spend — not just errors.

2) Reliability & Failure Normalization

Different APIs fail in fundamentally different ways:

- structured API errors vs. transport-level failures

- throttling vs. silent timeouts

- partial streaming vs. abrupt disconnects

This turns "just add retries" into a growing decision tree.

# Illustrative example: provider-agnostic failure normalization

def should_retry(err) -> bool:

if getattr(err, "status", None) in (408, 429, 500, 502, 503, 504):

return True

if "timeout" in str(err).lower() or "connection" in str(err).lower():

return True

return FalseThis code keeps systems alive — but adds nothing to product differentiation.

3) Tool Calling & Structured Outputs

The moment you rely on tools or strict JSON outputs, you are integrating a protocol, not a chat API.

Even APIs that accept similar request shapes can differ in:

- where tool calls appear in responses

- how arguments are encoded

- how strictly structured output is enforced

This is a direct consequence of LLM API fragmentation.

Glue Code Smell Test

You are paying an integration tax if:

- prompts fork by provider

- streaming parsers differ per model

- adapters multiply over time

- observability is provider-centric rather than feature-centric

Hidden Cost #2 — Eval Debt: The Cost of Uncertainty

Eval debt accumulates when teams deploy models without automated evaluation tied to real workflows.

The result is predictable:

- migrations feel risky

- cheaper or faster models go unused

- teams stick with expensive defaults

- AI ROI declines over time

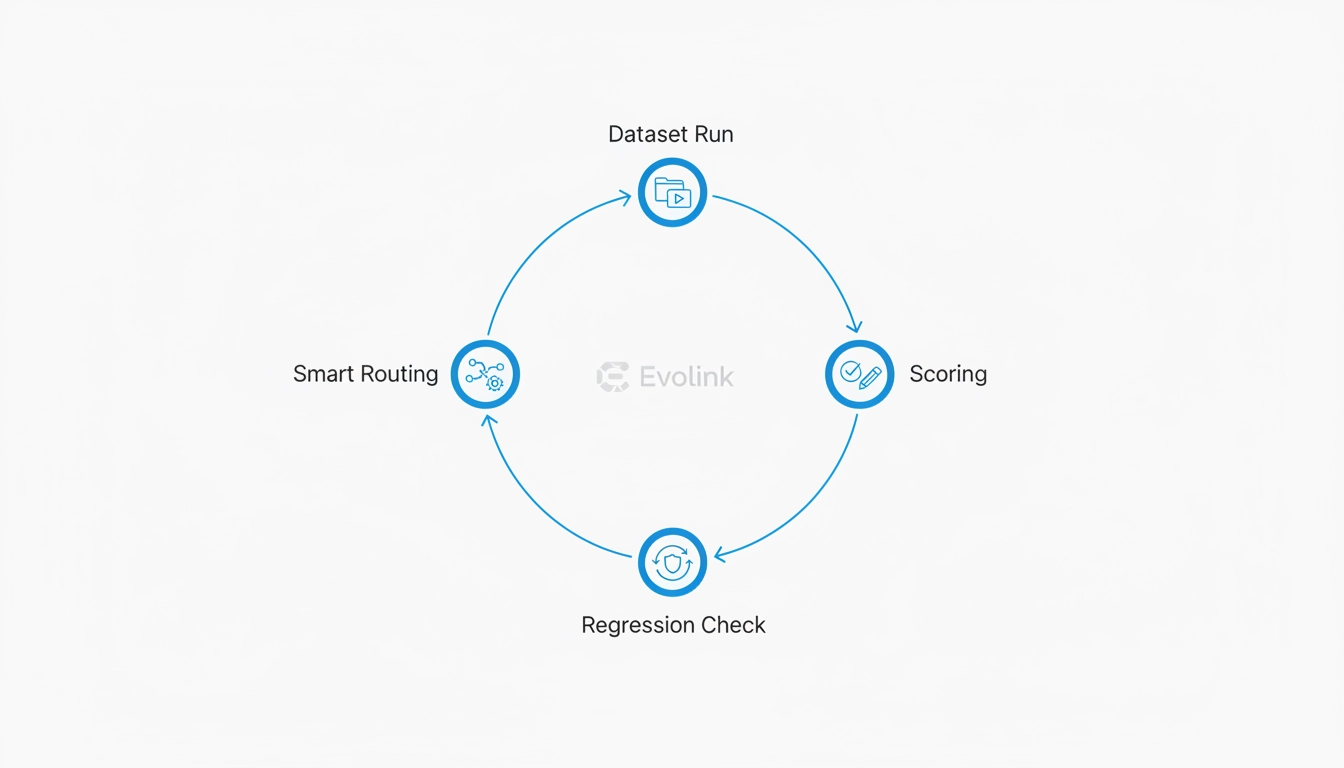

The Minimum Viable Eval Loop (MVEL)

You do not need a full MLOps platform to reduce eval debt.

You need a loop that answers one question:

If we change the model, will users notice?

A practical baseline many teams can implement in 1–2 days:

1) Small, Versioned Datasets (50–300 cases)

Use real production examples:

- common user flows

- edge cases

- historical failures

eval/

├── datasets/

│ ├── v1_core.jsonl

│ ├── v1_edges.jsonl

│ └── v1_failures.jsonl

2) Repeatable Batch Runner

One script that:

- runs the same dataset across models

- records outputs, latency, and cost

- runs locally or in CI

3) Lightweight Scoring (Regression-Focused)

At minimum, track:

- format validity

- required fields present

- latency and cost thresholds

4) Simple Eval Configuration

dataset: datasets/v1_core.jsonl

model_targets:

- primary

- candidate

metrics:

- format_validity

- required_fields

thresholds:

format_validity: 0.98

latency_p95_ms: 1200

report:

output: reports/diff.htmlThis structure alone dramatically lowers migration risk.

Hidden Cost #3 — Prompt Drift: The Migration That Never Ends

The most common misconception in LLM engineering is:

"We'll just swap the model ID later."

In practice, prompts drift because models differ in:

- formatting discipline

- tool-use behavior

- refusal thresholds

- instruction-following style

A Common Failure Pattern (Provider-Agnostic)

- Prompt requires strict JSON output

- Model A complies consistently

- Model B adds a short explanation or refusal sentence

- Downstream parsing fails

- Engineers patch prompts, parsers, or both

LLM TCO Iceberg: Where Costs Actually Come From

- Visible cost: Token pricing

- Hidden costs:

- Glue code maintenance

- Prompt drift remediation

- Eval infrastructure

- Debugging, retries, and rollbacks

Note on Multimodal Systems (Image & Video)

While this article focuses on LLM integration, the same TCO framework applies even more strongly to multimodal systems such as image and video generation.

Once you move beyond text, engineering overhead expands to include asynchronous job orchestration, webhooks or polling, temporary asset storage, bandwidth costs, timeout handling, and quality evaluation for non-deterministic outputs. In practice, these factors often outweigh per-unit pricing — whether the unit is tokens, images, or seconds of video.

This is why teams building production-grade image or video workflows frequently experience higher glue code and evaluation costs than pure text systems, even when model pricing appears cheaper on paper.

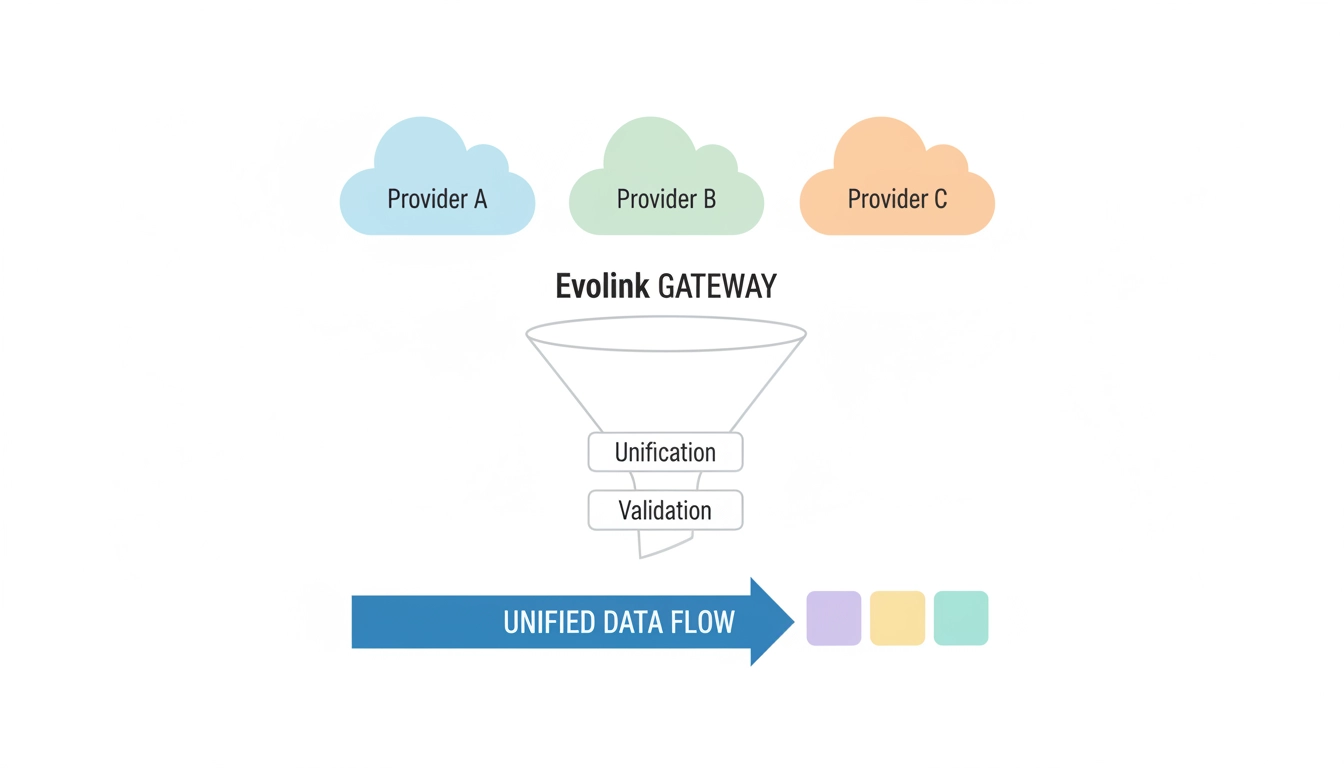

Direct Integration vs. Normalized Gateway

| Cost Area | Direct Integration | Normalized Gateway |

|---|---|---|

| Token cost | Low–variable | Low–variable |

| Integration effort | High | Lower |

| Maintenance | Continuous | Centralized |

| Migration speed | Slow | Faster |

| Observability | Fragmented | Unified |

| Engineering overhead | Repeated | Consolidated |

At this stage, the real decision isn't which model to use — it's where you want this complexity to live.

Leading teams move fragmentation, routing, and observability out of application code and into a dedicated gateway layer.

That architectural shift is exactly why Evolink.ai exists.

FAQ (Search-Optimized)

How do you calculate the hidden costs of LLM integration?

By accounting for engineering time spent on integration, evaluation, prompt maintenance, reliability fixes, and migrations — not just token spend.

What is the engineering overhead of multi-LLM strategies?

It includes glue code, prompt drift handling, evaluation infrastructure, and cross-provider observability.

What is eval debt in LLM systems?

Eval debt is the accumulated risk caused by deploying models without automated evaluation, making future changes slower and more expensive.

How does an LLM gateway improve AI ROI?

By centralizing normalization, routing, and observability, allowing teams to optimize or switch models without rewriting feature-level integration code.