If you use coding CLIs daily, setup friction becomes a real cost: multiple API keys, different config formats, auth conflicts, and the classic 401/429/stream-stuck loop.

This guide shows how to point Codex CLI, Claude Code, and Gemini CLI at one gateway host (api.evolink.ai), with quick verification and a practical troubleshooting playbook.

Want the shortest path? Use the dedicated integration guides (recommended).

Start here (fastest setup)

Pick your tool and follow the dedicated guide linked in the intro above.

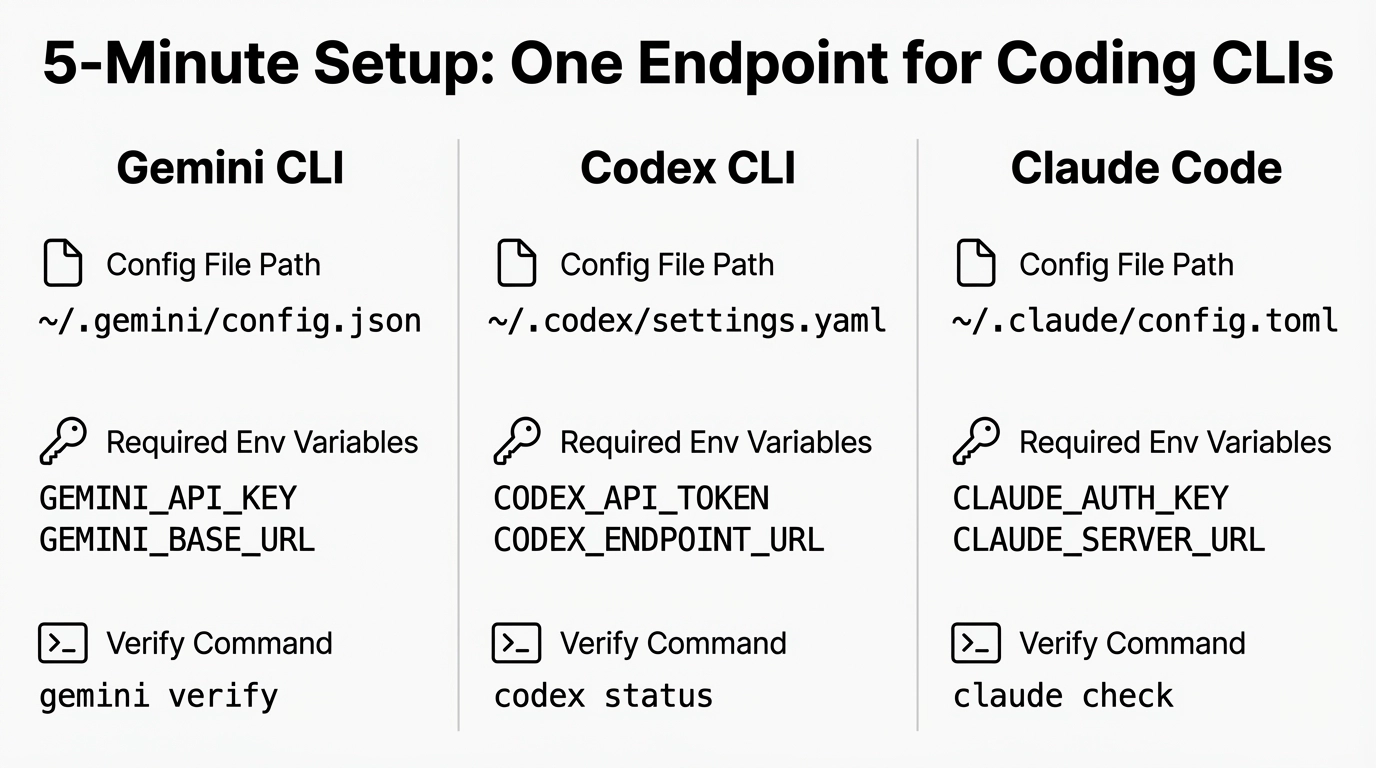

TL;DR: 5-minute checklist (pick your tool)

| Tool | Where to configure | Key / token | Base URL | Verify |
|---|---|---|---|---|
| Codex CLI | ~/.codex/config.toml | OPENAI_API_KEY | https://api.evolink.ai/v1 | codex "Who are you" |
| Claude Code | ~/.claude/settings.json | ANTHROPIC_AUTH_TOKEN | https://api.evolink.ai | claude "Who are you" |
| Gemini CLI | ~/.gemini/.env | GEMINI_API_KEY | https://api.evolink.ai/ | gemini "Who are you" |

Note: Some CLIs require slightly different endpoint shapes (e.g. a /v1 suffix or a trailing slash). Follow each tool's guide for the exact format that CLI expects.
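The three base URLs in the table differ only in their suffix, so they can all be derived from one host. A minimal sketch, assuming bash: `base_url_for` and its shape names (`bare`, `v1`, `slash`) are hypothetical helpers made up for illustration, not part of any of the CLIs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the base URL shape a given tool expects.
# Usage: base_url_for <host-url> <shape>   (shape: bare | v1 | slash)
base_url_for() {
  local url="${1%/}"                          # drop one trailing slash, if present
  case "$2" in
    bare)  printf '%s\n' "$url" ;;
    v1)    printf '%s\n' "${url%/v1}/v1" ;;   # avoid producing /v1/v1
    slash) printf '%s\n' "$url/" ;;
    *)     echo "unknown shape: $2" >&2; return 1 ;;
  esac
}

base_url_for "https://api.evolink.ai/" v1     # prints https://api.evolink.ai/v1  (Codex CLI row)
base_url_for "https://api.evolink.ai"  bare   # prints https://api.evolink.ai     (Claude Code row)
base_url_for "https://api.evolink.ai"  slash  # prints https://api.evolink.ai/    (Gemini CLI row)
```

Keeping the host in one place and deriving the per-tool form makes it harder to paste a `/v1` URL into a tool that expects the bare host.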

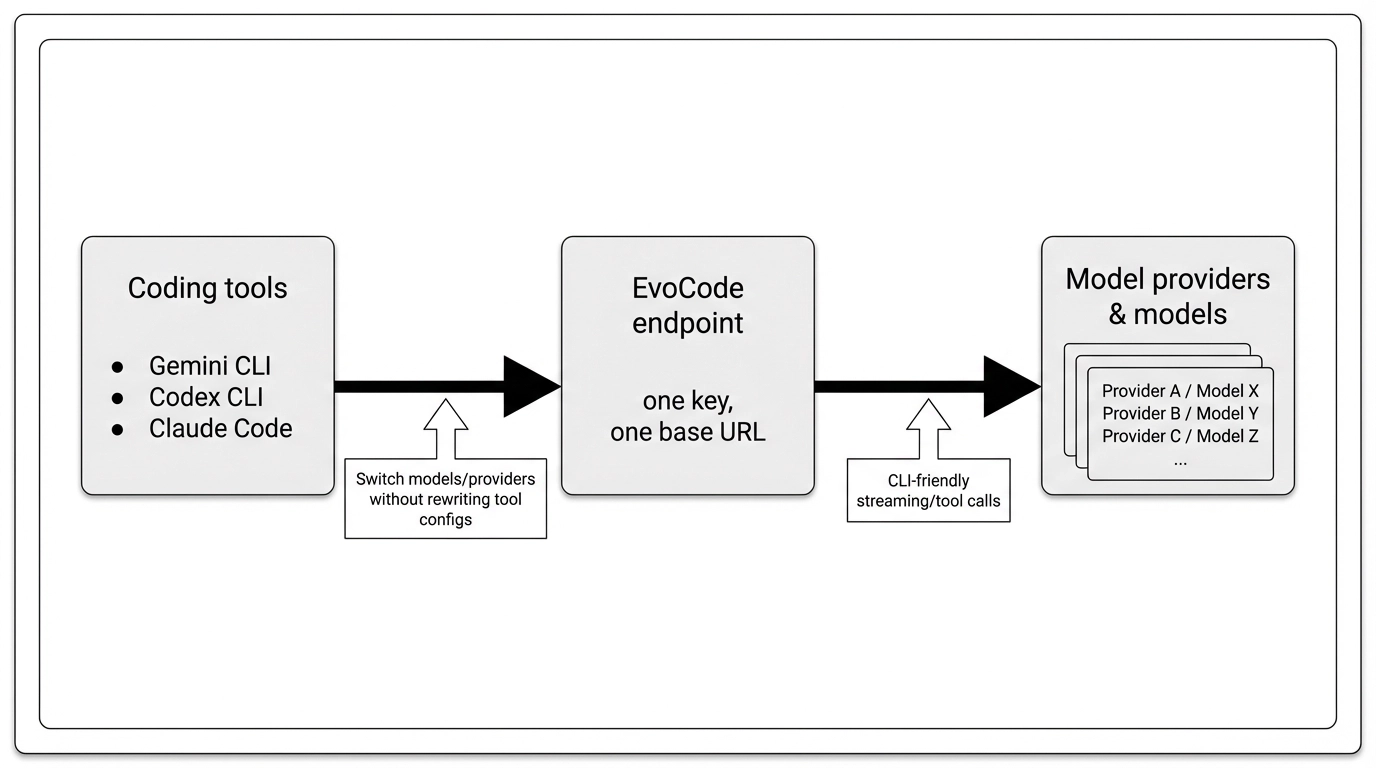

Why route coding CLIs through a gateway host?

Use a gateway/router when you want one or more of these:

- Stop juggling keys across multiple tools

- Switch models/providers without rewriting tool configs

- Route traffic through one place for operational controls (retries/timeouts/auditing)

- Keep your workflow stable as tools and providers evolve

This guide focuses on setup + operational reality: what breaks and how to fix it.

Decision table: direct-to-provider vs one gateway host

Before you commit, here's the real tradeoff in production.

| What you care about | Direct-to-provider CLIs | One gateway host (api.evolink.ai) |
|---|---|---|
| Setup across multiple tools | Repeated per tool | Standardized entry point |
| Switching models/providers | More rewiring | Easier to centralize and evolve |
| Observability (cost/latency/errors) | Fragmented across vendors | Can be unified at the gateway |
| Debugging (401/429/stream issues) | Tool-by-tool | Central patterns + per-tool adapters |
| Operational overhead | Lower infra responsibility | You operate/choose a gateway layer |

Path A — Codex CLI (custom provider via config.toml)

Codex CLI reads its custom provider settings from ~/.codex/config.toml.

Minimal steps

- Install: npm install -g @openai/codex

- Set your API key: export OPENAI_API_KEY="YOUR_EVOLINK_KEY"

- Create / edit the config file: ~/.codex/config.toml

- Verify: codex "Who are you"

Minimal config.toml snippet (example)

model = "gpt-5.2"

model_provider = "evolink"

[model_providers.evolink]

name = "EvoLink API"

base_url = "https://api.evolink.ai/v1"

env_key = "OPENAI_API_KEY"

wire_api = "responses"

Path B — Claude Code / Claude CLI (settings.json + Anthropic base URL)

Claude Code reads its settings from ~/.claude/settings.json.

Minimal steps

- Install: npm install -g @anthropic-ai/claude-code

- Edit ~/.claude/settings.json

- Verify: claude "Who are you"

Minimal settings.json snippet (example)

{

"env": {

"ANTHROPIC_AUTH_TOKEN": "YOUR_EVOLINK_KEY",

"ANTHROPIC_BASE_URL": "https://api.evolink.ai",

"CLAUDE_CODE_DISABLE_NONESSENTIAL_TRAFFIC": "1"

},

"permissions": { "allow": [], "deny": [] }

}

Auth conflict note (common pitfall)

If you previously logged in using a subscription flow and also set an API key/token, behavior may be inconsistent. If you see warnings or unexpected auth behavior:

- Run claude /logout and re-auth cleanly, and/or

- Unset conflicting Anthropic env vars, then restart your terminal
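To see which Anthropic variables the current shell would pass to the CLI, and to clear them in one go, something like the following can help. It is a sketch, assuming a bash-compatible shell: `list_anthropic_vars` and `clear_anthropic_vars` are hypothetical helper names, and the functions only see exported variables in the current session. Values set in a shell profile or in the `env` block of settings.json are not touched.

```bash
# Print the names of exported variables that start with ANTHROPIC_.
list_anthropic_vars() {
  env | sed -n 's/^\(ANTHROPIC_[A-Za-z0-9_]*\)=.*/\1/p'
}

# Unset every variable reported by list_anthropic_vars in the current shell.
clear_anthropic_vars() {
  local name
  for name in $(list_anthropic_vars); do
    unset "$name"
  done
}

# Usage (source this file first so the functions run in your own shell):
#   list_anthropic_vars     # inspect what is set
#   clear_anthropic_vars    # remove it for this session
```

If the variables come back in a new terminal, they are being exported from a profile file such as ~/.bashrc or ~/.zshrc and need to be removed there.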

Path C — Gemini CLI (.env + custom base URL)

Gemini CLI reads its settings from ~/.gemini/.env.

Prerequisite

Use Node.js 20+. Check:

node -v

Minimal steps

- Install: npm install -g @google/gemini-cli

- Create / edit ~/.gemini/.env

- Verify: gemini "Who are you"

Minimal .env snippet (example)

GOOGLE_GEMINI_BASE_URL="https://api.evolink.ai/"

GEMINI_API_KEY="YOUR_EVOLINK_KEY"

GEMINI_MODEL="gemini-2.5-pro"

Known pitfall: base URL not taking effect

If the CLI still ignores GOOGLE_GEMINI_BASE_URL:

- Restart your terminal session

- Confirm the .env file path is correct

- Check the CLI auth mode and cached sessions

- Re-run with a minimal prompt to isolate config vs prompt/runtime issues
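The file-path check above can be made concrete by reading the value back out of the file and comparing it with what the current shell has exported. This is a minimal sketch, assuming bash and a simple `KEY="value"` file like the snippet earlier in this section: `dotenv_value` and `check_gemini_base_url` are hypothetical helpers, and the parser ignores `export` prefixes, comments after values, and multi-line values.

```bash
# Print the value assigned to KEY in a dotenv-style FILE (last assignment wins).
dotenv_value() {
  local file="$1" key="$2" line
  [ -f "$file" ] || { echo "missing file: $file" >&2; return 1; }
  line=$(grep -E "^${key}=" "$file" | tail -n 1)
  [ -n "$line" ] || { echo "$key not set in $file" >&2; return 1; }
  line=${line#*=}                      # keep everything after the first '='
  line=${line%\"}; line=${line#\"}     # strip one pair of surrounding double quotes
  printf '%s\n' "$line"
}

# Show the base URL from the file next to the one exported in this shell.
check_gemini_base_url() {
  local file="${1:-$HOME/.gemini/.env}" from_file
  from_file=$(dotenv_value "$file" GOOGLE_GEMINI_BASE_URL) || return 1
  echo "file:  $from_file"
  echo "shell: ${GOOGLE_GEMINI_BASE_URL:-<unset>}"
}

# Usage:
#   check_gemini_base_url                 # uses ~/.gemini/.env
#   check_gemini_base_url ./project/.env  # or point it at another file
```

If the two lines differ, a stale value exported in the shell is worth ruling out before digging into auth modes or cached sessions.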

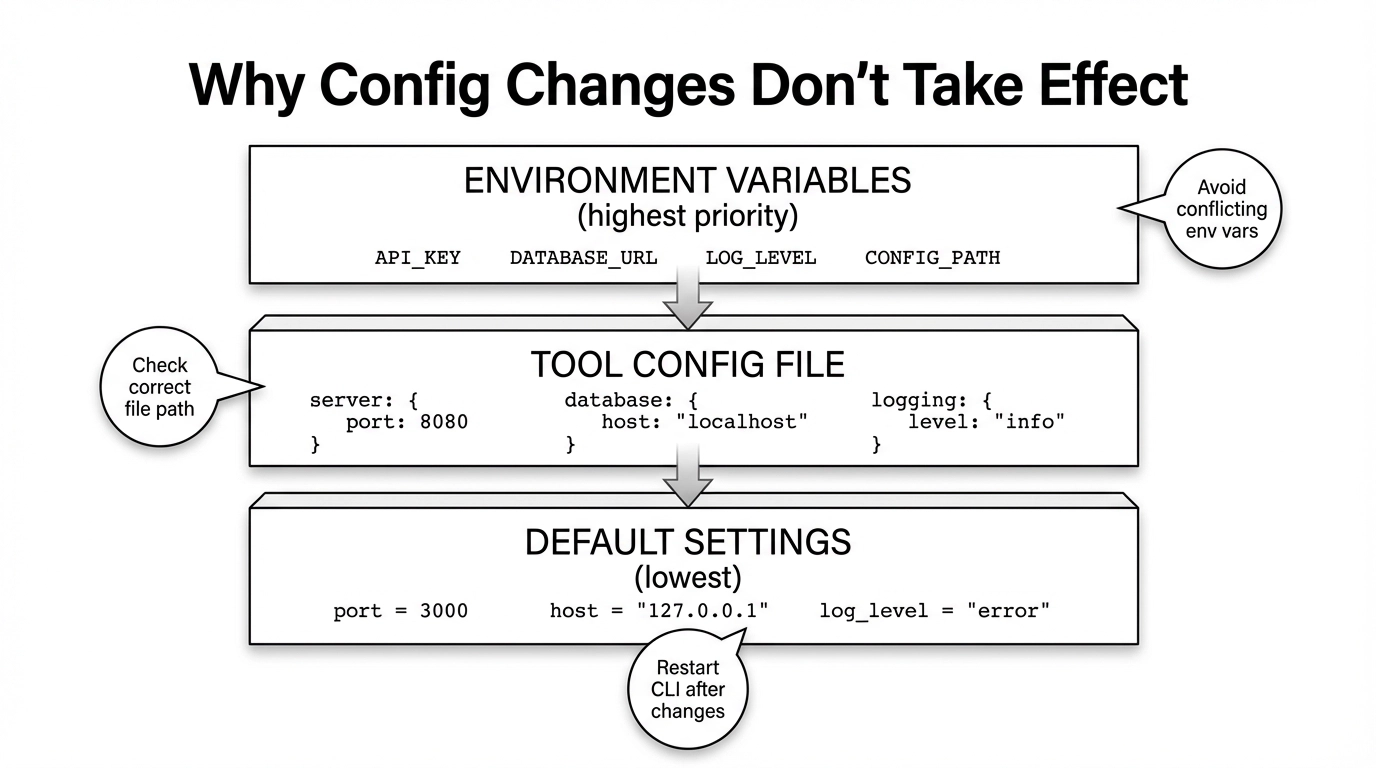

Why config changes don't take effect (precedence checklist)

Most "it didn't change" issues come from one of these:

- Wrong file location. Codex: ~/.codex/config.toml / Claude: ~/.claude/settings.json / Gemini: ~/.gemini/.env

- You edited the file but didn't restart. Restart the terminal or the CLI process.

- Environment variables override config. Especially relevant for Claude auth conflicts (token vs login).

Quick check:

env | grep -E "OPENAI_API_KEY|ANTHROPIC_|GEMINI_|GOOGLE_GEMINI_BASE_URL"
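The `env | grep` check prints API keys in full, which is awkward in a shared terminal or a screenshot. A sketch of a masked variant, assuming bash (it relies on `${!name}` indirect expansion): `mask` and `show_cli_env` are hypothetical helper names, and the variable list is the one used in this guide.

```bash
# Hide all but the last four characters of a secret.
mask() {
  local v="$1"
  if [ "${#v}" -le 4 ]; then
    printf '****\n'
  else
    printf '****%s\n' "${v: -4}"
  fi
}

# Print each variable this guide uses; keys and tokens are masked, the rest shown as-is.
show_cli_env() {
  local name value
  for name in OPENAI_API_KEY ANTHROPIC_AUTH_TOKEN GEMINI_API_KEY \
              ANTHROPIC_BASE_URL GOOGLE_GEMINI_BASE_URL GEMINI_MODEL; do
    value="${!name-}"
    case "$name" in
      *_KEY|*_TOKEN) [ -n "$value" ] && value=$(mask "$value") ;;
    esac
    printf '%s=%s\n' "$name" "${value:-<unset>}"
  done
}

show_cli_env
```

The last four characters are usually enough to tell which key is active without exposing it.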

Troubleshooting cheat sheet (401/403/429/stream/tool-calls/timeout)

401 / 403 (auth errors)

- Is your key set in the variable the tool reads?

  - Codex: OPENAI_API_KEY

  - Claude: ANTHROPIC_AUTH_TOKEN

  - Gemini: GEMINI_API_KEY

- Does your base URL match the endpoint format used by your integration guide?

- Re-export the env var and restart your shell

- Re-check config file location and spelling

- For Claude: /logout, then re-auth cleanly


429 (rate limit / quota / throttling)

- Reduce concurrency (avoid many parallel runs)

- Add small delays between large tasks

- Retry with exponential backoff (best handled by a gateway/router, when available)

If 429 persists, treat it as an operational issue: burst patterns, long streaming sessions, or heavy tool-calls can amplify the problem.
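When no gateway-side retry is available, exponential backoff can be approximated in the shell. A minimal sketch, assuming bash: `retry_backoff` is a hypothetical wrapper, it retries on any non-zero exit status (it cannot tell a 429 from other failures), and it uses whole-second delays without jitter.

```bash
# retry_backoff <max_attempts> <base_delay_seconds> <command...>
# Re-runs the command until it succeeds, doubling the delay after each failure.
retry_backoff() {
  local max="$1" delay="$2" attempt=1
  shift 2
  while true; do
    "$@" && return 0
    if [ "$attempt" -ge "$max" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
}

# Usage: up to 4 attempts, waiting 2s, 4s, then 8s between them.
#   retry_backoff 4 2 your-cli-command --with args
```

Because every failure is retried, keep the attempt count low so that an auth or config error does not turn into a long wait.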

Stream stuck / long output hangs

- Try a short prompt to validate connectivity

- Disable VPN/proxy temporarily to isolate network issues

- Re-run in a clean directory (avoid huge repo contexts)

Tool-calls fail (agent tries to run commands/files)

Common causes:

- Permission policy blocks execution

- Tool environment missing dependencies (git, ripgrep, build tools)

- Path/sandbox restrictions

What to try:

- Confirm tool permission policy and working directory

- Reproduce with a minimal tool action

Timeout

- Split tasks into smaller prompts

- Reduce repo context size

- Avoid very long streaming sessions for a first test

Model switching (quick)

- Codex CLI: Update model = "..." in ~/.codex/config.toml

- Claude Code: Use /model (if supported by your version)

- Gemini CLI: Use /model or update GEMINI_MODEL in .env
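For the Codex case, the edit can be scripted instead of done by hand. A sketch, assuming bash and a config shaped like the minimal snippet in Path A: `set_toml_model` is a hypothetical helper, it rewrites every line that starts with `model =` (so a `model` key inside a later table would change too), and it is plain text substitution rather than a real TOML parser.

```bash
# set_toml_model <file> <model>: rewrite lines of the form `model = "..."`.
set_toml_model() {
  local file="$1" model="$2" tmp
  case "$model" in
    ''|*[!A-Za-z0-9._:/-]*) echo "unexpected model name: $model" >&2; return 1 ;;
  esac
  grep -q '^model[[:space:]]*=' "$file" || {
    echo "no model line found in $file" >&2
    return 1
  }
  tmp=$(mktemp) || return 1
  # model_provider is left alone: the pattern needs '=' right after `model`.
  sed "s|^model[[:space:]]*=.*|model = \"$model\"|" "$file" > "$tmp" \
    && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage:
#   set_toml_model ~/.codex/config.toml "gpt-5.2"
```

Writing through a temporary file and then copying the contents back keeps the original file's permissions and any symlink intact.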

Next steps

If you're using multiple coding CLIs, the fastest way to reduce friction is to standardize on:

- A single gateway host

- Predictable setup templates per tool

- A repeatable troubleshooting playbook

Start with the dedicated integration guides (see intro above).

FAQ

What is a "custom LLM endpoint" for a coding CLI?

A custom endpoint is a base URL that your CLI sends requests to instead of a default provider endpoint. In practice, it can be a gateway/router that exposes one or more model APIs behind a single host.

Why does this guide show different endpoint formats (/v1, trailing slash) for different tools?

The guide uses one host (api.evolink.ai), while matching each CLI's expected endpoint format.

Where is the Codex CLI config file located?

It is located at ~/.codex/config.toml.

How do I set a custom base_url in Codex CLI?

Set base_url under your custom provider section in config.toml.

What does wire_api = "responses" mean in Codex config?

It indicates which API shape the CLI uses when talking to the endpoint. Keep it aligned with your integration guide.

Where is Claude Code settings.json located?

It is located at ~/.claude/settings.json.

What is ANTHROPIC_BASE_URL used for?

It sets the base URL Claude Code sends requests to, enabling routing through a custom endpoint instead of a default provider endpoint.

Why does Claude Code warn about auth conflicts?

It can happen when a previous subscription login and an API key/token are both present. Running /logout, unsetting conflicting env vars, and restarting the shell usually fixes it.

Where does Gemini CLI read .env from?

From ~/.gemini/.env.

Why doesn't GOOGLE_GEMINI_BASE_URL take effect?

Common causes are a wrong .env path, a terminal session that didn't reload env vars, or a cached auth/session. Restarting and re-checking auth mode helps.

What Node.js version should I use for Gemini CLI?

Use Node.js 20+.

How do I fix 401/403 quickly when using a custom endpoint?

Verify the correct key variable is set, confirm the endpoint format, and restart the terminal. For Claude, also remove auth conflicts by logging out or unsetting variables.

What does 429 mean in coding CLIs?

It typically indicates rate limiting or quota throttling. Reduce concurrency, add delays, and retry with exponential backoff.

My CLI streams output then hangs—what should I try first?

Test with a short prompt, disable VPN/proxy temporarily, and reduce repo context size. Split large tasks into smaller prompts.